Introduction

Many developers and organizations embark on their journey with Continuous Integration and Continuous Deployment (CI/CD) full of optimism, yet the real-life experiences of countless developers reveal that the path to mastering CI/CD is fraught with complications, unexpected setbacks, and sometimes overwhelming obstacles. As we strip away the buzz and the lofty claims about CI/CD revolutionizing development, we often find developers entangled in tedious manual setups, scrambling to manage breakdowns in production, hindered by team silos, and grappling with complex, fragile systems.

Note: This book is currently in beta. As such, you may encounter content that is still being refined, sections that are being reorganized, or explanations that will be expanded in future updates. We welcome your feedback as we continue to improve and develop this resource.

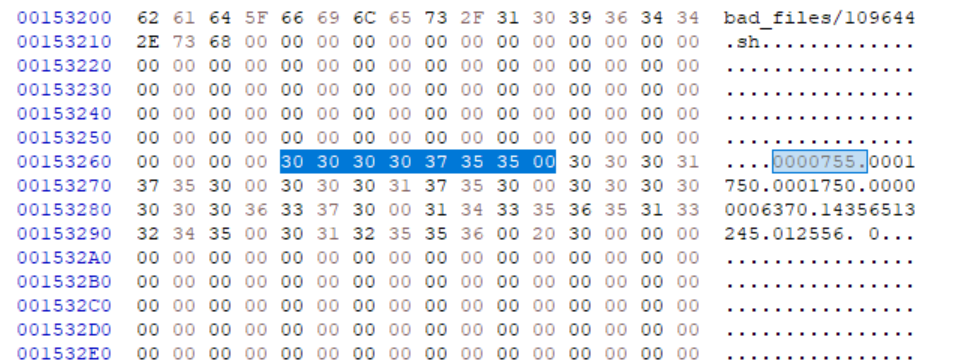

This book is an endeavor to peel back the layers of CI/CD, moving beyond mere automation and frequent code merging. We aim to guide you through the intricacies of what CI/CD truly entails and how to implement it effectively using GitHub Actions as a practical example. While the theory of CI/CD promises streamlined operations and faster deployments, the reality involves navigating through a myriad of challenges that can stymie even the most determined teams.

Readers familiar with foundational concepts like those outlined in works such as Minimum Viable Continuous Delivery will recognize shared principles here, focusing on the essential practices for achieving CI/CD benefits. This book complements such technology-agnostic frameworks by diving deep into the practical 'how-to' using a specific, widely-used tool. Given this strong focus, consider this book a detailed guide to implementing CI/CD specifically with GitHub Actions, bridging the gap between general principles and concrete execution.

What is CI/CD?

Continuous Integration (CI)

Integration is the act of constantly merging your changes with other developers', and vice-versa. It's the act of combining multiple changes, from multiple developers, into a single, cohesive whole, regularly. All developers work on a shared codebase. The product owner or another person (internally) should be able to use your app, or another team can demo their feature--it might not be finished but the application still works as intended.

Continuous Deployment (CD) and Continuous Development (CD)

Continuous Deployment (often confused with Continuous Delivery) is the practice where every change that passes the automated tests and other confidence-inducing procedures is automatically deployed into the production environment with little to no human intervention.

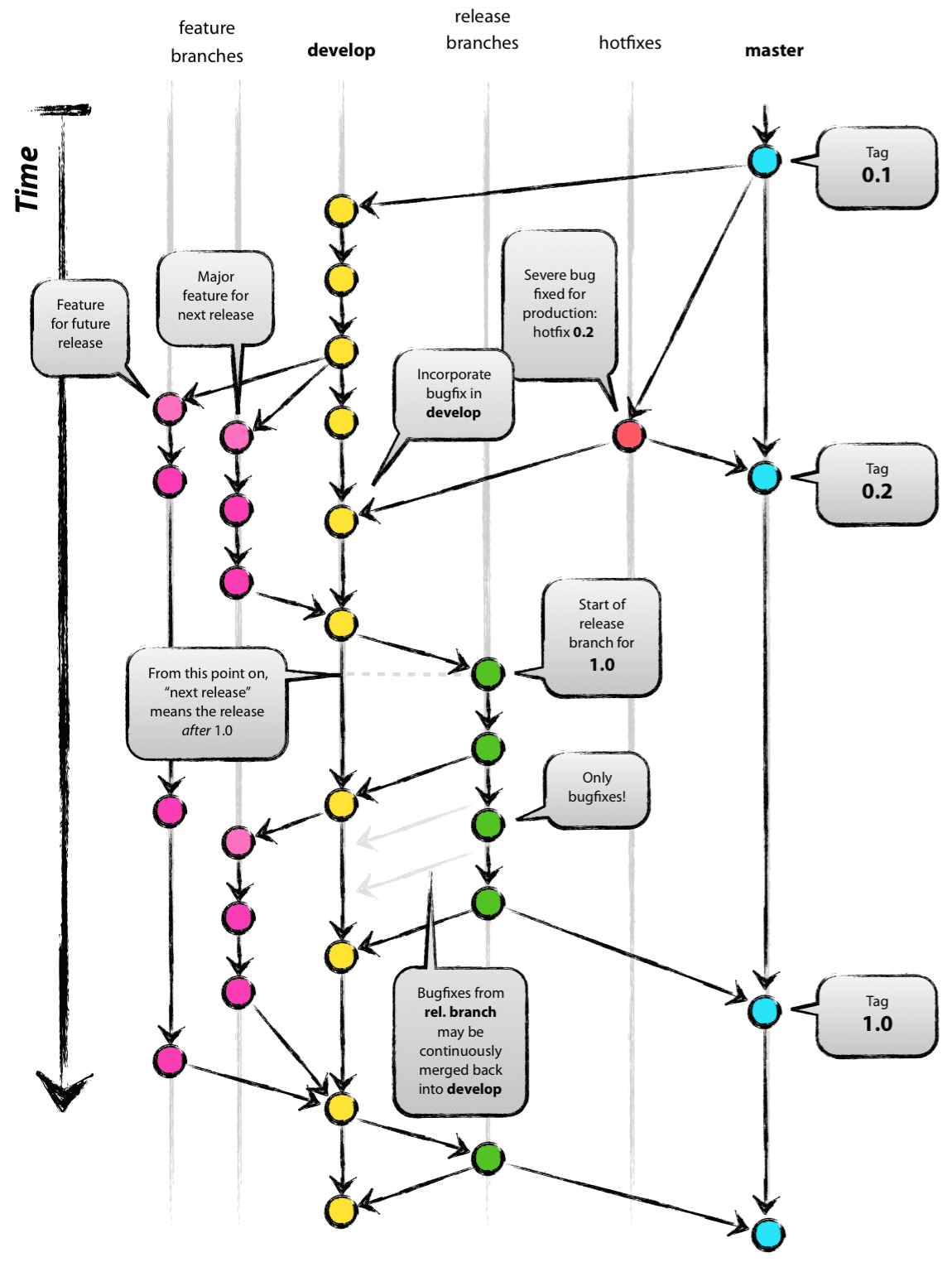

Continuous Delivery, on the other hand, ensures that the code is always in a deployable state, but it may not be deployed to production automatically. Instead, it might require manual approval. It provides the business with the opportunity but not the obligation to deploy at any point. Continuous delivery is not simply an automated pipeline for on-demand deployment. For example, code in long-lived feature branches necessitates retrieving specific versions or bug fixes that require complex version control, which can disrupt other work. Or, the build requires a special ceremony, such as complex testing, an implicit contract with another service that has to be deployed in a certain order, manually run scripts, manual checks, etc. This indicates the code base is not always deployable, thus not fully meeting continuous integration principles. This also includes necessary automated testing to ensure its capacity to be deployed continuously.

Deployments are technical events managed by engineering, releasing (making those features usable by customers) is both an engineering and a business task.

CI/CD

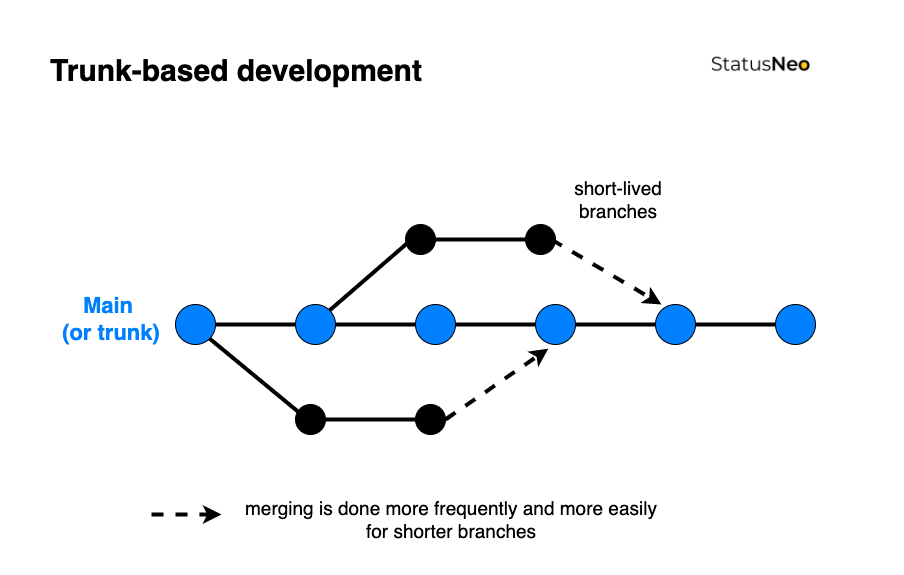

CI/CD aims to avoid "integration hell" by ensuring continuous integration and either continuous delivery or deployment. Work is constantly merged into the main/master branch after it has been verified via code review and the continuous integration pipeline. This involves practices like trunk-based development, where all developers work on a shared branch, promoting constant integration and minimizing merge conflicts.

Aside: Some companies deploy 100 times a day, but more deploys aren't inherently better—they simply indicate a robust, automated process. Continuous deployment automatically releases every quality-approved change, reducing the gap between versions. This means smaller changesets, easier bug identification, and faster rollbacks, all of which help minimize profit loss. Ultimately, frequent deploys reflect strong operational practices and many quality measures, not a superior app.

A misunderstanding of CI/CD is that it's just a build pipeline that continually builds the software. CI/CD requires both technical and cultural shifts, including:

-

Smaller work units: Breaking down features into independently deployable and testable components. This allows the features to be continually deployed, or behind a feature flag, while other features are being worked on. If all features are large and are on their own feature branch, then this defeats the point of CI/CD as the feature has not yet been integrated, that is, it does not co-exist with the rest of the application. Other developers are unable to build around it, and feature flagging is not possible. Idea transmission is still possible, and it is a myth that developers do not communicate with each other if not practicing CI/CD.

-

Modular codebase: Facilitating localized changes without impacting the entire application. This allows other developers to not be blocked while a parallel feature is in development.

-

Focus on rapid feedback: Prioritizing quick delivery of changes and gathering customer insights. If there is no need for fast customer feedback or to test changes, then moving to CI/CD becomes less important.

These shifts require that the application itself is modular and easy to modify, therefore, it could require code changes, depending on your application.

Some cases, such as rewriting the app to use another framework, may require feature branching or interrupting others' work.

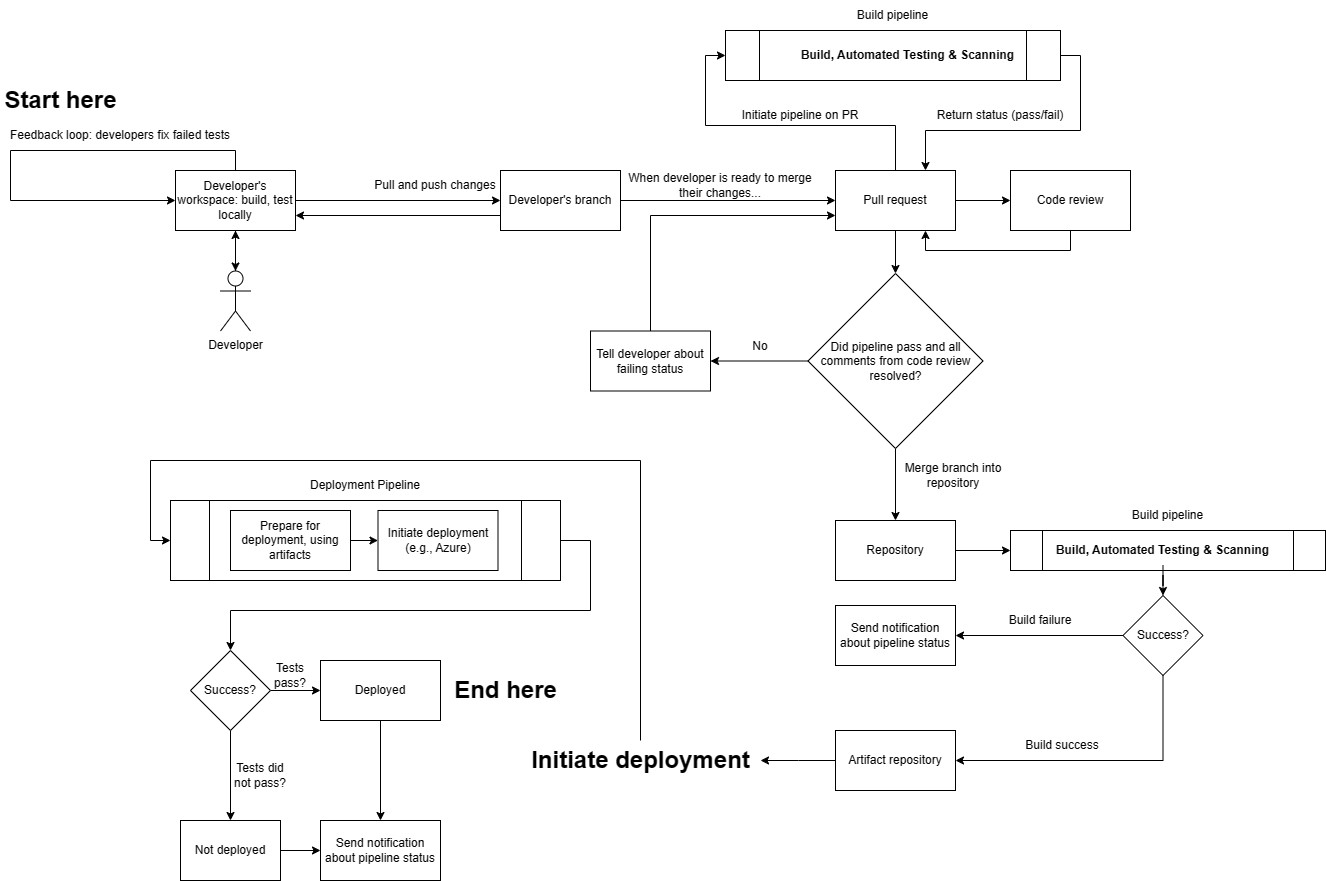

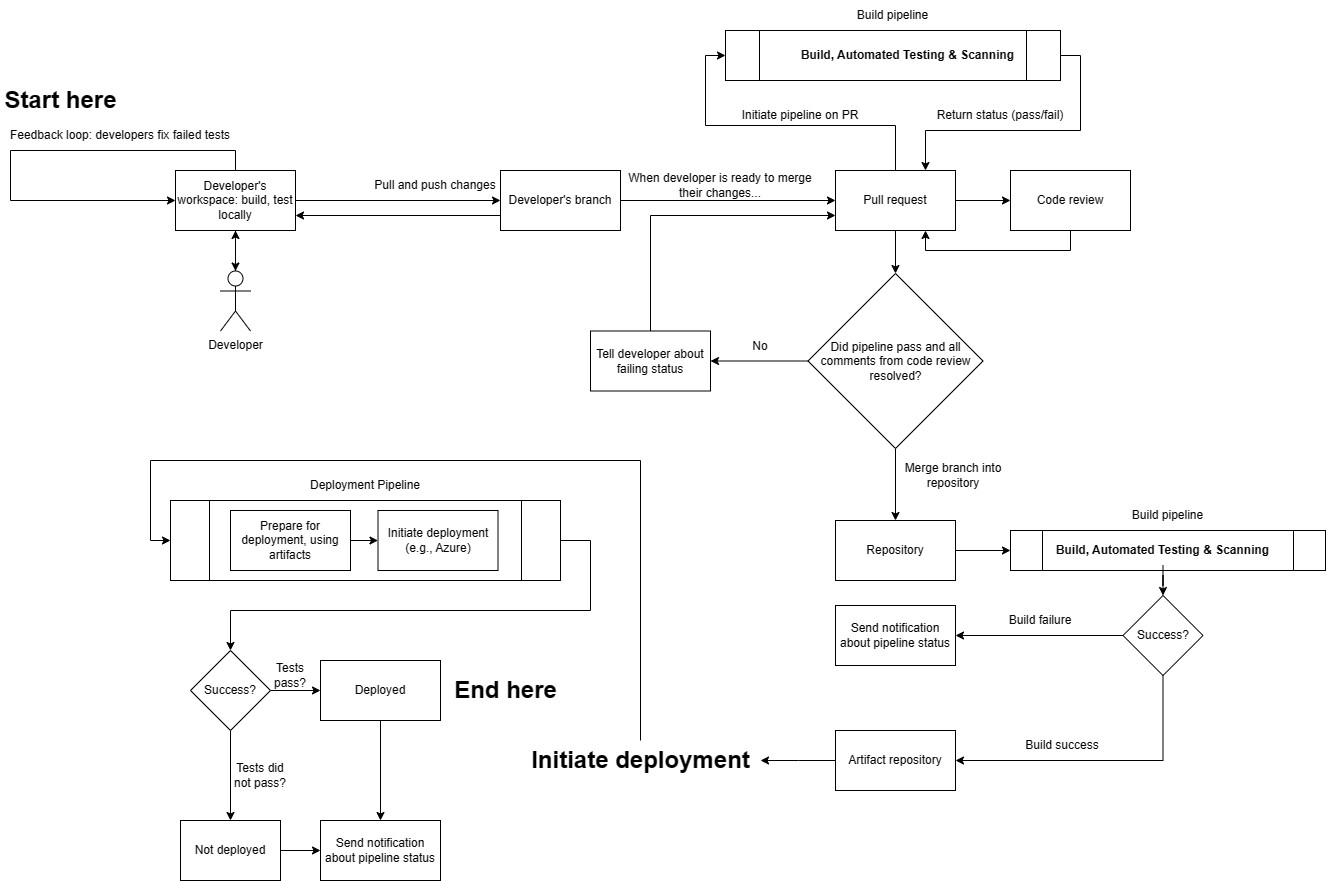

Here is what the software development process looks like when using CI/CD. Note that many of these processes are automated.

Why is CI/CD important?

There are many reasons why a company or a project may use CI/CD. Core Benefits:

-

Faster Development and Deployment: CI/CD enables rapid deployment of small changes, accelerating development and deployment cycles, allowing businesses to be more agile and responsive to customer needs.

-

Improved Code Quality: Continuous integration, automated testing, and code review practices built into CI/CD processes lead to higher-quality code and more reliable software.

-

Increased Collaboration and Transparency: CI/CD encourages collaboration between developers, operations, and QA teams, fostering shared understanding and transparency throughout the development lifecycle.

-

Decoupling of Integration, Deployment, and Release: CI/CD separates these stages, allowing for flexibility in releasing features and testing in production without impacting users.

-

Enhanced Confidence in Changes: Automated testing and build pipelines provide developers with a higher level of confidence in their code, reducing the risk of introducing bugs.

-

Improved Estimation Accuracy: By deploying frequently, teams gain a better understanding of the development process, leading to more accurate estimations.

-

Streamlined Workflow: Automation eliminates manual processes, smoothing workflows, and allowing developers to focus on core development tasks.

-

Support for Experimentation and Innovation: Feature flags enable controlled experimentation and incremental rollouts, allowing teams to test new features and gather feedback without risking the entire application.

Despite these benefits, several challenges can hinder successful CI/CD implementation:

-

Zero-Downtime Deployments: Achieving seamless deployments while managing resources and data integrity requires strategies like blue-green deployments, canary releases, and feature flags.

-

Database Schema Impacts: Even small code changes can disrupt database schemas, necessitating schema migration tools and a disciplined approach to database management.

-

Central Point of Failure: CI/CD creates a central point of failure that demands constant vigilance. Maintaining a "green" pipeline requires rigorous testing, code review, and ongoing maintenance to ensure stability and compliance. Do not rubber stamp PRs.

-

Culture Shift: CI/CD requires a shift in mindset, emphasizing collaboration, shared responsibility, and open communication across teams. This will exaggerate any communication issues, if they exist.

-

Continuous Learning: Teams must invest in ongoing training, keeping their skills up-to-date with evolving CI/CD technologies and security best practices.

-

Clear Objectives: A lack of clarity regarding CI/CD goals can lead to resistance and misaligned expectations. It's crucial to define objectives, communicate the value proposition, and secure stakeholder buy-in.

CI/CD is not a magic bullet. It demands discipline, commitment to quality, and a proactive approach to addressing technical and organizational challenges. However, when implemented effectively, it can significantly accelerate development, enhance software quality, and empower teams to deliver value more efficiently.

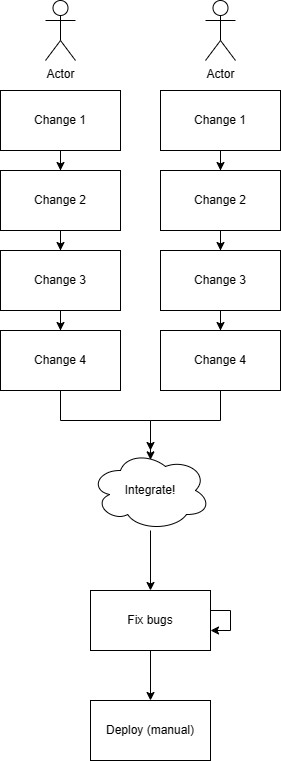

Traditional software development

Traditional software development is a methodology that is difficult to define because there's multiple definitions of what traditional means. This usually means before continuous integration and development was widely popularized, for example prior to 2010.

Traditional Development:

-

Teams often work in silos with limited visibility into each other's work. This does not mean that team members do not communicate with each other, rather, the act of integration is delayed.

-

Slow feedback loops and long development cycles are common.

-

Manual integration and deployment processes are complex and resource-intensive.

-

Late-stage testing limits opportunities for early customer feedback.

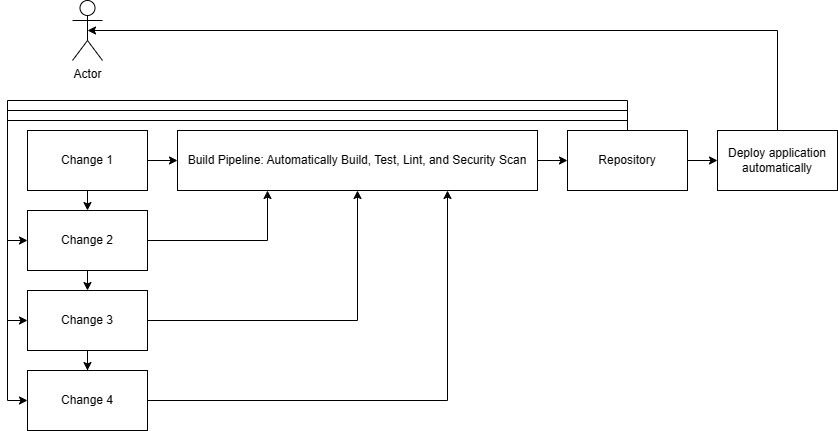

CI/CD Development:

-

Promotes continuous collaboration and transparency through practices like trunk-based development.

-

Enables rapid feedback loops and iterative development with frequent integrations and deployments.

-

Automates builds, tests, and deployments, freeing developers to focus on core tasks.

-

Allows controlled feature rollouts and early customer feedback through feature flags.

The build server or build pipeline

A build server or, more accurately, a build runner or agent, is the environment where automated CI/CD tasks like building, testing, and scanning are executed. While the underlying host machine might be a virtual machine (especially for cloud-provided runners like GitHub's ubuntu-latest), the actual build often runs inside a container. This containerized approach provides a consistent, isolated environment with all necessary tools and dependencies, ensuring reproducibility and acting as a quality gatekeeper for the CI/CD workflow. The runner executes instructions defined in the workflow configuration file.

Build runners/agents are used instead of developer workstations because:

-

Security: These servers handle sensitive resources like company source code and secrets. It is crucial to secure them to prevent unauthorized access and protect against lateral attacks. Simply storing them on a developer's machine means that other software could use the secrets, the secrets are transmitted over other mediums, etc.

-

Consistency and Isolation: Each server, agent, or VM should operate independently to minimize the impact of potential compromises. The agent only runs for a fixed amount of time, then is erased. Developer machines are long-lived, and could have lots of software unnecessary for building the application.

Automation

Automation is essential for CI/CD, streamlining tasks like builds, deployments, and testing. This saves time, improves efficiency, and ensures consistency and reliability, crucial for frequent deployments. However, over-automation can be detrimental, especially for tasks requiring human judgment or adaptability.

The key is to find the right balance, automating repetitive tasks while retaining human oversight for critical decision-making and complex scenarios. Robust error handling and clear guidelines for human intervention are crucial for successful automation.

Trust in automation: Part I. Theoretical issues in the study of trust and human intervention in automated systems. Ergonomics, 37(11), 1905--1922 | 10.1080/00140139408964957

A model for types and levels of human interaction with automation. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans, 30(3), 286--297 | 10.1109/3468.844354

Testing, code review, and quality assurance

Testing and quality assurance are crucial for CI/CD, ensuring software quality and confidence in deployments. While both automated and manual testing play vital roles, they address different aspects:

-

Automated Testing: This process verifies functionality and performance through predefined tests, similar to controlled experiments, providing rapid feedback on code changes. Imagine a chemistry teacher at the front of a classroom, mixing two chemicals and instructing students to watch closely. This scenario serves as an example of a demonstration because the outcome is known beforehand, akin to how these tests predictably assess the impacts of changes in the code.

-

Manual Testing: Leverages human judgment for evaluating usability, aesthetics, and user experience, crucial for aspects difficult to automate.Humans should not be doing the checking aspect.Rather, automated testing should be responsible for that.

-

CI/CD emphasizes automation but doesn't eliminate the need for manual testing. Instead, it allows testers to focus on higher-level tasks requiring human expertise. Maintaining a balance between automated and manual testing is key for efficient, high-quality software development.

-

Skipping quality assurance in CI/CD can be tempting due to the fast-paced nature, but it's essential for ensuring customer satisfaction and protecting the business's reputation.It is additionally very tempting because the lack of automation will not show up for quite some time.

Aside: fire QA, right?! Well, no. QA shifts left, and instead prioritizes testing PRs (which have a smaller scope and smaller changeset.) Since checking (testing an outcome that is known) is done mostly via unit tests, QA can use their human-ness to evaluate the product for quality, usability, functionality, and exploration testing. When a feature is developed under a feature flag, QA can test it in the pre-production environment (feature flag enabled for them), allowing developers to get early feedback.

Rapid Feedback Loops

The essence of CI/CD lies in maintaining business agility through a fast feedback loop. This allows companies, especially startups and small businesses, to rapidly experiment, identify what works, and make quick improvements.

Rapid feedback loops are a multi-pronged approach:

-

Streamlined Local Testing: Developers need easily accessible local testing environments mirroring production. Tools like hot reloading and ephemeral test environments with simple provisioning are crucial.

-

Efficient Build Pipeline: Aim for a build time under 15-20 minutes with automated processes, notifications for failures, and minimal manual intervention.This period of time is arbitrary. However, if the build time is too long, then there's a possibility of frustrating developers as well as not being able to quickly react to feedback from your customers.You will also make it more difficult to quickly push changes should there be a production outage.

-

Timely Code Reviews: Prioritize prompt and thorough PR reviews (ideally within a day) with constructive feedback and a focus on code readability.

-

Regular Deployments: Embrace semi-regular deployments to accelerate feedback loops and customer value delivery (refer to DORA metrics).

-

Comprehensive Monitoring & Alerting: Implement robust monitoring in all environments to detect issues early. Define a severity matrix for appropriate stakeholder notifications, escalating critical incidents promptly.

Infrastructure as Code and modularity

To achieve continuous integration and efficient deployments, it's essential to structure applications so that small changes are manageable. This involves both the application itself and its underlying infrastructure. If making small changes is cumbersome, integration becomes challenging, as larger updates can span multiple components, increasing both the testing burden and the associated risks.

-

Independent Modules: Structure applications with clear boundaries between components. This facilitates isolated changes and reduces testing complexity. This isn't the fact that you must adopt microservices, rather it's just structuring your code to be a modular approach. Modularity leads to smaller, more manageable changes, simplifying testing and increasing development speed.

-

Version-Controlled Infrastructure: Treat infrastructure configurations like code, storing them in version control systems for tracking, reverting, and collaboration.Your application. This could be terraform templates or ARM templates.

-

Eliminate configuration inconsistencies between development, testing, and production, preventing "snowflake servers" and ensuring reliable deployments.

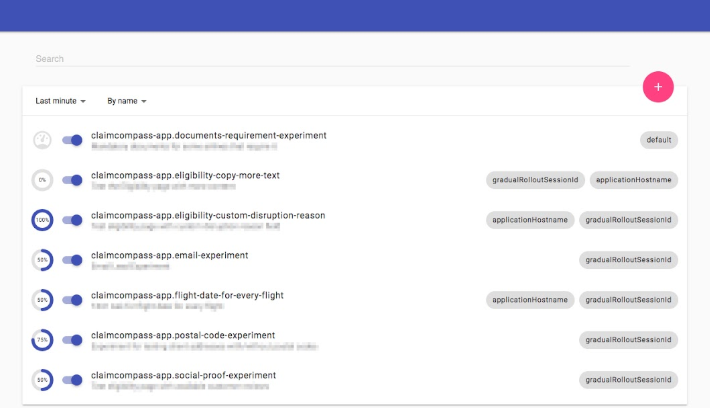

Feature Flags

Feature flags are for experimentation and release. They separate the act of deploying (moving the code to production, managed by engineering) and the act of making the changes usable by customers (commonly associated with a marketing event from the business's side.) They are remote-controlled conditional statements that allow the selective activation or deactivation of application functionalities across different environments (development, integration, pre-production, production) without needing a redeployment. These flags can be toggled within seconds or minutes and can be set based on criteria like geographic location, IP address, or user type, facilitating targeted and gradual feature rollouts.

What exactly constitutes a feature or needs to be released via a feature flag is up to the product managers and the business. Usually not everything is behind a feature flag, for example, features that cannot be code compiled, those that are incomplete to the extent that they have security issues that could harm the product, logging statements, refactors, package upgrades, security fixes, bug fixes, or small changes like typo fixes.

Typically, developers can enable these feature flags by themselves. Here's an example of an application in development, and it shows a special development overlay that allows developers to toggle feature flags.

Implementing feature flags in React with Unleash - Case Study (claimcompass.eu)

Feature flags need not be complicated or require third-party software. You can get started with a simple JSON file with a list of key/value pairs that is outside of the deployment system, but still accessible by your app. This does not require any subscription to a feature flag service. They can also be embedded in your application, for example, in a config file. This approach limits flexibility, however, as a redeployment is needed to change the config file.

This approach is beneficial for trunk-based development, where changes are incremental. Developers can merge new features behind feature flags, allowing others to activate these flags for testing selectively.

Feature flags also enable controlled risk-taking. For example, a promising feature might be released to a small user segment (e.g., 1%) to evaluate performance and gather feedback, minimizing risks of broader release.

Branches versus Feature Flags:

Branches provide isolated workspaces for developers, supporting multiple application versions or development paths. However, unlike branches that delay integration, feature flags allow for integration while controlling feature activation.

Limitations:

Feature flags should not be used to restrict feature access (for example, paid features), as they are often visible and modifiable on the client-side. They are better suited for testing, phased rollouts, and controlled changes.

Maintenance:

Proper feature flag management is crucial. Unused flags should be removed to avoid clutter and potential confusion. Limiting the number of active feature flags helps reduce code complexity and ease debugging.

Summary table,

| Aspect | Branching | Feature Flags |

|---|---|---|

| What It Is | Managing and isolating code changes in separate lines of development. | Tools for remotely controlling the visibility of new features in the production environment. |

| Main Actions | Changes stay within the branch. To make changes visible, merge, copy, squash, or rebase onto a production-bound branch (like trunk/master). | Allowing code changes to exist in production without being visible to everyone. Can be enabled for specific users or scenarios. |

| Visibility to Customers | Changes are not visible to customers unless the branch is deployed to production. Testing in environments like test, dev, experimental is possible. | Feature flags are crucial in managing what customers see in production. They hide or reveal new features based on their status. |

| Specific Considerations | Recommended to deploy the main or trunk branch to production, especially in TBD (trunk-based development). Branches are ideal for testing and isolated development. | Feature flags should be used judiciously, as overuse can complicate application maintenance. They are intended to be temporary and should not replace good branching and merging strategies. |

Version Control System (VCS)

Version control systems are crucial for continuous integration and development because they track changes, simplifying the integration process. For instance, if you have two versions of a document, merging them into one requires a detailed comparison of each word. This task involves identifying and understanding changes. Version control automates this process, significantly reducing the likelihood of errors associated with manually tracking changes. This automation ensures smooth and accurate integration of code changes, forming a cohesive whole.

VCSes show that work has been integrated because it is considered a central source of truth. Multiple copies of the application with different versions mean that there isn't a single source of truth, therefore, we can't know if our changes have been integrated.

VCSs enhance auditability, allowing developers to easily trace back to see when and why code was altered. This is particularly important from a security perspective to ensure that only authorized changes are made. For example, if unauthorized changes occur, they can be quickly identified and reverted.

Culture and communication, collaboration

While CI/CD tools automate integration and deployment, successful implementation requires more than just technology. It demands a fundamental shift in organizational culture and project management.

CI/CD thrives on:

-

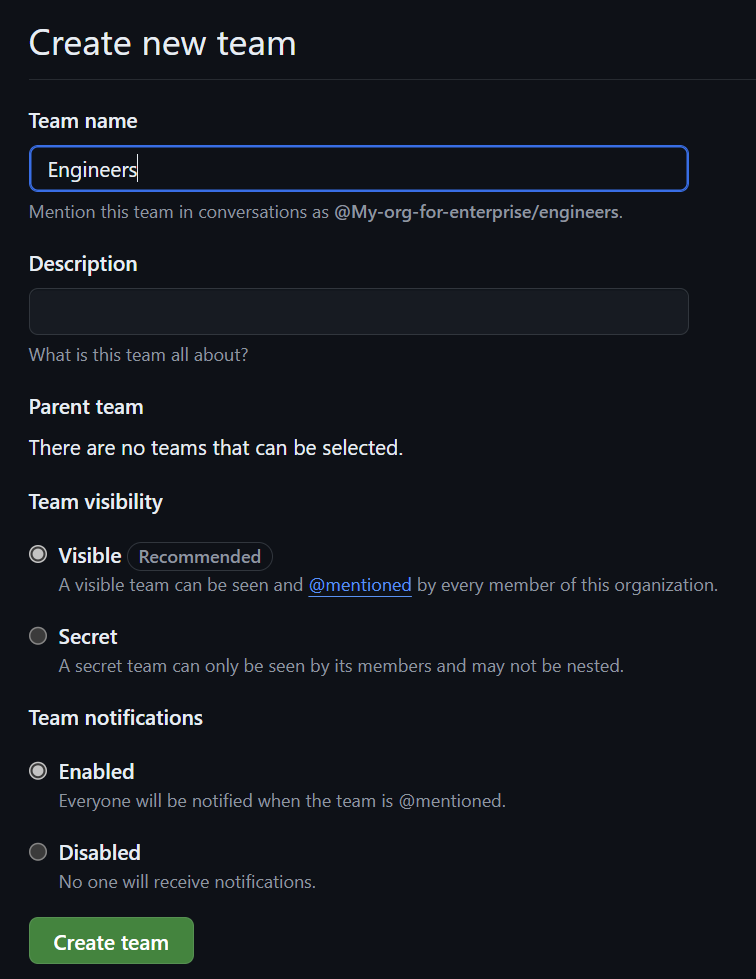

Collaboration and Communication: Teams must work closely, sharing information and coordinating efforts to ensure smooth integration and deployment.

-

Rapid Iteration: Frequent code merges, small feature updates, and continuous feedback loops are essential for maximizing the benefits of CI/CD.

-

Strategic Project Management: Breaking down features into manageable, independently testable units facilitates continuous integration and deployment without disrupting the entire application.

Ignoring the human element of CI/CD can lead to challenges:

-

Batched Changes and Integration Conflicts: Infrequent code merges increase the risk of complex integration issues.

-

Delayed Feedback: Waiting to test in production hinders rapid iteration and learning.

-

Siloed Information and Debugging Difficulties: Poor communication can lead to significant debugging challenges.

CI/CD is not a one-time setup. It requires ongoing maintenance, pipeline updates, and continuous learning to adapt to evolving practices. Effective testing, code reviews, and organizational support for these processes are vital for maintaining a smooth development cycle.

Continuous Deployment/Continuous Delivery

Infrastructure as Code (IaC) represents a transformative approach in managing and provisioning computing resources, utilizing machine-readable definition files rather than traditional physical hardware setups. This automation-focused methodology enhances the setup, configuration, and management of infrastructure, promoting rapid deployments, efficient resource utilization, and consistent, reliable environments. IaC is mainly declarative, targeting the desired end state of the infrastructure while the underlying tooling manages the execution. This is crucial in Continuous Deployment (CD) pipelines where IaC templates are automatically deployed in the cloud, ensuring each deployment is consistent, reproducible, and easily reversible. This aligns with principles like idempotency, immutability, and composability---key for maintaining interoperable and stable components.

The benefits of adopting IaC are extensive, including consistent infrastructure deployments across environments, enhanced reproducibility, and robust version control which acts as a single source of truth. Such structured deployments reduce configuration drifts between different environments such as QA/dev and production, speeding up the feedback loop for developers and boosting security measures. Tools such as Terraform offer cloud-agnostic deployment options, whereas AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager cater to specific cloud environments. Additionally, open-source tools like Ansible and traditional configuration management tools like Chef and Puppet provide further automation capabilities, ensuring thorough enforcement of system states.

Historically, server management was a manual process involving system administrators physically logging into servers to apply changes, a method prone to errors and inconsistencies, especially in complex server environments. This labor-intensive process made replicating servers difficult, often requiring extensive documentation and manual reconfiguration. Before the adoption of IaC, administrators relied on shell scripts to manage and synchronize server configurations, though these scripts were limited in handling complex scenarios effectively. The rise of configuration management tools in the mid-to-late 2000s, such as CFEngine, Puppet, and Chef, began to address the issue of "snowflake servers"---highly customized servers difficult to replicate from scratch. Despite the advancements, many continued using shell scripts and command-line tools for their simplicity and familiarity. Today, IaC practices, exemplified by Terraform and other cloud-specific tools, have largely superseded these older methods, providing scalable, reliable, and repeatable server environment setups.

provider "aws" {

region = "us-west-1"

}

resource "aws_vpc" "sample_vpc" {

cidr_block = "10.0.0.0/16"

... // Additional configurations

}

resource "aws_subnet" "sample_subnet" {

vpc_id = aws_vpc.sample_vpc.id

cidr_block = "10.0.1.0/24"

... // Additional configurations

}

resource "aws_instance" "sample_ec2" {

ami = "ami-0c55b159cbfafe1f0"

instance_type = "t2.micro"

subnet_id = aws_subnet.sample_subnet.id

... // Additional configurations

}

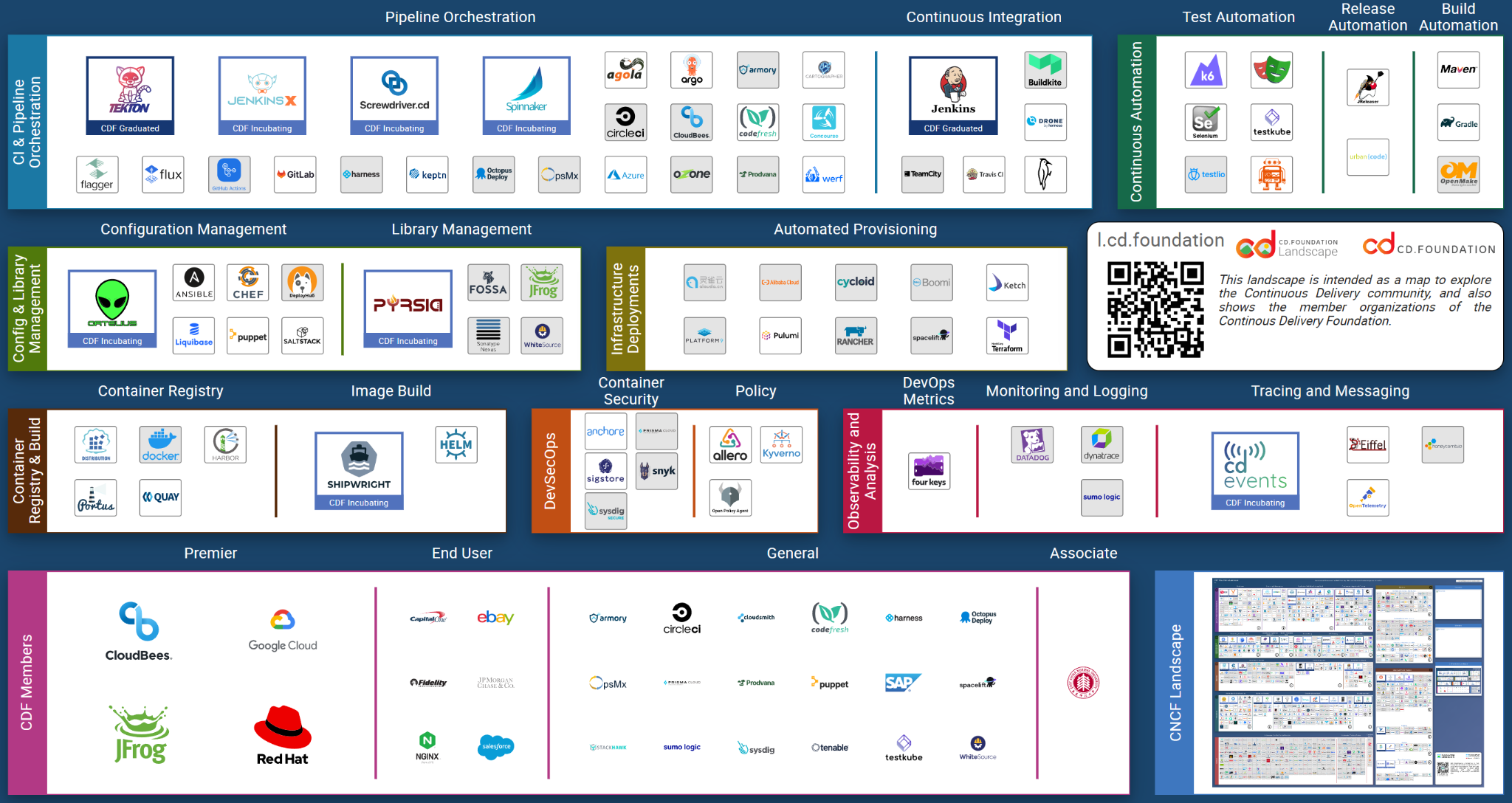

Providers and hosting

Providers fall into two overlapping categories: CI/CD tooling and application hosting infrastructure. You can mix and match—for example, use GitHub Actions for CI/CD while hosting your application on AWS, Azure, or Google Cloud. Sticking with a provider you already have a contract with can streamline integration with your security policies.

CI/CD Tools:

- GitHub Actions: Built into GitHub for automated build, test, and deployment workflows.

- GitLab CI/CD: An integrated solution with built-in CI/CD and version control.

- Jenkins: A flexible, open-source automation server with a vast plugin ecosystem.

- CircleCI: A cloud-based service known for ease of integration, Docker support, and parallel builds.

- Azure DevOps: A comprehensive suite covering planning, coding, building, and deploying.

- Bitbucket Pipelines (Atlassian): Integrated CI/CD service within Bitbucket Cloud, offering a cloud-native alternative.

- Travis CI: A hosted service that integrates well with GitHub and Bitbucket.

Infrastructure Providers:

- AWS: Offers comprehensive cloud services with CI/CD tools like CodePipeline and CodeBuild.

- Azure: Provides robust hosting alongside Azure Pipelines and other DevOps services.

- Google Cloud Platform: Features Cloud Build and strong support for containerized workflows.

- IBM Cloud: Delivers end-to-end DevOps toolchains integrated with popular tools.

- DigitalOcean: A straightforward platform that supports Kubernetes and common CI/CD integrations.

Terminology

This book is somewhat focused on GitHub Actions, but tries to provide a provider-agnostic view. Some of the terms might be a bit different depending on your CI/CD provider. Here is a table that helps clarify.

| Definition | Generic Term | Jenkins | GitHub Actions | GitLab CI/CD | CircleCI |

|---|---|---|---|---|---|

| Build Step: A single CI/CD task (e.g. compile, test, deploy). | Build Step | Build Step | Job | Job | Job |

| Environment: The runtime setup (OS, tools, variables, network). | Environment | Node | Runner | Runner | Executor |

| Workflow: A series of tasks defining the build process. | Workflow | Pipeline | Workflow | Pipeline | Workflow |

| Trigger: An event (commit, PR, schedule) that starts the pipeline. | Trigger | Build Trigger | Event | Trigger | Trigger |

| Secrets: Sensitive data (passwords, tokens, keys) used securely. | Secrets | Credentials | Secrets | Variables | Environment Variables |

| Container: An isolated package with code, runtime, and tools. | Container | Agent/Docker Agent | Container | Docker Executor | Docker |

| Configuration: Files specifying build settings (e.g. YAML). | Configuration | Jenkinsfile | .github/workflows/* | .gitlab-ci.yml | .circleci/config.yml |

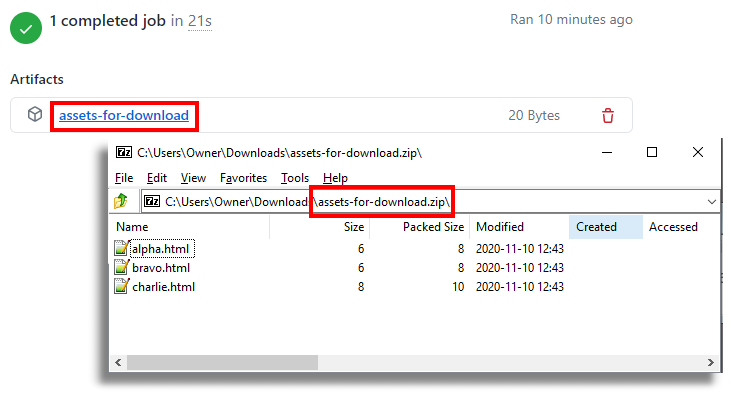

| Artifacts: Files produced by the build (binaries, docs, containers). | Artifacts | Build Artifacts | Artifacts | Artifacts | Artifacts |

| Cache: Stored build data (dependencies, compiled code) for faster runs. | Cache | Workspace | Cache | Cache | Cache |

| Parallelism: Running multiple tasks concurrently to speed builds. | Parallelism | Parallel Builds | Matrix Builds | Parallel Matrix | Parallel Jobs |

| Build Status: Indicator of build success or failure. | Build Status | Build Status | Build Status | Build Status | Build Status |

Further readings

Thanks for reviewing my book!

What I am looking for:

- Sections that should be removed (have no relevance to the book at all), added, or are debatable (provide a case study or references).

- What sections you'd like me to write more about, what sections are neutral, and what sections are boring.

- High-level overview of any organization changes (e.g., rearranging the sections in the table of contents).

- Technical inaccuracies (when possible).

- Changing the table of contents to better align with the audience.

- Whether you would recommend this book to your friends (I promise I won’t hold you to it).

- Whether the current table of contents may be sufficient to meet the page count goal, or I may need to write more about other sections.

What I am not looking for as much:

- Spelling, grammar, or formatting, unless formatting makes the text unreadable.

- Nit-picks.

Target Audience: Software developers, system administrators, and DevOps professionals with 1-3 years of experience, seeking to implement efficient CI/CD practices in startups and small businesses.

Focus: This practical guide provides a streamlined approach to setting up and managing CI/CD pipelines with limited resources, emphasizing business needs and rapid deployment.

Key Features:

- Advanced Beginner/Intermediate Level: Goes beyond introductory concepts, catering to developers with existing programming, testing, and Git experience.

- Cost Management Strategies: Practical tips for optimizing runner usage, leveraging free tiers, and avoiding unexpected billing.

- GitHub Actions Focus: Detailed walkthroughs and pitfalls of GitHub Actions, with a brief overview of other CI/CD providers.

- Efficient Pipeline Setup: Streamlined workflows, avoiding common pitfalls and unnecessary debugging, with a focus on business value.

- Trunk-Based Development: Emphasis on frequent deployments and rapid integration, with concise mentions of alternative branching strategies.

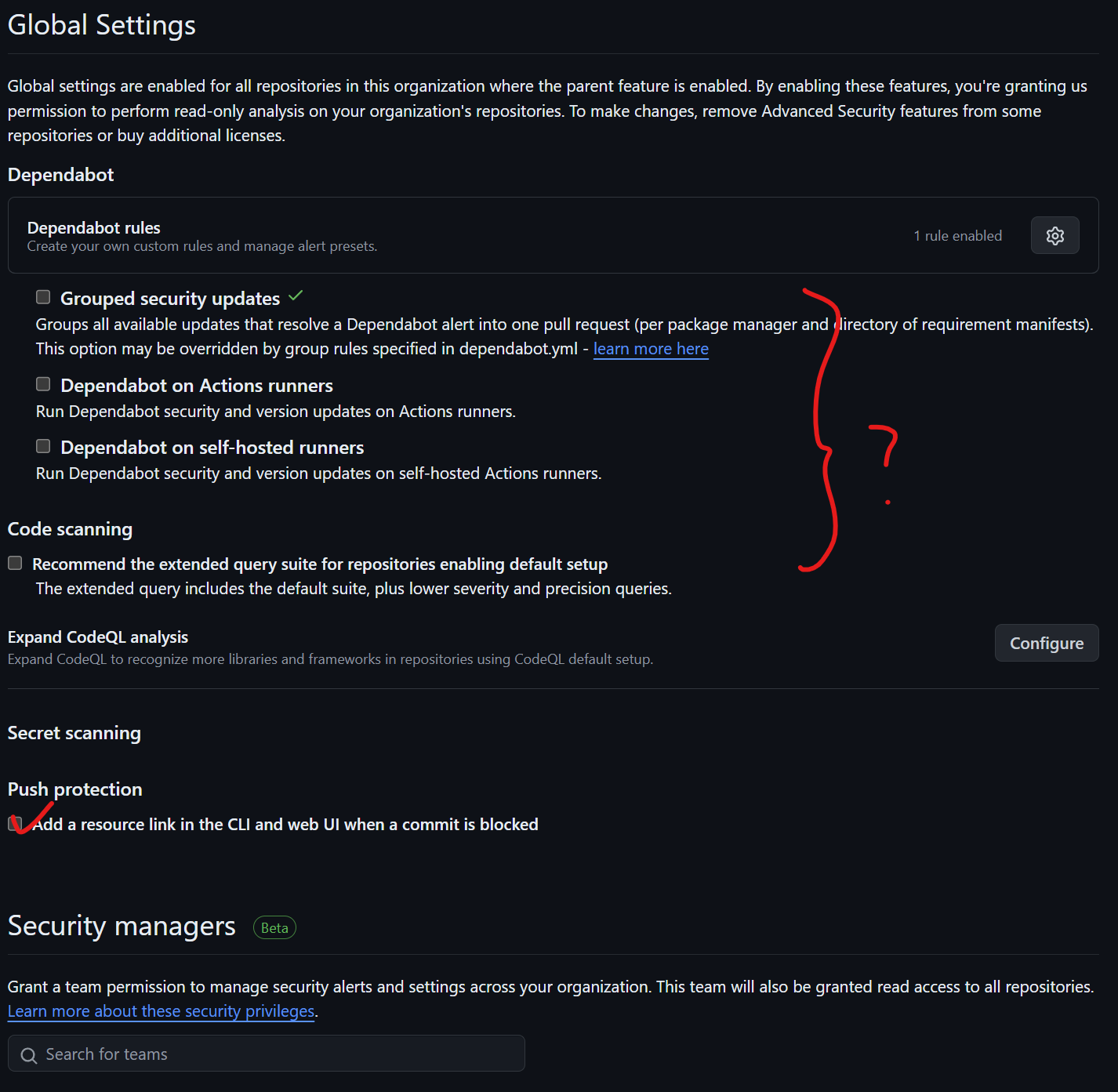

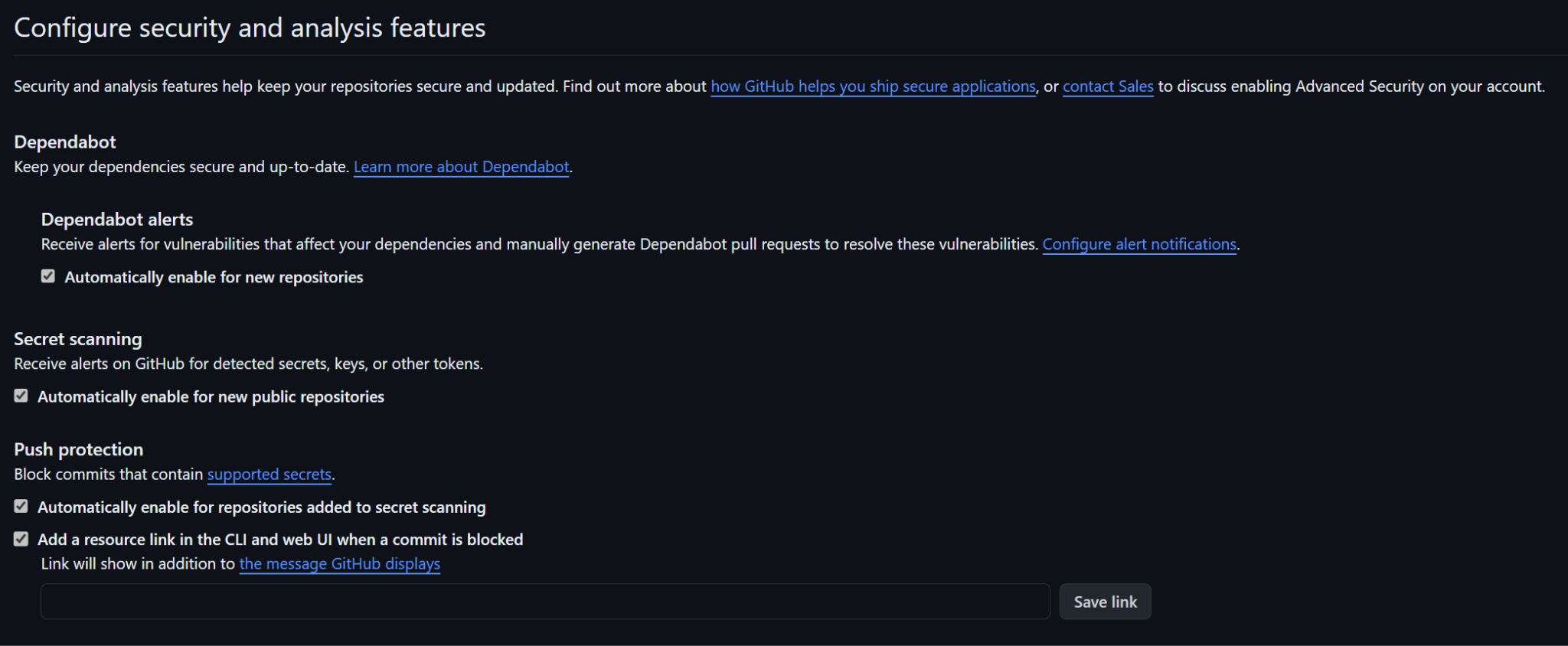

- Security Best Practices: Basic security scanning tools and techniques, secrets management, and prioritization of security alerts (e.g., Dependabot).

- Effective Testing Strategies: Writing impactful tests, managing manual testing, and aligning test strategies with business goals.

- Practical Deployment and Monitoring: Deploying updates quickly, handling rollbacks, and understanding the importance of continuous monitoring.

- Automation vs. Manual Processes: Identifying what to automate and what to keep manual in a dynamic startup environment.

- Real-World Context: Touches on Agile methodologies, regulatory considerations (e.g., FDA), and the HP case study for embedded systems.

- Emphasis on Practicality: Real-world scenarios, such as receiving phone alerts for production issues, and adapting CI/CD to dynamic environments.

This book provides the essential knowledge and practical skills needed to successfully implement and manage CI/CD, empowering developers to streamline their workflow, accelerate deployments, and improve software quality.

Book will have about 350 pages when complete.

CI/CD Introduction and Overview

Introduction to CI/CD

Consolidated from Introduction_to_CI_CD.md.

Preserved content excerpts (from Introduction_to_CI_CD.md)

What is CI/CD?

- CI/CD is a methodology and toolchain for integrating and shipping small changes frequently.

- It reduces “integration hell” by continuously merging work and validating it automatically (builds/tests).

- It requires both technical practices (automation, tests, reproducible builds) and cultural practices (small PRs, fast feedback, fix-the-build discipline).

Continuous Integration (CI)

- CI is the practice of integrating changes into a shared codebase frequently.

- Automation (build + tests + lint) is the baseline “confidence gate” before merging.

Continuous Delivery vs Continuous Deployment (CD)

- Continuous Delivery: the software is always deployable, but promotion to production may require approval.

- Continuous Deployment: changes that pass the pipeline are deployed automatically (often gated with feature flags / staged rollout).

Note: “environment” is overloaded (runtime environment, environment variables, and GitHub Environments).

For a cross-platform terminology table (Jenkins/GitHub Actions/GitLab/CircleCI), see the CI/CD Overview and Diagram section below.

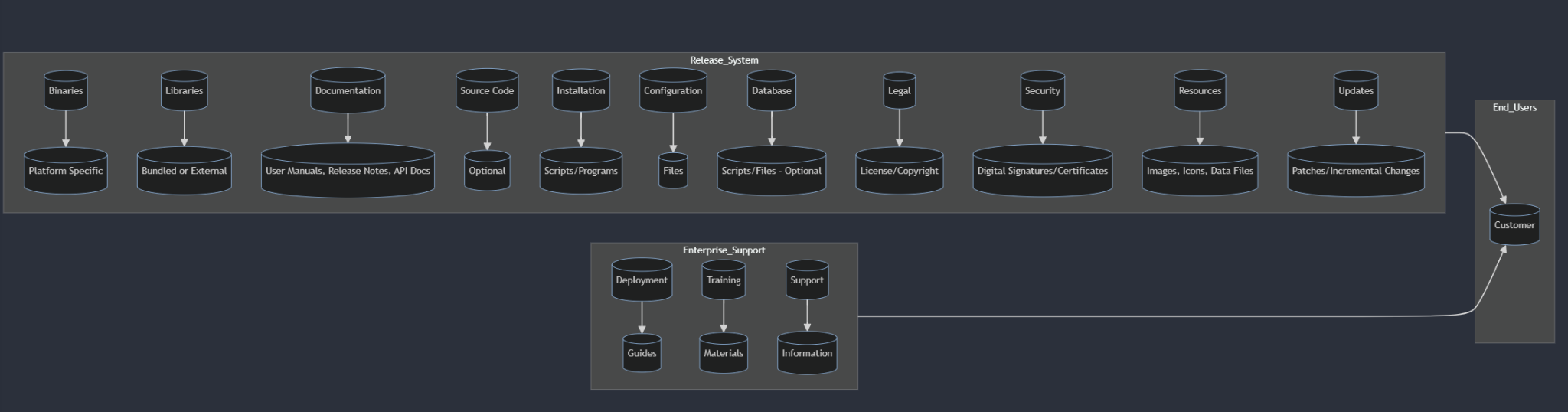

CI/CD Overview and Diagram

Consolidated from CI_CD_Overview_and_Diagram.md with process summary, artifacts, deployment strategies, and cross-platform terminology table.

Preserved content excerpts (from CI_CD_Overview_and_Diagram.md)

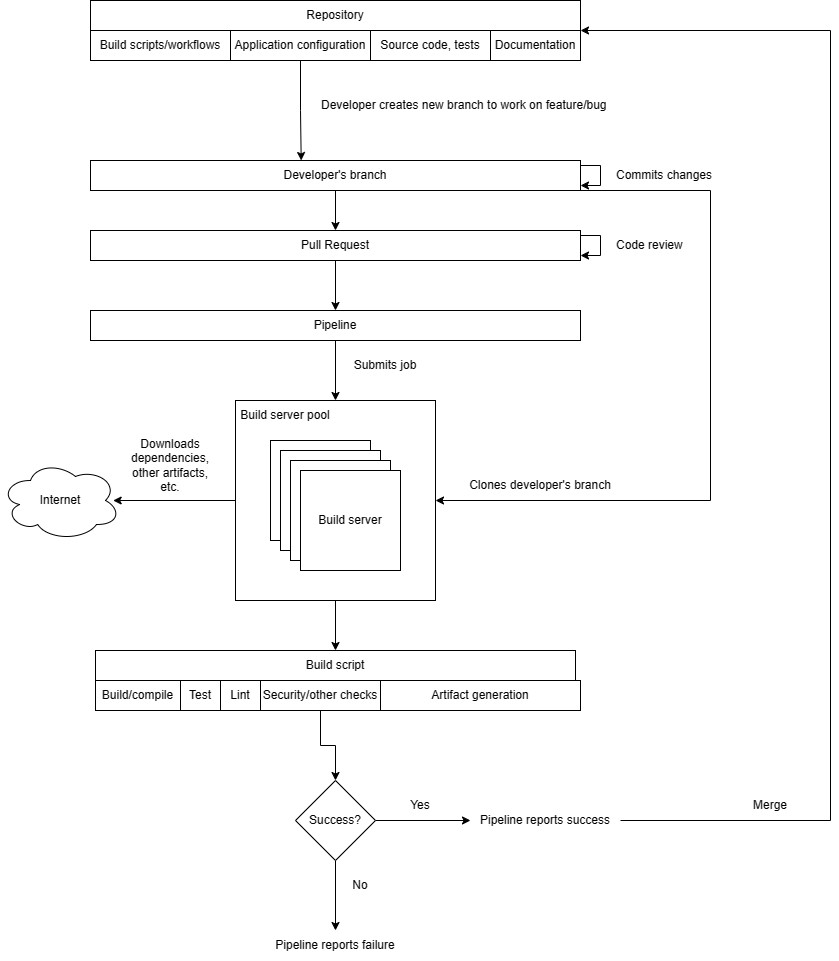

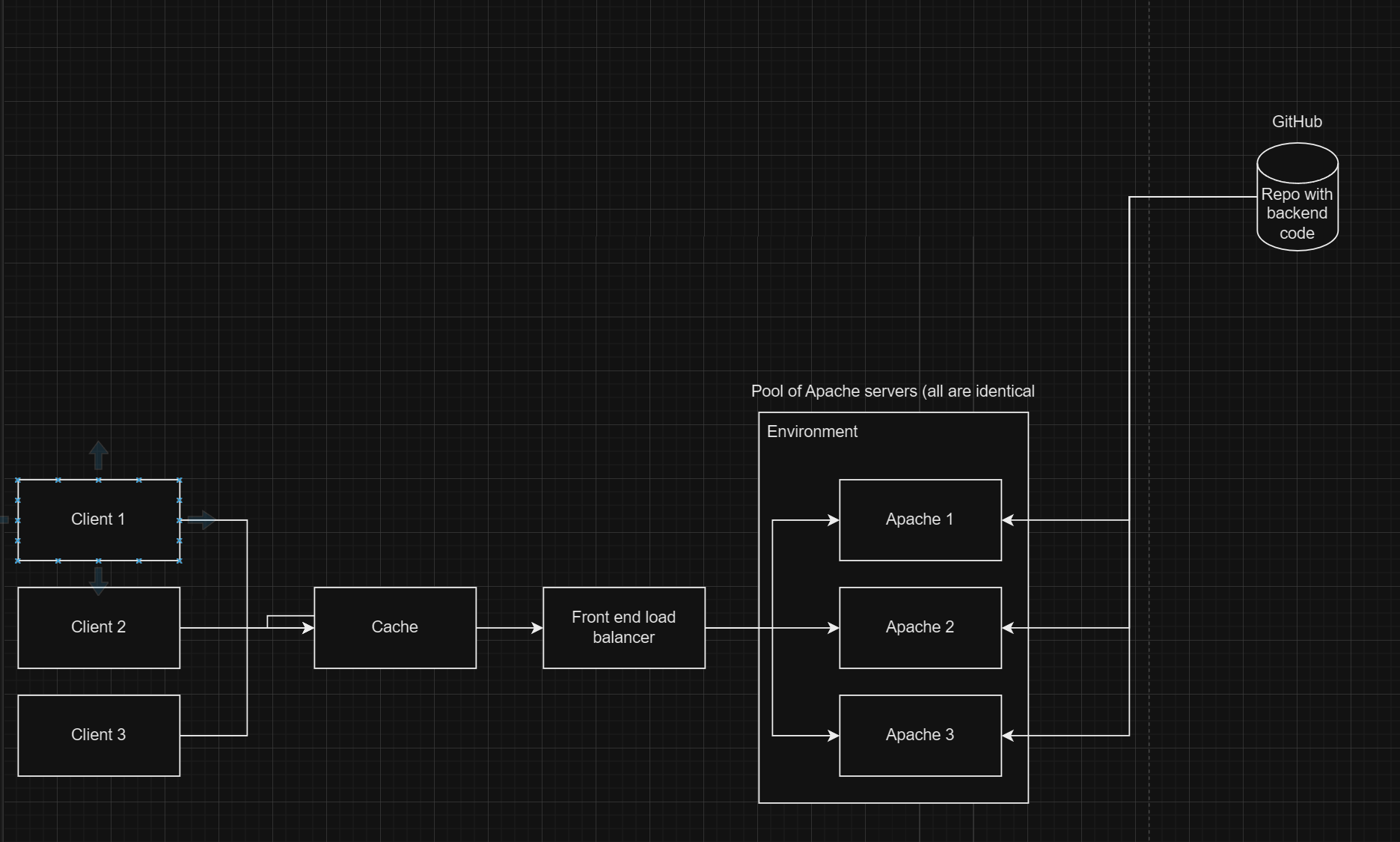

What’s happening in the diagram

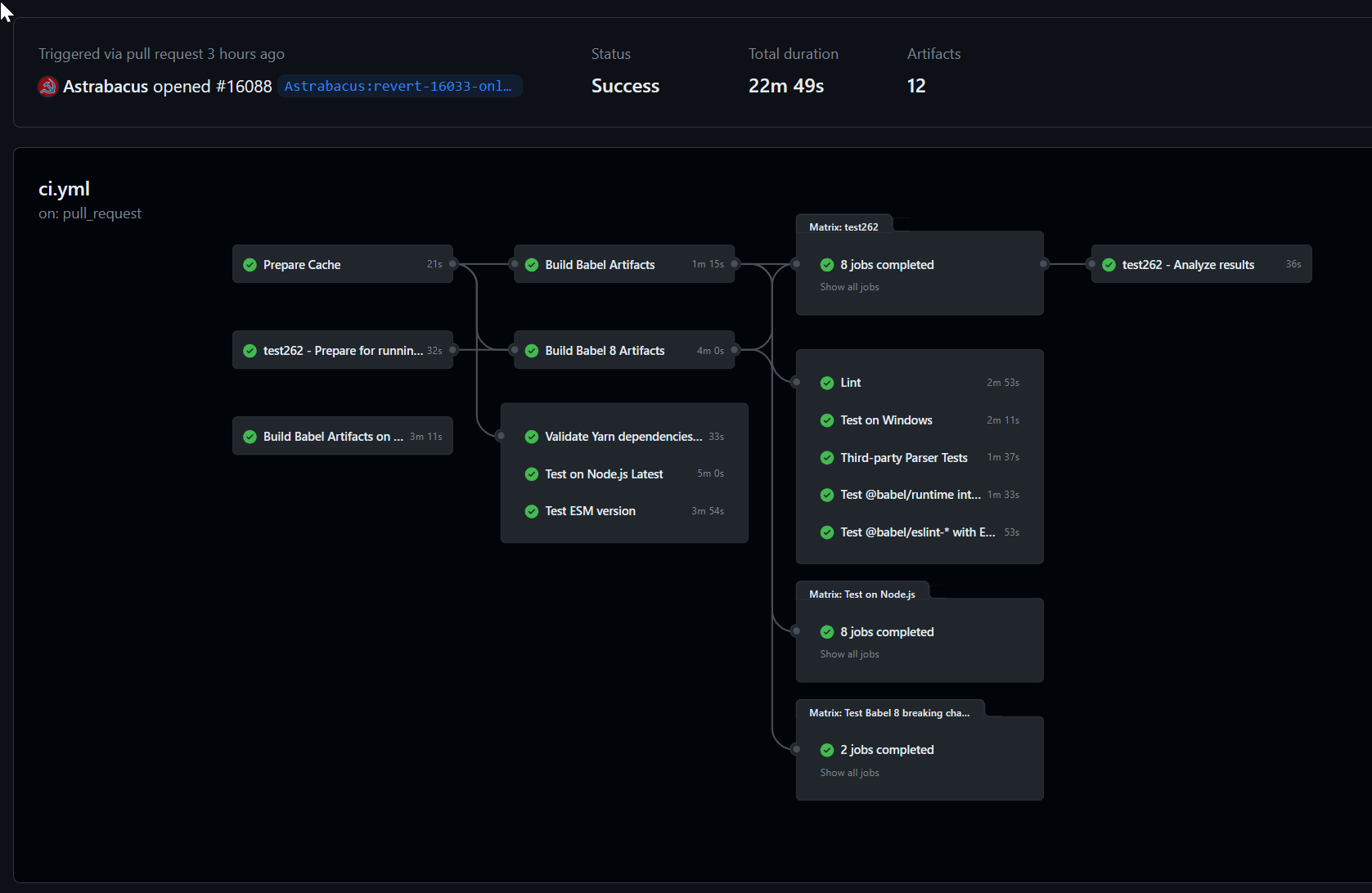

- After tasks are broken down, developers implement them and open a pull request (PR). This triggers a CI pipeline (build, test, lint). The pipeline must succeed before the PR is merged.

- A successful CI run publishes build artifacts to an artifact repository. At this stage, artifacts exist but are not yet customer-accessible.

- A deployment pipeline (CD) promotes a selected artifact to an environment using infrastructure as code (IaC) so infrastructure and configuration are reproducible (avoid “snowflake” machines).

- CD can deploy work-in-progress safely when the feature is gated behind a feature flag (code can be present in production but inactive).

- Common rollout strategies include:

- Blue/green: two environments (blue = current, green = new) for zero-downtime cutover.

- Incremental/canary: release to a subset first, then gradually increase exposure.

- Deployment does not necessarily mean release: deploy, deliver, and release are distinct concepts in modern pipelines.

- Post-deploy, use continuous monitoring so issues can be detected quickly and rollbacks can occur if needed.

Common steps in build/deploy workflows

- Trigger: pipeline runs on events (PR opened, merge to main, etc.).

- Checkout and dependencies: runner checks out repo and restores dependencies from trusted sources.

- Compilation/build: compile/transpile where applicable (language-dependent).

- Linting/static analysis (optional): style, quality, and some bug patterns.

- Automated tests:

- Unit tests

- Integration tests

- End-to-end tests

- Artifact publishing: publish immutable build outputs to an artifact repository.

- Deployment: select an artifact and deploy to the target environment (often with additional checks).

Cross-platform terminology (CI/CD)

| Generic term | Definition | Jenkins | GitHub Actions | GitLab CI/CD | CircleCI |

|---|---|---|---|---|---|

| Build step | A single task/command as part of the build process. | Build Step | Job | Job | Job |

| Environment | The compute environment where build/tests run (OS/tools). | Node | Runner | Runner | Executor |

| Workflow | Sequence of jobs/steps defining the CI process. | Pipeline | Workflow | Pipeline | Workflow |

| Trigger | Event/condition that starts CI/CD. | Build Trigger | Event | Trigger | Trigger |

| Secrets | Sensitive build/deploy data (passwords, keys, tokens). | Credentials | Secrets | Variables | Environment Variables |

| Container | Isolated runtime environment for predictable execution. | Agent/Docker Agent | Container | Docker | Docker Executor |

| Configuration | File(s) that define the CI/CD behavior. | Jenkinsfile | .github/workflows/* | .gitlab-ci.yml | .circleci/config.yml |

| Artifacts | Files produced by builds (binaries, bundles, packages). | Build Artifacts | Artifacts | Artifacts | Artifacts |

| Cache | Temporary storage to speed up subsequent runs. | Workspace Cache | Cache | Cache | Cache |

| Parallelism | Ability to run jobs concurrently. | Parallel Builds | Matrix Builds | Parallel | Parallel Jobs |

| Build status | Outcome indicator (success/fail/in-progress). | Build Status | Check | Pipeline Status | Build Status |

Adopting CI/CD and Makefile Example

Consolidated from Adopting_CI_CD_and_Makefile.md with staged adoption advice and simple makefile.

Preserved content excerpts (from Adopting_CI_CD_and_Makefile.md)

Prerequisites

- Ensure the codebase is in version control (e.g., Git).

- Ensure you can build from the command line (no “IDE-only” builds).

Start small and iterate

- Start with a basic pipeline that builds your targets and notifies on failure.

- Treat the pipeline as production infrastructure: a broken pipeline must be fixed immediately.

- Add capabilities incrementally:

- Static analysis

- Unit tests (start with new code and high-risk areas)

- Formatting enforcement

- Metrics and dashboards (build time, artifact size, coverage)

- On-target testing (automate flashing + running + collecting results)

Makefile example

main.o: main.c mathFunctions.h utilFunctions.h gcc -c main.c

utilFunctions.o: utilFunctions.c utilFunctions.h gcc -c utilFunctions.c

mathFunctions.o: mathFunctions.c mathFunctions.h gcc -c mathFunctions.c

Important terms

-

Build pipelines (or just pipelines) are simply scripts that developers maintain to perform useful work, such as building your application, generating build artifacts, or deploying your application. It is called a pipeline because the way it is structured encourages a one-way dataflow, and a set of goals that are or are not achieved.

-

They are central to CI/CD, and developers will be maintaining them constantly, and updating them. Therefore, it's very important that you know the right terminology for them, how they work, and how to create them. If, for example, the deployment pipeline fails, then it must be fixed quickly, because this is usually the only route to production. Therefore, it is important to know what they are, how to create them, and how to debug them quickly and effectively.

Disambiguation of terms between CI providers

-

This book is somewhat focused on GitHub Actions, but tries to provide a provider-agnostic view. Some of the terms might be a bit different depending on your CI/CD provider. Here is a table that helps clarify.

-

Definition Generic Term Jenkins GitHub Actions GitLab CI/CD CircleCI

Build Step: A build step is a single task or command within a CI/CD pipeline. It's a specific action to be executed, such as compiling code, running tests, or deploying software. Build Step Build Step Job Job Job

Environment: In software development, an environment refers to a setup where software runs. This can include factors like the operating system, available software and tools, system variables, and network access. Different environments (like development, testing, and production) mimic different stages of the software lifecycle. Environment Node Runner Runner Executor

Workflow: A workflow is a sequence of tasks that process a set of data. In CI/CD, a workflow is a set of rules for defining the build process, typically comprising multiple jobs or build steps. Workflow Pipeline Workflow Pipeline Workflow

Trigger: In CI/CD, a trigger is an event that initiates the execution of a workflow or pipeline. Common triggers include code commits, pull requests, scheduled times, or manual intervention. Trigger Build Trigger Event Trigger Trigger

Secrets: Secrets are sensitive data, such as passwords, tokens, or keys, essential for the operation of applications and the security of resources. In CI/CD pipelines, secrets are used to access resources without exposing them in the code or workflow definitions. Secrets Credentials Secrets Variables Environment Variables

Container: A container is a lightweight, executable package that includes everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings. Containers are isolated from each other and the host system, ensuring consistency across different environments. Container Agent/Docker Agent Container Docker Executor Docker

Configuration: Configuration in software development refers to the settings and parameters that define how software or hardware operates. In the context of CI/CD, configuration files (like YAML files in GitHub Actions) specify the parameters and settings of the build process. Configuration Jenkinsfile .github/workflows/* .gitlab-ci.yml .circleci/config.yml

Artifacts: Artifacts are files or data that are produced as a result of a build step or job in a CI/CD pipeline. These can include compiled code, binaries, libraries, containers, and documentation. Artifacts Build Artifacts Artifacts Artifacts Artifacts

Cache: In CI/CD, caching refers to the practice of storing a part of the build process, like dependencies or compiled code, so that it can be reused in subsequent runs, improving build speed and efficiency. Cache Workspace Cache Cache Cache

Parallelism: Parallelism in CI/CD is the execution of multiple build steps or jobs simultaneously. It is used to speed up the build process by dividing the workload across multiple runners or agents. Parallelism Parallel Builds Matrix Builds Parallel Matrix Parallel Jobs

Build Status: Build status is an indicator of whether a build or integration process in a CI/CD pipeline succeeded or failed. It provides immediate feedback on the health and success of a change or a set of changes made in the repository. Build Status Build Status Check Pipeline Status Build Status

- Some testing environments might have different terms. These might be called "Development Environment", Dev, QA, Staging, UAT, PPE, Testing, Experimental, or Beta. These terms may have different connotations, depending on which environments you are using, and for which purpose.

What steps should my workflow have?

The steps can be grouped by major themes such as Release Management, Build Process, Docker and Container Management, Version Control, Testing, Caching, Environment and Setup, and Miscellaneous Tools. Each sub-theme under these major themes is based on the actions that the steps are implied to perform.

Each major theme will be annotated with its summed frequency and ordered according to the sum. Sub-themes will be described along with speculative actions they might be performing.

Release Management (Total: 5308)

Total frequency calculated by summing all frequencies in this theme.

-

Release Creation and Publication (Total: 3417)

-

Creating GitHub releases, identifying upload URLs, and handling release drafts.

-

Tag Management (Total: 936)

-

Handling version tags, extracting tag information, and managing release versions.

-

Version Bumping and Semantic Versioning (Total: 572)

-

Managing version increments and providing semantic versioning guidance.

-

Changelog and Release Notes (Total: 383)

-

Generating and organizing changelog entries, and managing release notes.

Build Process (Total: 1462)

Total frequency calculated by summing all frequencies in this theme.

-

Compilation and Assembly (Total: 455)

-

Compiling code and managing compilation status.

-

Build Utilities (Total: 373)

-

Handling various build utilities and helpers.

-

Continuous Integration (Total: 634)

-

Managing CI processes, organizing build artifacts, and preparing environments.

Docker and Container Management (Total: 1321)

Total frequency calculated by summing all frequencies in this theme.

-

Docker Image Handling (Total: 879)

-

Building, tagging, naming, and pushing docker images.

-

Container and Platform Configuration (Total: 292)

-

Setting up container and platform specifics such as platforms and labels.

-

Miscellaneous Docker Related (Total: 150)

-

Other Docker-related tasks and cache management.

Version Control (Total: 2048)

Total frequency calculated by summing all frequencies in this theme.

-

Version and Release Information (Total: 1064)

-

Retrieving and setting version data, including semantic versioning.

-

Branch Management (Total: 577)

-

Extracting and managing branch information.

-

Commit and Repository Info (Total: 407)

-

Checking repository states, managing commit data.

Testing (Total: 267)

Total frequency calculated by summing all frequencies in this theme.

-

Test Execution and Reporting (Total: 267)

-

Executing tests and outputting results or statuses.

Caching (Total: 953)

Total frequency calculated by summing all frequencies in this theme.

-

General Caching (Total: 520)

-

Managing cache entries and directory paths.

-

Specialized Caching (Total: 433)

-

Caching specific tools or environments such as Node modules or Composer.

Environment and Setup (Total: 1323)

Total frequency calculated by summing all frequencies in this theme.

-

Environment Configuration (Total: 851)

-

Populating environment variables, managing configurations.

-

Project and System Setup (Total: 472)

-

Preparing the build environment, checking system states.

Miscellaneous Tools (Total: 405)

Total frequency calculated by summing all frequencies in this theme.

-

Utility Tools (Total: 405)

-

Varied utilities such as timestamp retrieval, setup of tools like GPG, or workflow operation utilities.

Please note that this is an approximation based on the step names. Actual actions performed by these steps would depend on the specific configurations and logic within the GitHub Actions definitions.

=====================================================================================================

For the Version and Release Information sub-theme, we can further break it down into:

-

Version Extraction and Parsing (Total: 839)

-

Operations that focus on extracting version strings, parsing, or converting version notation.

-

Version Management (Total: 237)

-

Actions associated with incrementing a version, checking version alignment, or managing version-related metadata.

-

Release Versioning (Total: 65)

-

Handling the versioning related to the releases specifically.

For the Build Process sub-theme, we can further categorize into:

-

Code Compilation (Total: 455)

-

The process that involves taking source code and converting it into an executable binary, library, or another output type.

-

Artifact Assembly (Total: 50)

-

Steps involved in assembling, packaging, or preparing build artifacts for deployment or distribution.

-

Build Assistance (Total: 227)

-

This includes setting arguments for the build, handling build numbers, or other secondary operations that support the main build process.

-

Build Environment Preparation (Total: 730)

-

These steps encompass preparing the build environment, setting up necessary dependencies or tools, configuring platforms, etc.

These categorizations are estimated based on the names of the steps and the frequencies at which they occur. They indicate the granularity of actions taken within Version and Release Information management and the Build Process, which would typically be configured within a GitHub Actions workflow for CI/CD pipelines. ================================================================================================================================================================================================================================================================================================================================+ --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

Getting started with the workflow

In order to have a good understanding of how CI/CD works, it's important to have a good idea of how everything fits in together. Here's the overall process, at a very high level view, from working on a feature to getting it out into production.

| Development Stage | Sub-Stage | Description |

|---|---|---|

| Planning & Design | Define Work Item | Identify and document features, tasks, or bugs to be addressed. Example |

| Prioritization & Scheduling | Decide on the priority of the work item and when it will be addressed. Example | |

| Development | Code Implementation | Writing the actual code and implementing features or bug fixes. Example |

| Local Testing & Verification | Run unit tests and perform manual testing to verify code behavior on a local dev environment. Example | |

| Code Submission | Create Pull Request (PR) | Submit the code for review. Example |

| PR Awaiting Review | Time period the code waits to be reviewed. Example | |

| Code Review | Peer Code Review | Team members review the code for quality, functionality, and style. Example |

| Feedback Incorporation | Apply changes based on code review feedback. Example | |

| Build & Integration (CI) | Build Initialization | Setup for build environment and dependencies. Example |

| Core Build Process | Compilation, linking, and creation of executable files. Example | |

| Security & Compliance Scan | Scan for security vulnerabilities and compliance issues. Example | |

| Automated Testing (CI) | Test Initialization | Setup for testing in an isolated environment. Example |

| Execute Automated Tests | Run automated test suites. Example | |

| Deployment (CD) | Canary Deployment | Deploy to a subset for monitoring and testing. Example |

| (Coming up next) | Monitor & Validate | Monitor and validate new features. Example |

| Full Production Deployment | Roll out to the entire production environment. Example |

Developers create short-lived branches for their work:

-

This isolates their changes from the main codebase ("trunk") and allows collaboration with other developers.Short lived in this context refers to just the minimal amount of time needed to work on that particular task and no more.This means that the tasks have to be broken down sufficiently as well as broken down in such a way where the tasks are easy to complete and don't interfere necessarily with other commits. They're also testable as well, capable of showing that they can have the capacity to be integrated. You can look at the appendix For more information on how to break down tasks into such a way that makes them.To show that they've been integrated successfully.

-

Branches can be created locally or through GitHub's UI.

Pull requests (PRs) are used to merge code into the trunk:

-

Developers create PRs when they're ready to integrate their changes.They create the PR from their branch.

-

This triggers a pipeline that builds, tests, and runs the code to ensure quality.The pipeline and its trigger must be set up by developers beforehand. By default it does not do this.

-

PRs require review and approval before being merged.Normally to approvers are required, but it depends on your team.At least one approver should approve.

Merging PRs updates the trunk:

-

This makes the changes available to all developers.

-

Developers need to pull the latest changes into their branches to stay up-to-date.This is not a requirement as when you create the PR it will automatically merge their branch into the trunk when building.However, it's recommended that developers pull their latest changes to keep up to date because the merge might be different than what they tested locally, potentially introducing the possibility of bugs.

Branch Management:

-

Short-lived branches are typically deleted after merging.

-

Long-lived branches are useful for experiments and large refactorings, such as migrating frameworks (e.g., Spring to Hibernate). However, these situations are infrequent. Most development (90-95%) utilizes short-lived branches to ensure continuous integration, cohesion, and comprehensive testing.

-

Descriptive branch names, including developer IDs (e.g., "username/feature-name"), improve organization and maintenance. This convention aids in automatic categorization within popular CI/CD platforms like Azure DevOps, grouping branches into logical directories.

Build Server:

-

The build server clones the developer's branch associated with the PR and builds the code.In this case the build server is provided to you by GitHub Actions, but you can also use a self hosted runner yourself.

-

This ensures that the changes are compatible with the existing codebase.It's important to write good tests as well as making sure that the bell script is up to date to make sure that the pipeline sufficiently instills confidence in your changes. The build pipeline is only as useful as the effort and criteria that you put into it. It is not magical.

What is a repository?

-

A repository is a way to store a collection of files that are used in the build process, and should include all files that allow the application to be built, including configuration files, database migrations, tests, etc. Developers work off of a branch, which is a snapshot of the repository. Each repository is usually a deployable unit, and normally should not include other projects unless they are part of the same application or must be deployed together. It is managed with a VCS (e.g., Git.)

-

However, it should not include environment-specific files, secrets (such as passwords), API keys, or files specific to a single developer's environment because these are typically injected at runtime, and should not be part of the application, as they could be erroneously leaked to production.Normally injected at runtime. Do not inject it in your continuous integration and development server.

-

Also, if a single developer's settings are in the repository, it's not much use for the other developers and could cause confusion.

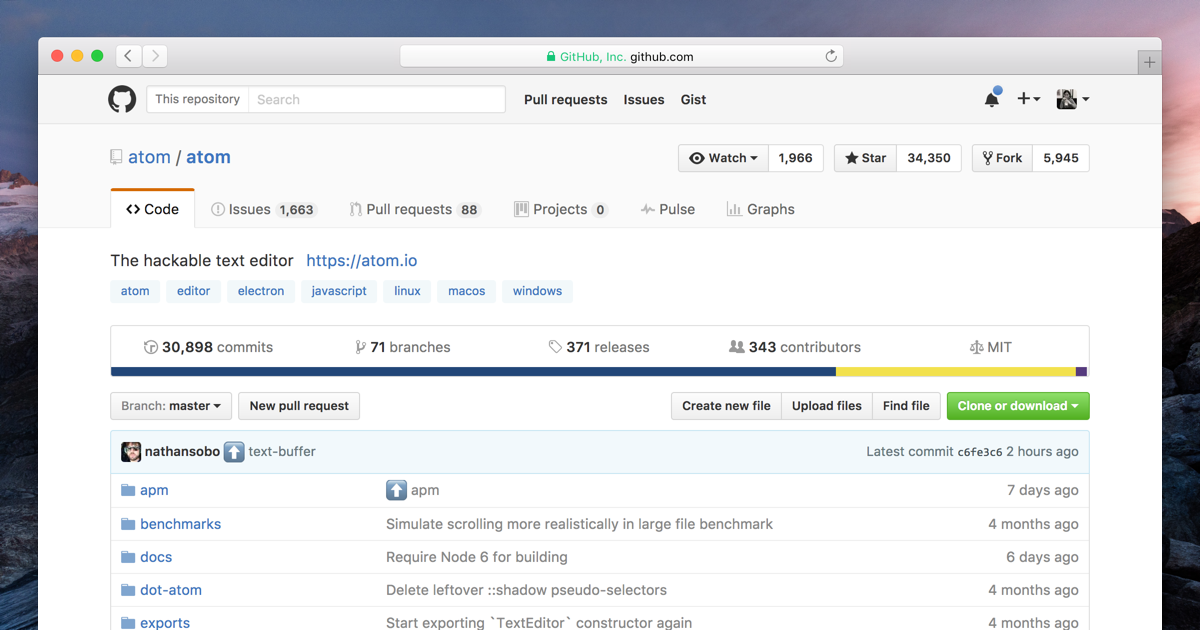

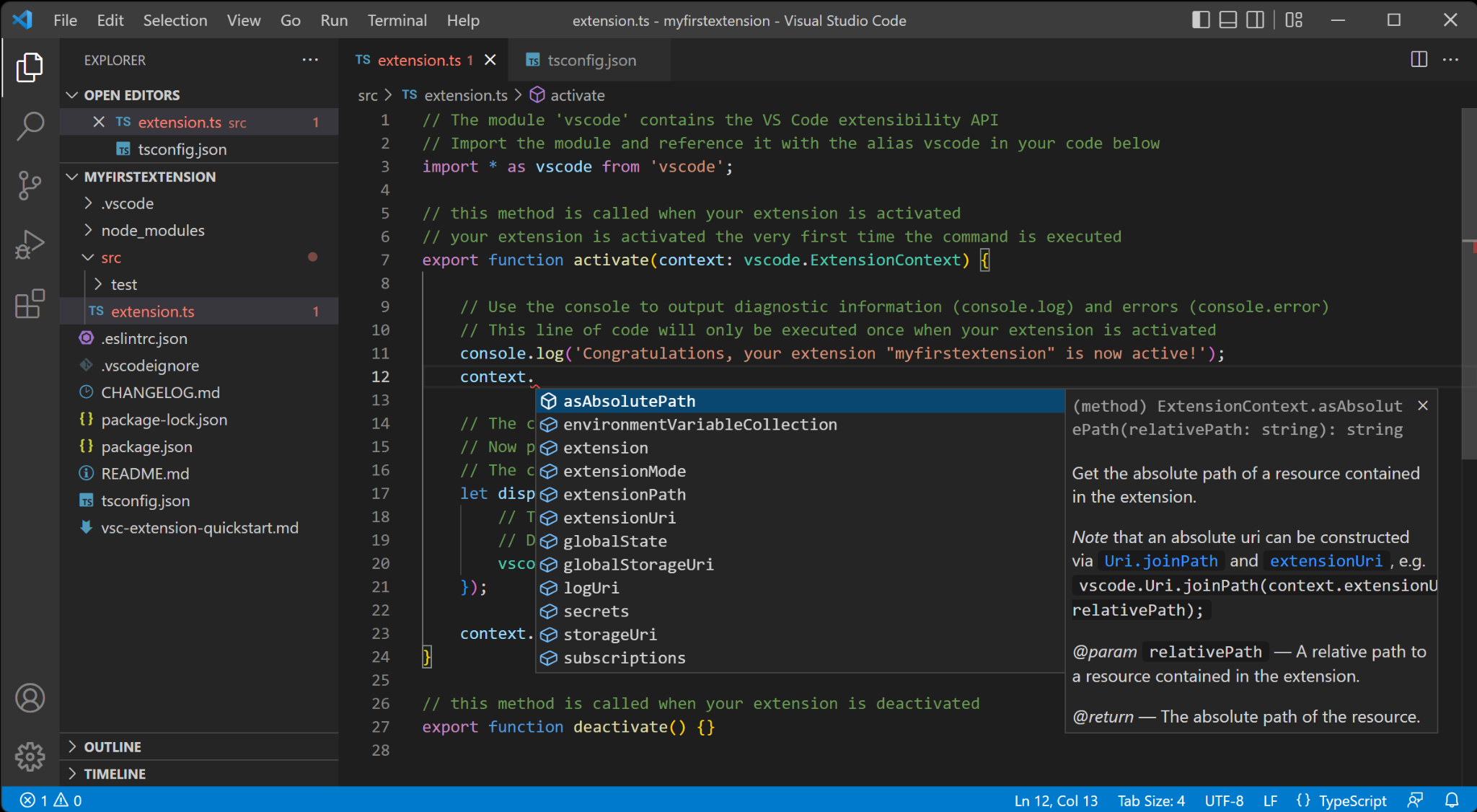

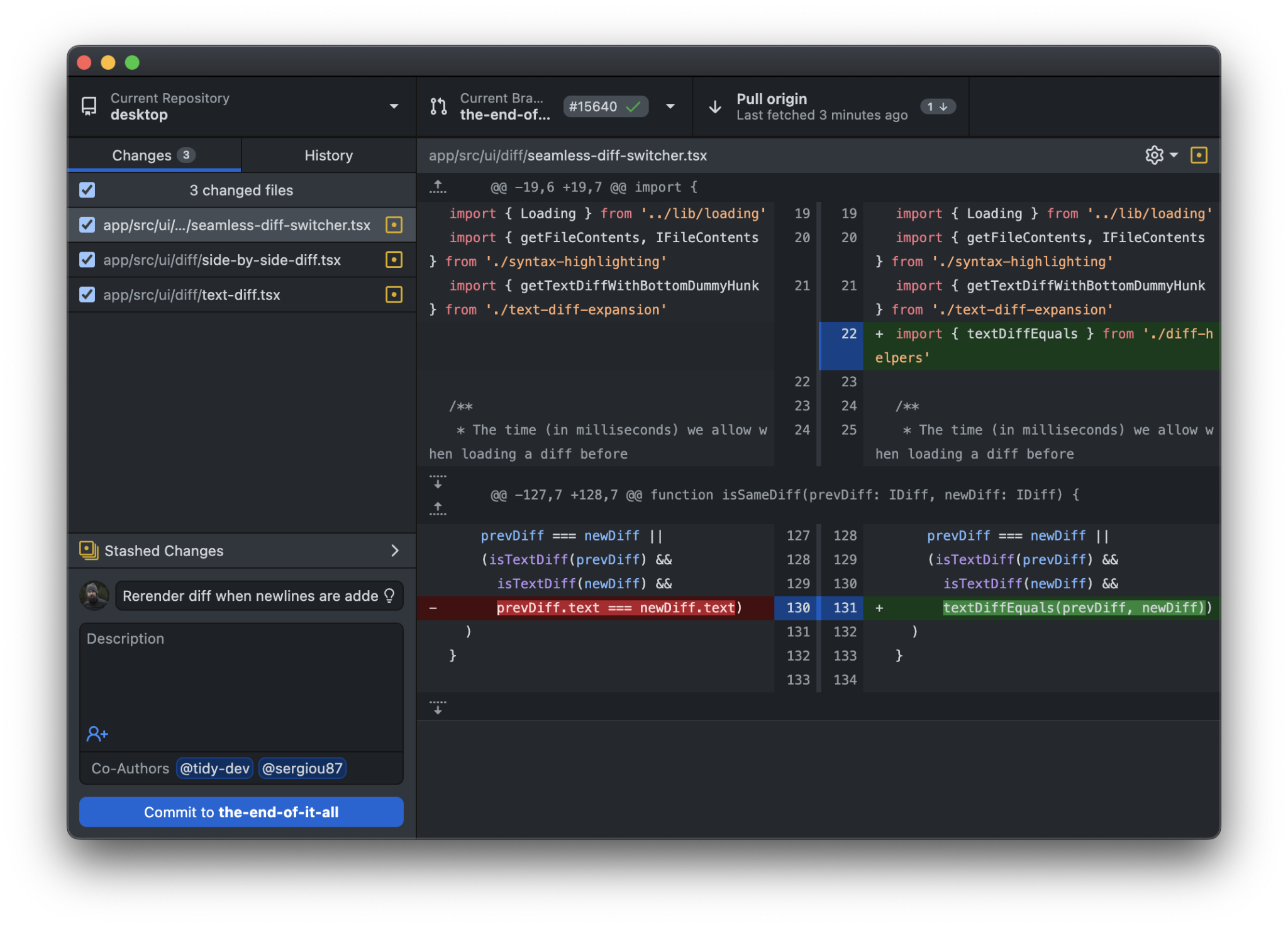

Typical development scenario using VCS

A developer works on code using their IDE, on their development branch, from their repository as shown in this screenshot. This is the code repository as discussed earlier. VS Code is a free IDE that is open source.

TypeScript Programming with Visual Studio Code

While git can be easily used on the command line, sometimes developers prefer a GUI-based approach. This can be especially helpful for those who are new to git. Some applications can help with this, such as "GitHub Desktop".

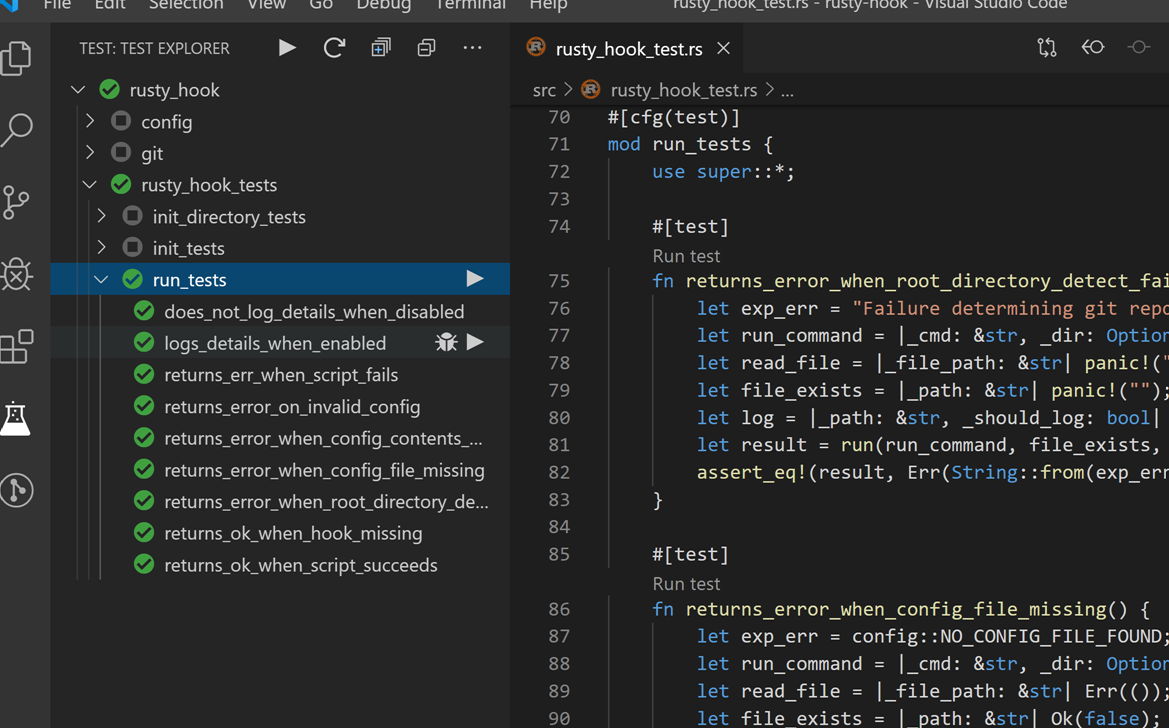

While the developer is coding, they normally run unit tests or integration tests in their local environment, which is part of having a fast feedback loop. These tests exist as part of the repository. This provides them confidence for their changes. If they find a broken or failing test, then they would fix it on their development workstation before proceeding, because otherwise the pipeline would fail and they wouldn't be able to merge their code. Developers are responsible for writing and maintaining tests.

Rust Test Explorer - Visual Studio Marketplace

For instance, consider a repository opened in GitHub Desktop. It's a tool to visualize and interact with a Git repository, showing individual changes and their details. While this isn't a tutorial on Git, it's worth noting that any VCS with the capability to track and manage changes suffices; it doesn't have to be Git. The choice depends on your team's preferences and needs. Here is a screenshot of GitHub Desktop:

https://github.com/desktop/desktop Some other GitHub Desktop-like tools are SourceTree, GitKraken, Tower, and TortoiseGit.

SourceTree – Free for Windows and Mac.

- Offers a visual representation of branches and commits.

- Integrated with Bitbucket and GitHub.

- Can be slow and occasionally buggy.

- Somewhat steeper learning curve for beginners.

GitKraken – Intuitive UI, great for beginners.

- Cross-platform (Windows, Mac, Linux).

- Supports GitFlow.

- Free version has limitations; Pro version is paid.

- Some users report performance issues.

Tower – Clean UI and efficient performance.

- Offers advanced features like single-line staging.

- Good integration with multiple services.

- No Linux version.

- More expensive compared to other tools.

TortoiseGit – Integrates directly into Windows Explorer.

- Free and open source.

- Mature and well-maintained.

- Windows only.

- UI might not be as modern or intuitive as other tools.

- Requires separate Git installation.

After developers are done, they then create a PR. This shows the developer's changes and allows other developers to comment on them (i.e., code review.)

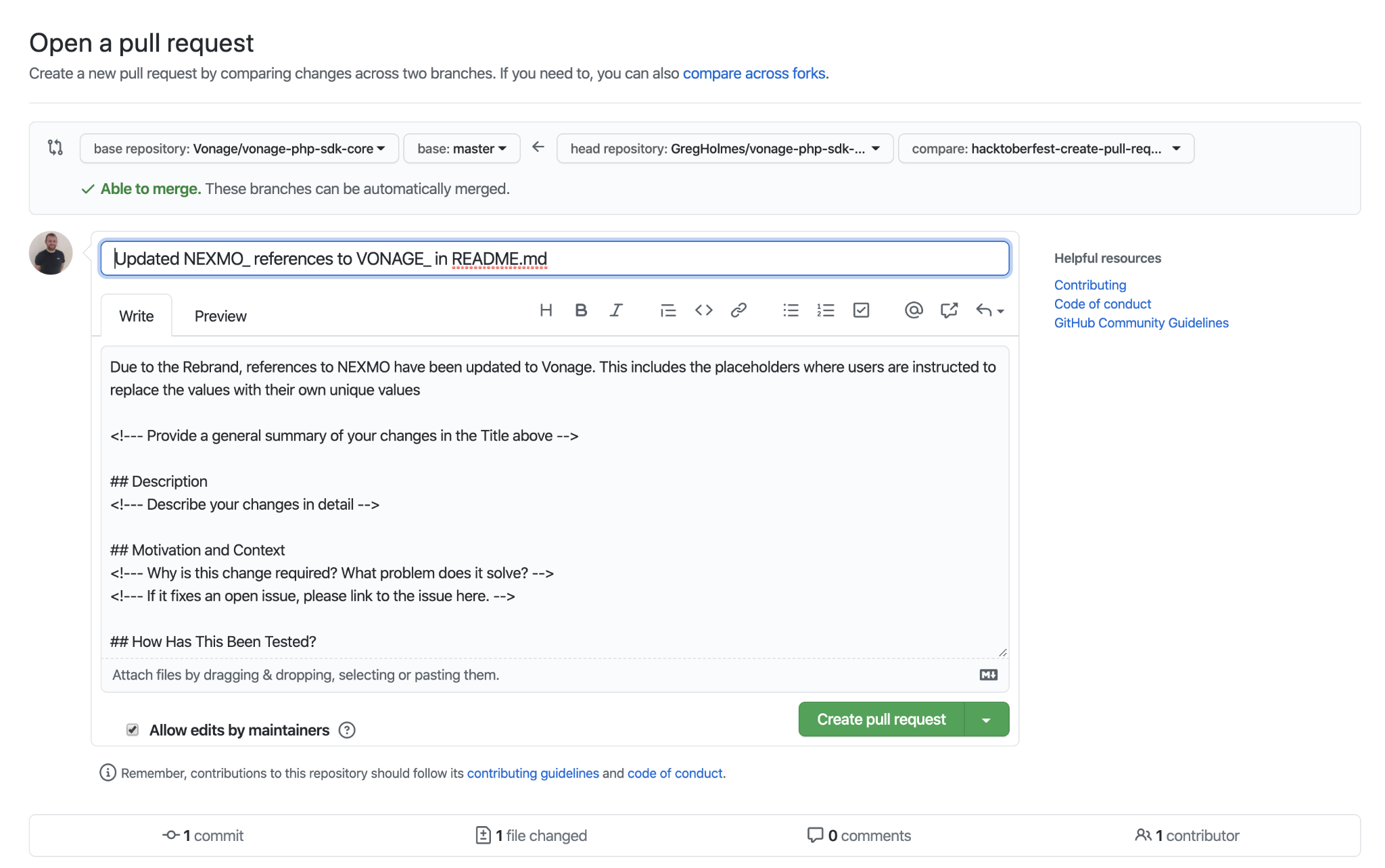

What is a pull request?

A pull request (or a change request) is a request from a developer to merge changes from their branch into the repository. For example, when a developer is ready to integrate their changes, they would create a pull request. The pull request allows others to comment on the developer's changes and also triggers the build pipeline to validate their changes. Since a developer needs to create a pull request before merging, this means that the confidence-instilling checks can run.

After the pull request is merged, then the changes become part of the "main" branch in the repository. This means that they can be deployed, or other developers can integrate on top of their work.

Here are some key characteristics of a good pull request:

-

Clear Title: The title should be concise and describe the changes at a high level. Someone should be able to get an idea of what the PR is about just by reading the title.

-

Descriptive Description: A PR description should provide context. It should answer:

-

- What changes are being made?

-

- Why are these changes necessary?

-

- How have these changes been tested?

-

- Are there any related issues or PRs?

-

Small and Focused: Ideally, a PR should be small and address a single issue or feature. This makes it easier to review and understand. Large PRs can be daunting for reviewers.

-

Includes Tests: If the project has a testing framework, the PR should include tests that cover the new functionality or bug fixes. This ensures that the changes work as expected and prevents regressions.

-

Follows Code Style and Conventions: The PR should adhere to the project's coding standards and conventions to maintain consistency.

-

Updated Documentation: If the changes introduce new features or modify existing ones, the PR should also update related documentation.

-

Comments on Complex or Ambiguous Code: If the changes involve complex logic or hacks to address specific edge cases, they should be accompanied by comments explaining the rationale.

-

Includes Relevant Assets: If the PR has UI changes, including screenshots, gifs, or videos can be very helpful for reviewers.

-

Has Been Self-reviewed: Before submitting, the author should review their own PR. This can catch many small issues like typos, console logs, or forgotten debug statements.

-

Passes Continuous Integration: If the project uses CI tools, the PR should pass all checks (like building without errors, passing tests, lint checks, etc.)

-

Addresses Feedback: After receiving feedback, the PR author should make the necessary changes and might need to clarify if something isn't clear. A good PR evolves through collaboration.

-

Links to Issue: If the PR addresses an open issue, it should link to or mention that issue. This provides context and allows for tracking the resolution of bugs or features.

How To Create a Pull Request With GitHub Desktop (vonage.com)

Testing and automated testing in the pipeline

Software testing is crucial for ensuring that applications meet quality standards, function correctly, and deliver a positive user experience. It's a vital part of CI/CD because it helps developers catch bugs early and build confidence in their code changes. When the build pipeline runs, it runs your automated tests. If the automated tests fail, then therefore the build pipeline will fail as well. This is important, because this tells the developer that their changes cannot be merged (i.e., integrated and available to other developers) because something is wrong.

There are two main categories of testing:

-

Automated Testing ("Checking"): These tests are predefined (automated tests), can be run by computers, and are coded by developers. They are essential for verifying functionality and performance but very tedious, time-consuming, expensive and boring for a human to do manually.

-

Manual Testing: This involves human judgment and focuses on aspects like usability, aesthetics, and user experience that are difficult to automate.

Checking is like a demonstration. Imagine a chemistry teacher in front of an eager class. The teacher says, "Watch this!" and mixes two chemicals together. Then, everything changes color and makes a pop. The teacher already knew what was going to happen, and in this case demonstrated that fact in front of a class: the chemicals were going to change color, and make a pop. Or, say someone enters in 1+1 in the calculator. There is an expectation that it will always be "2".

Now, contrast this with manual testing: tacit knowledge. You have to write a set of rules to determine if a website is designed well. It's not very easy to write a set of rules, or instructions for someone to determine that. But, it is easy to figure out using our human brains: we try to use the website, and if we are having issues, then we know that it's not usable. But we can't create a document that describes every possible facet in great detail for every possible situation of what usable means. It's highly subjective and complex. It's important to have both types of testing.

There are many types of automated tests, including:

-

Unit Testing: Verifies individual components of code.

-

Integration Testing: Checks how different units of code work together.

-

Functional Testing: Tests the application against business requirements.

-

System Testing: Examines the fully integrated software product.

-

End-to-End Testing: Simulates real-user scenarios.

-

Acceptance Testing: Determines if the software is ready for release.

-

Performance Testing: Assesses speed, response time, and stability.

-

Stress Testing: Evaluates how the system performs under extreme conditions.

-

Security Testing: Identifies vulnerabilities.

-

Usability Testing: Evaluates user-friendliness, which may or may not include a human reviewer.

-

Regression Testing: Ensures new changes don't break existing functionality.

-

Smoke Testing: Identifies major failures early on.

-

Exploratory Testing: Involves less structured exploration of the software.

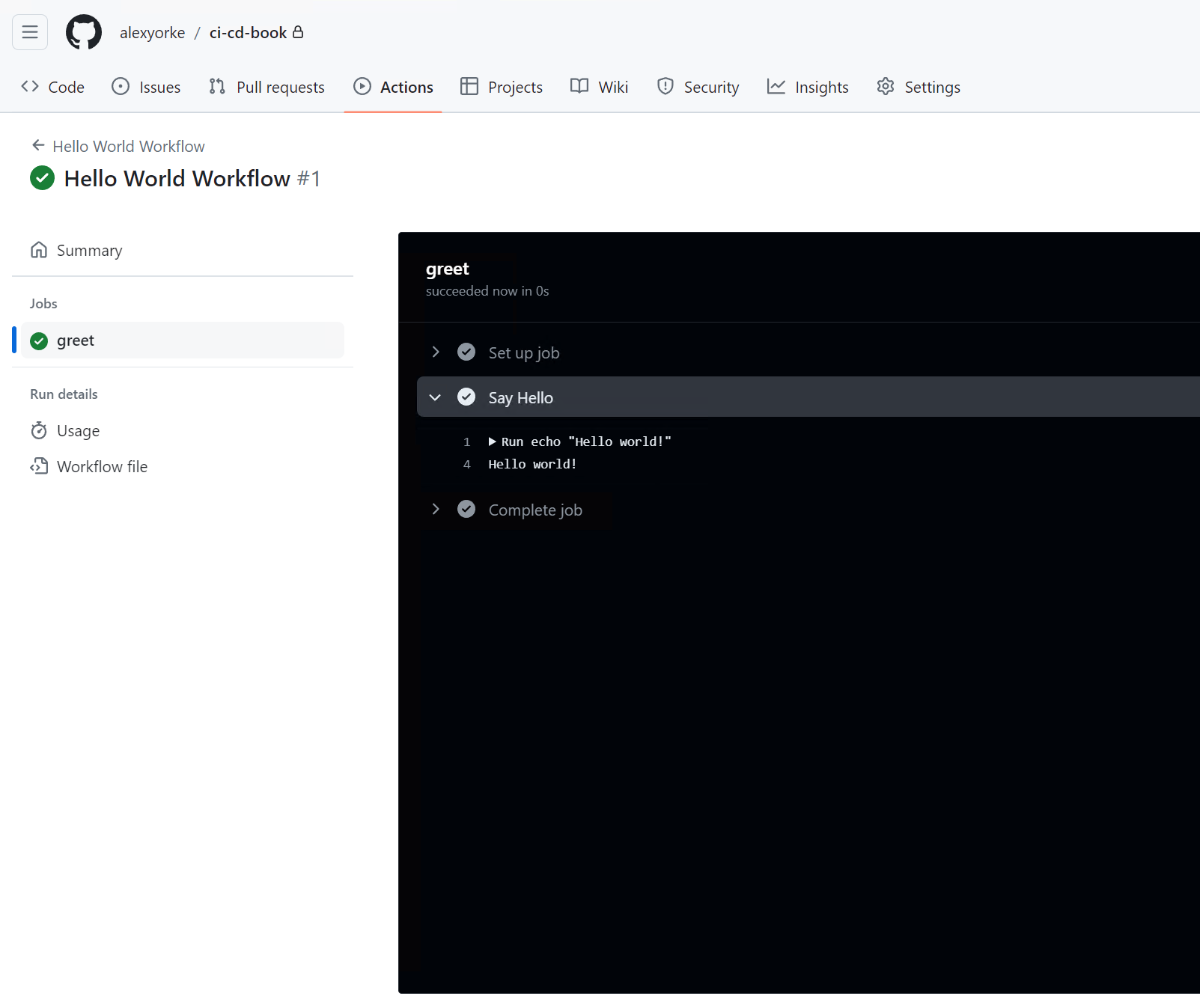

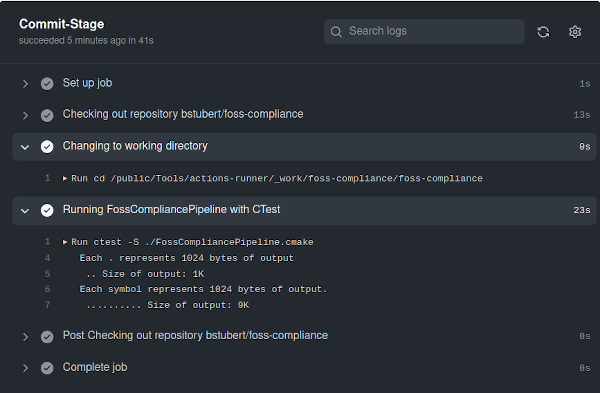

Getting started with GitHub Actions

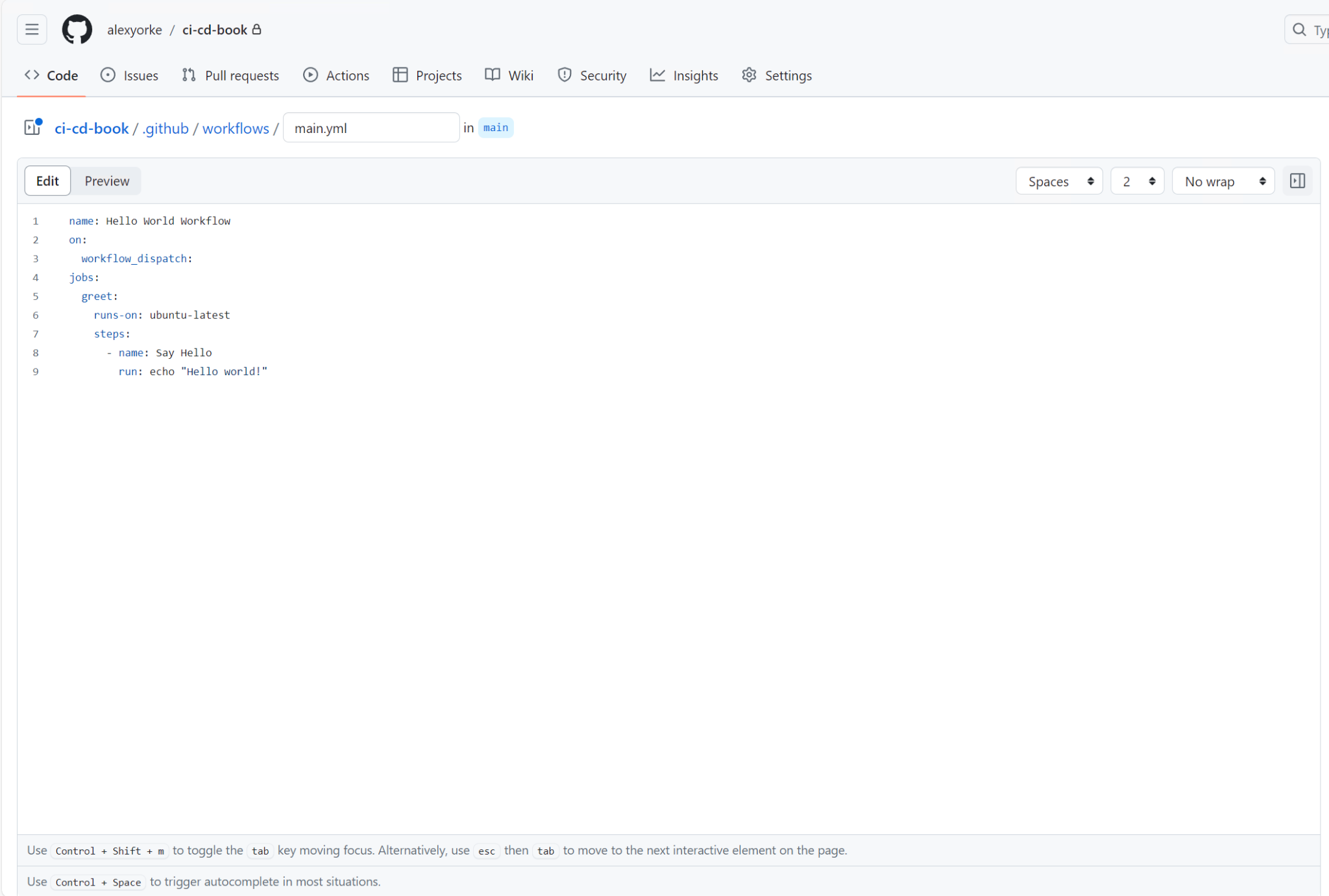

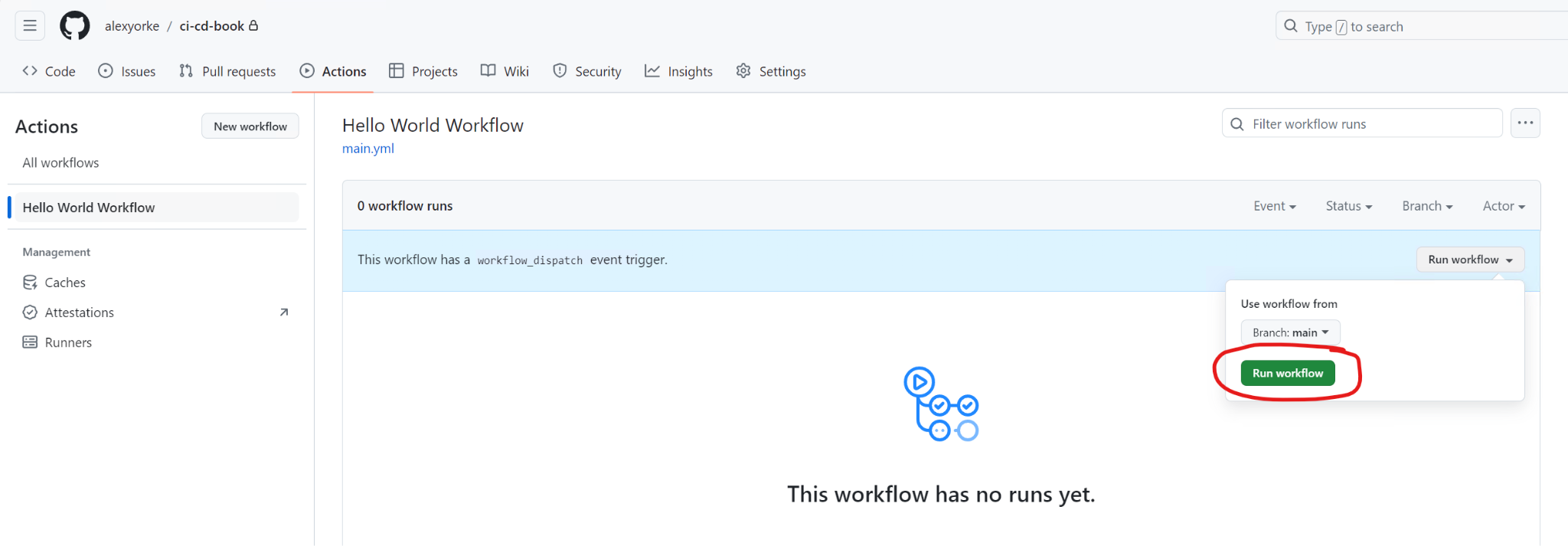

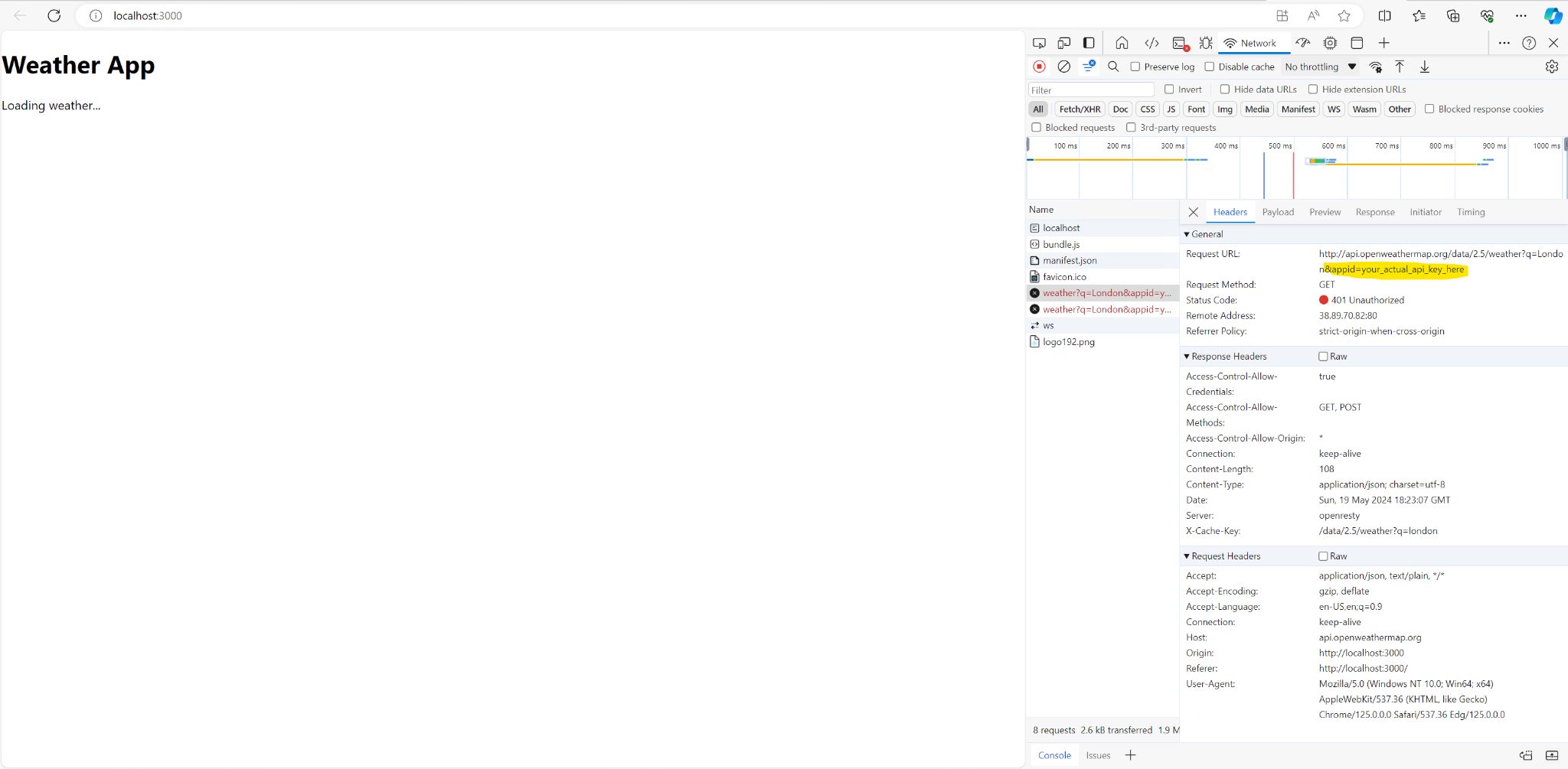

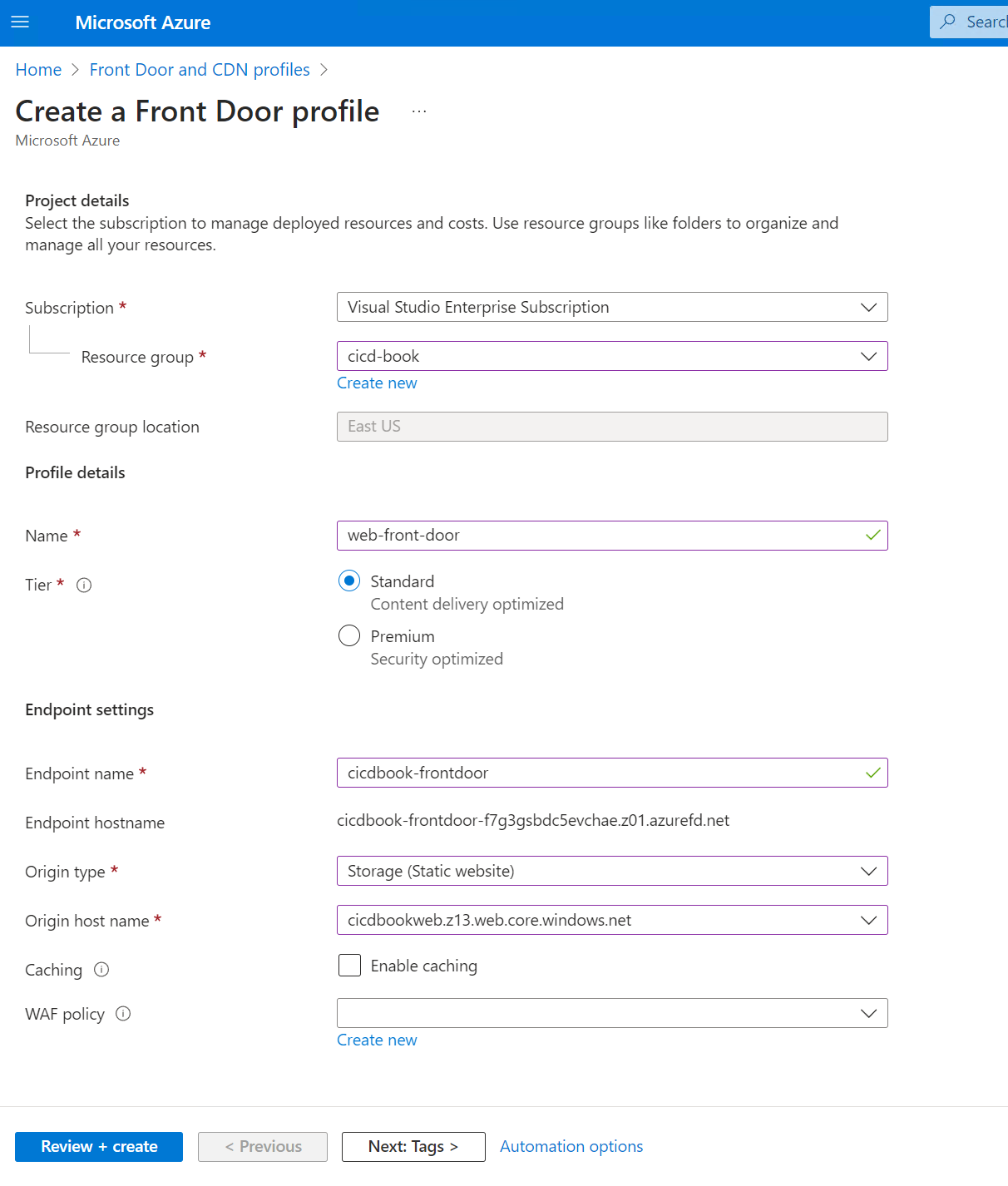

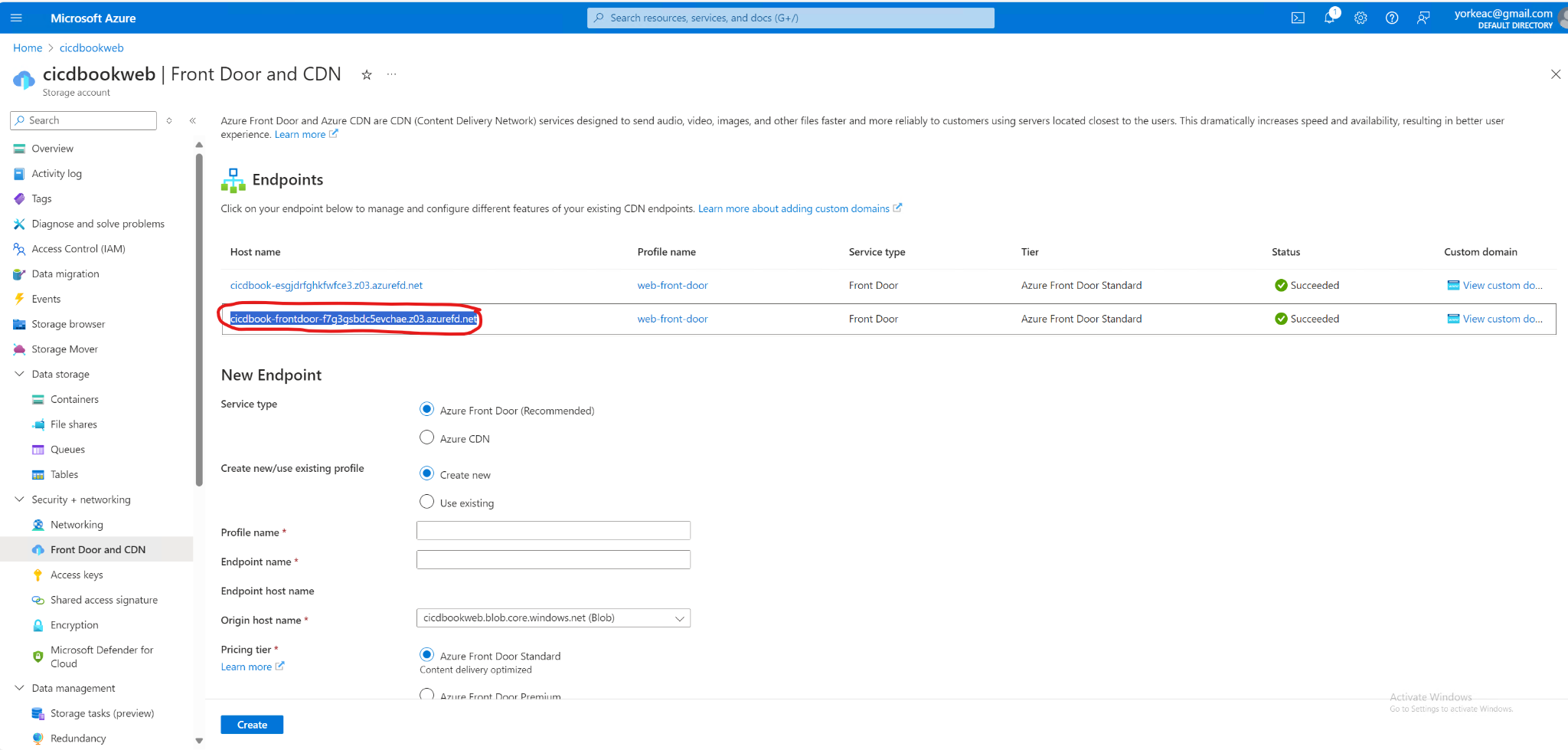

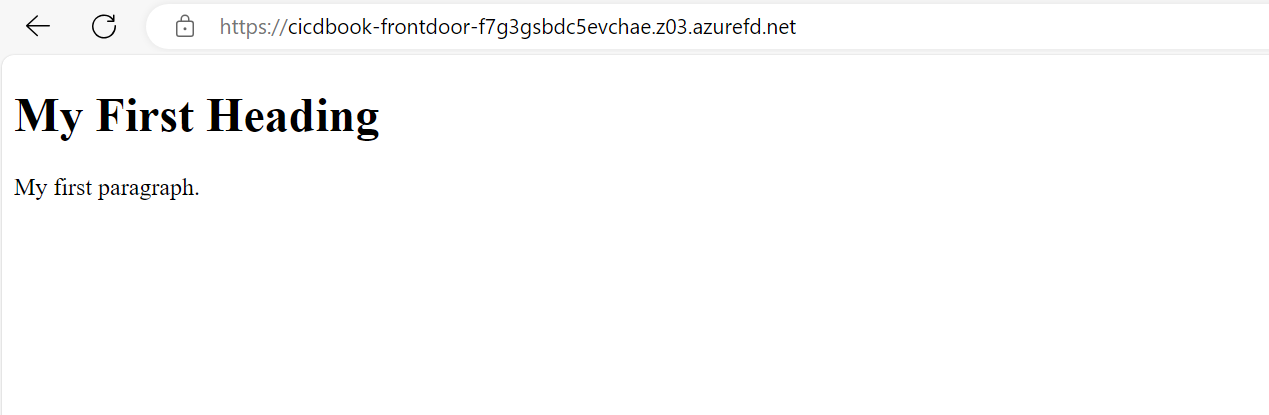

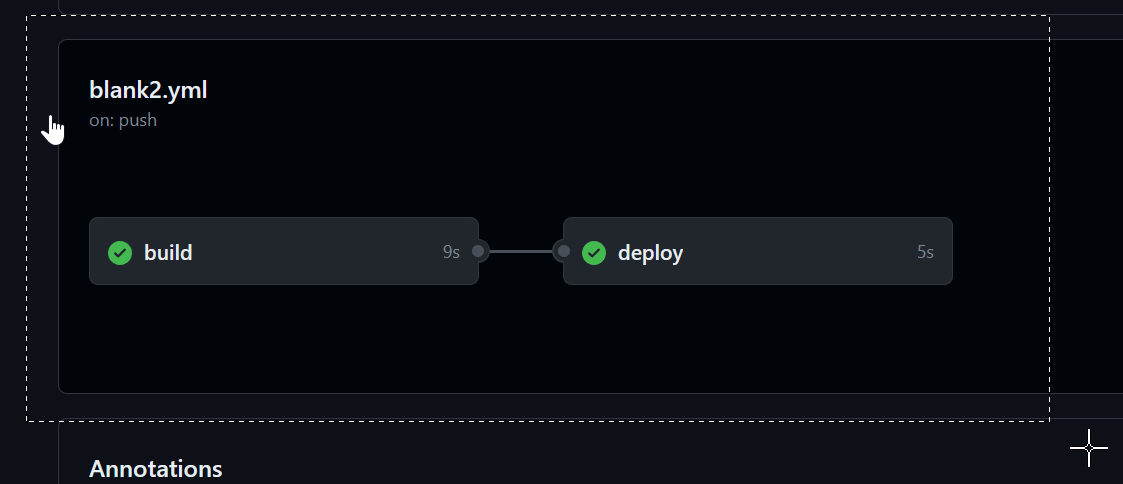

Throughout this guide, we will explore the key features of GitHub Actions and how to effectively structure workflow files in YAML to maximize the benefits of CI/CD. We'll start by creating a somewhat simple weather application, but make it more complex over time. This is designed to simulate a real world application.

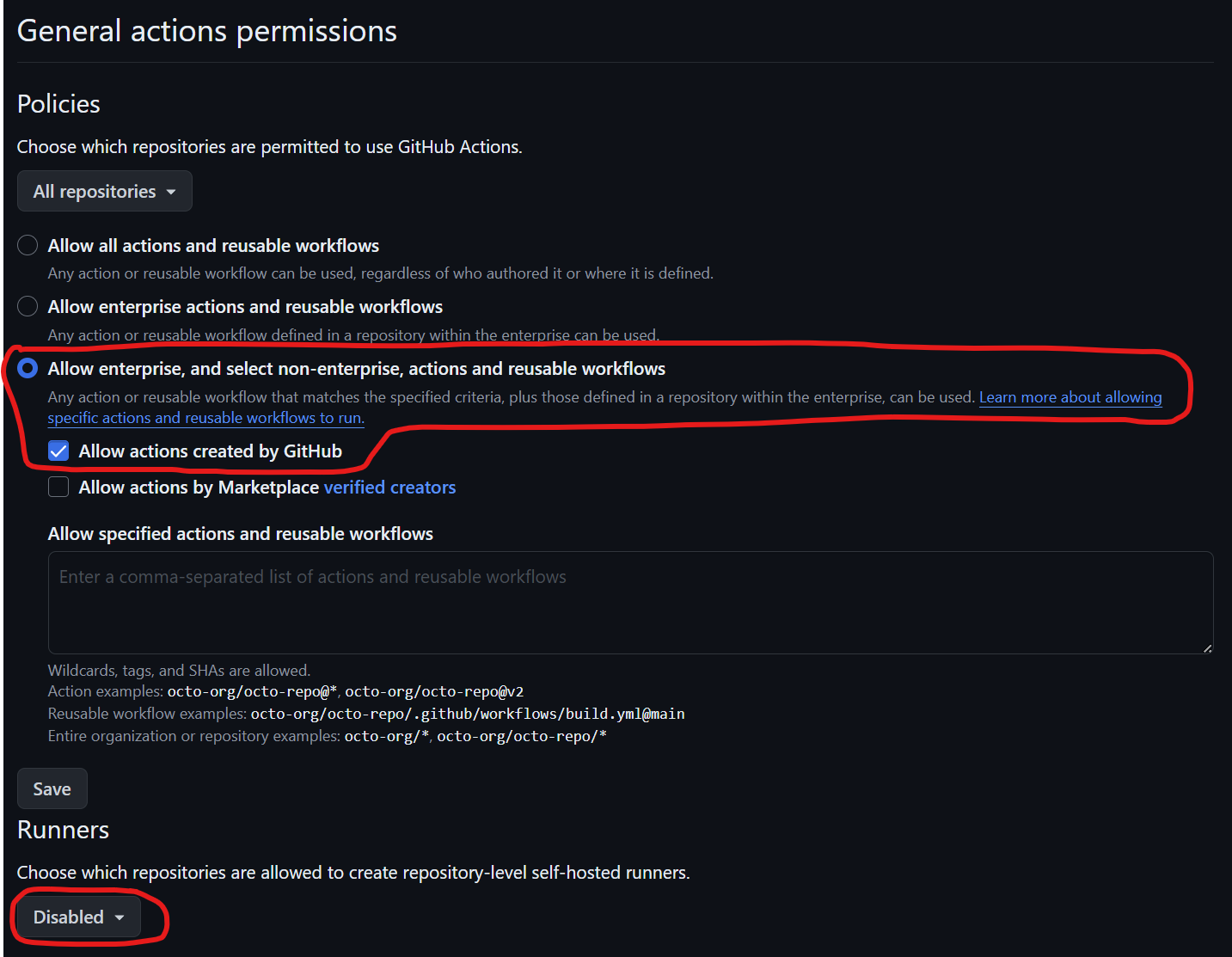

GitHub is a company that has a product called "Actions" (sometimes referred to as "GitHub Actions") that is a set of build servers and software that runs GitHub Actions workflows. These YAML workflows are created by the developer and normally build, test, and lint the code using the GitHub Actions YAML syntax and run on the GitHub Actions build servers.

GitHub is a company, thus, it is not possible to install GitHub--it is not an application. Git is the version control system that can be installed.

If you need more information on the specific intricacies of GitHub Actions, please see the GitHub Actions documentation. GitHub Actions documentation - GitHub Docs

Let's build a pipeline that can do the following:

-

Checkout the code (i.e., clone it onto the runner.)

-

Build the code.

-

Run automated tests, and linting.

-

Publish artifacts to an artifact server, in this case, to GitHub, along with a versioning strategy that will help identify which artifacts you are publishing.

-

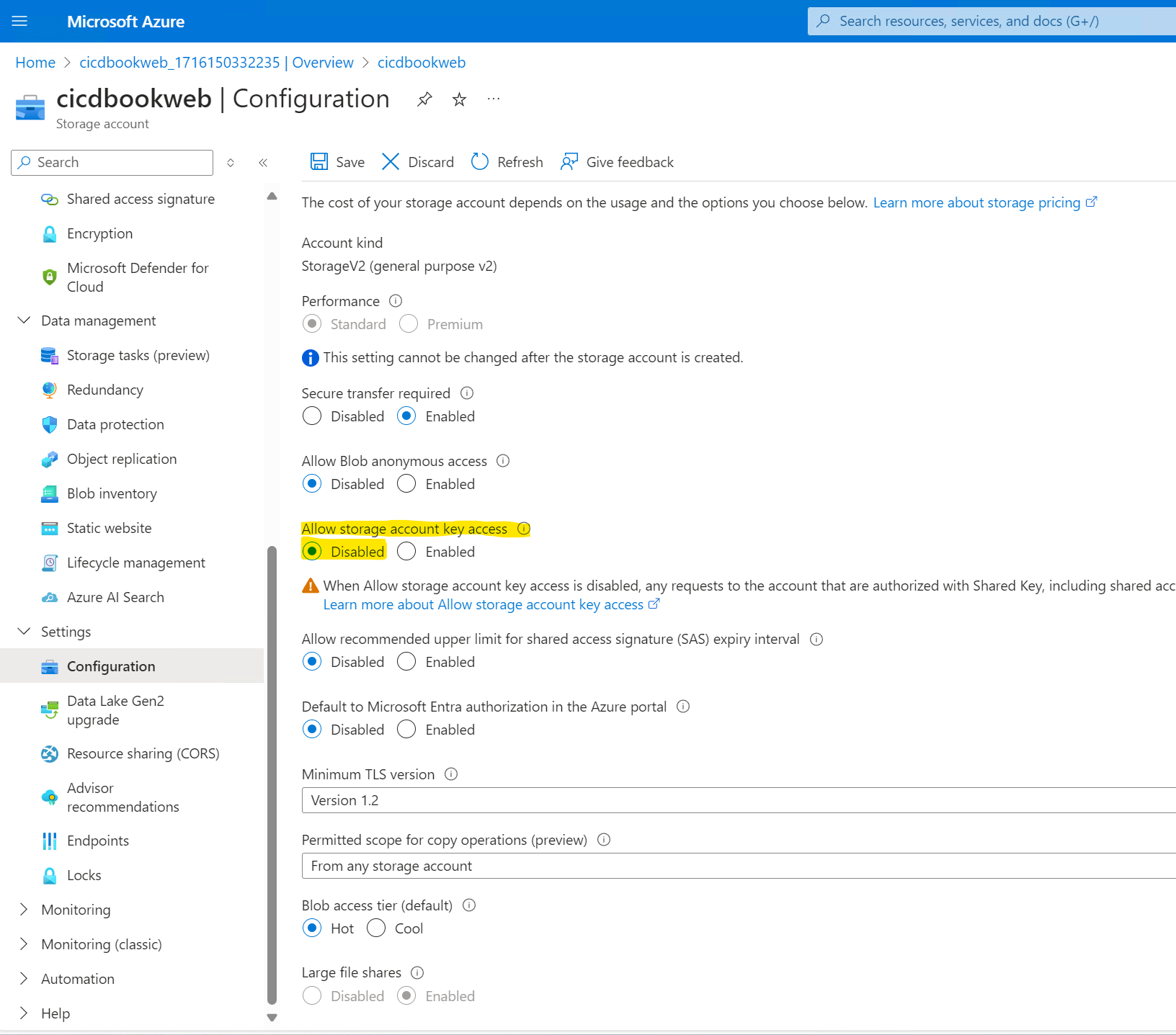

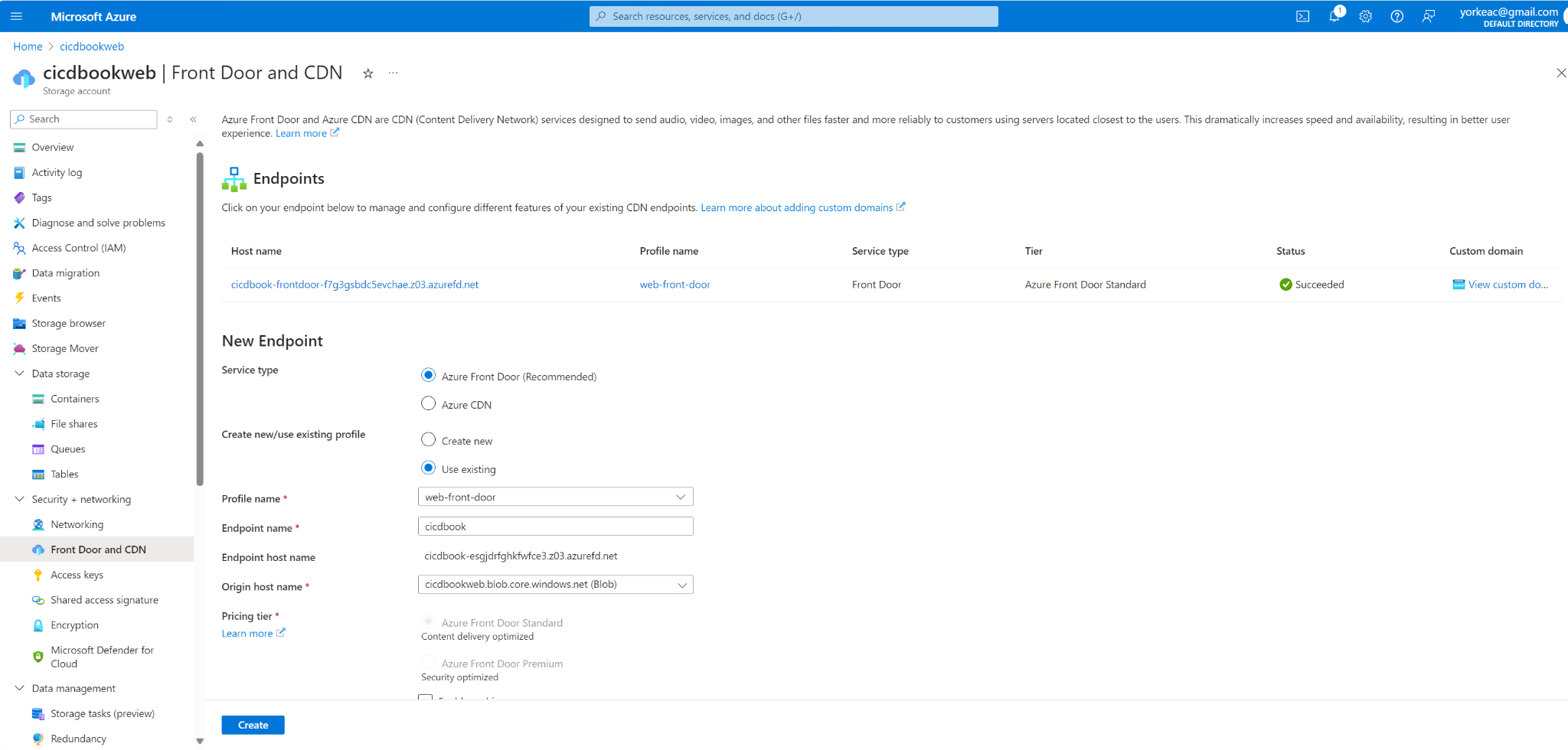

Deployed the website to Azure.

Workflow Structure

Here's an overview of how GitHub workflows are structured:

-

1. Events: Workflows begin with events, such as pushes or pull requests, which trigger the workflow.

-

2. Jobs: Workflows may contain multiple jobs, but we will focus on a single job for simplicity. Each job specifies an environment to run in, indicated by a string that corresponds to an operating system and a pre-configured image. This image includes pre-installed software, allowing us to get started quickly and reduce setup times and costs.

-

3. Steps: Each job is composed of multiple steps. These steps can use either the

usesorruncommand: -

- Uses: This command utilizes actions provided by GitHub Actions, sourced from the GitHub Marketplace. These actions are pre-configured scripts that handle tasks like software installation, version management, or building.

-

- Run: This command executes shell commands specific to the operating system defined in the job's environment, using bash scripting for Linux, for example.

-

4. Artifacts: Typically, workflows end with steps for uploading artifacts, though the initial steps may also involve downloading or preparing artifacts.

Below is an overview of a typical workflow structure:

Workflow │ ├── Events (e.g., push, pull_request) │ ├── Jobs │ ├── Runs-on (Runner) │ ├── Needs (Dependencies on other jobs) │ ├── Steps │ │ ├── Uses (Actions) │ │ │ ├── Inputs │ │ │ ├── Outputs │ │ │ └── Environment (e.g., secrets, env variables) │ │ └── Run (Shell commands) │ ├── Environment Variables │ ├── Secrets │ ├── Services (Service Containers) │ └── Artifacts │ ├── Upload Artifact │ └── Download Artifact │ └── Workflow Commands (e.g., set-output, set-env)

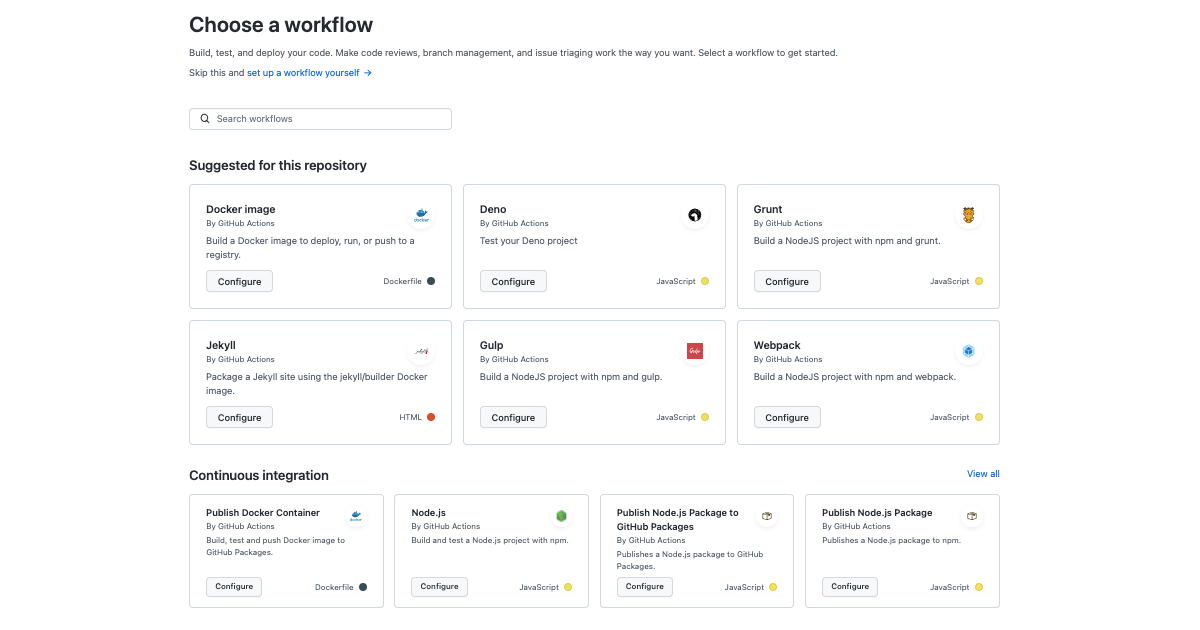

If you want to get started right away, GitHub Actions has several templates for many different project types. Use a template to get started quickly.

Setting up error notifications

When your workflow fails, it means that continuous integration is no longer possible. Implement error notifications to alert your team when the build pipeline fails—especially for production workflows. Consider these notification methods:

- Email Notifications: Configure GitHub Actions to send emails upon failure.

- Messaging Platform Integrations: Integrate with platforms like Microsoft Teams, Slack, or Discord to receive instant alerts (including texts or phone calls).

Ensure your GitHub email settings are correctly configured to receive these notifications. GitHub Actions is a CI/CD platform that automates software development tasks within GitHub repositories. It uses "workflow files," which are YAML-based instructions that define the steps of a CI/CD pipeline, similar to a project manager for your build scripts.

These workflows are triggered by specific events in your repository, like pushing code or creating a pull request. When triggered, they run on virtual build servers provided by GitHub, executing tasks such as building, testing, and deploying your application. These servers are ephemeral -- they're created for each workflow run and deleted afterward, ensuring a clean and consistent environment.

Workflows are organized into "jobs," each containing multiple "steps." Each step represents a discrete action, like running a script or using a pre-built action from the GitHub Marketplace.

Benefits of this structured approach:

-

Clarity and Organization: Named steps improve readability and make it easier to track progress, debug issues, and set up notifications.

-

Security and Isolation: Steps run in isolated environments, protecting sensitive information like secrets and environment variables.

-

Efficiency and Automation: GitHub Actions provides features for parallelization, triggering, resource management, and secret management, simplifying complex tasks.

-

Standardization and Collaboration: The workflow syntax promotes consistency across projects and teams, facilitating collaboration and knowledge sharing.

5 Things to Know About Pipe Scaffolding (supremepipe.com)

| CI server | macOS/Linux | Windows |

|---|---|---|

| name: CI | #!/bin/bash | @echo off |

| on: | echo "Starting CI process" | echo Starting CI process |

| push: | # Assuming Git and Node.js are already installed | REM Assuming Git and Node.js are already installed |

| jobs: | git clone <repository_url> | git clone <repository_url> |

| setup_and_test: | cd <repository_directory> | cd <repository_directory> |

| runs-on: ubuntu-latest | # Note: this depends on the NPM version installed on your computer | npm install |

| steps: | npm install | npm test |

- name: Checkout code | npm test | uses: actions/checkout@v2 | |

- name: Install dependencies | | run: npm install # we will get into later as to why we shouldn't be running npm install, instead, npm ci | |

- name: Run tests | | run: npm test | |

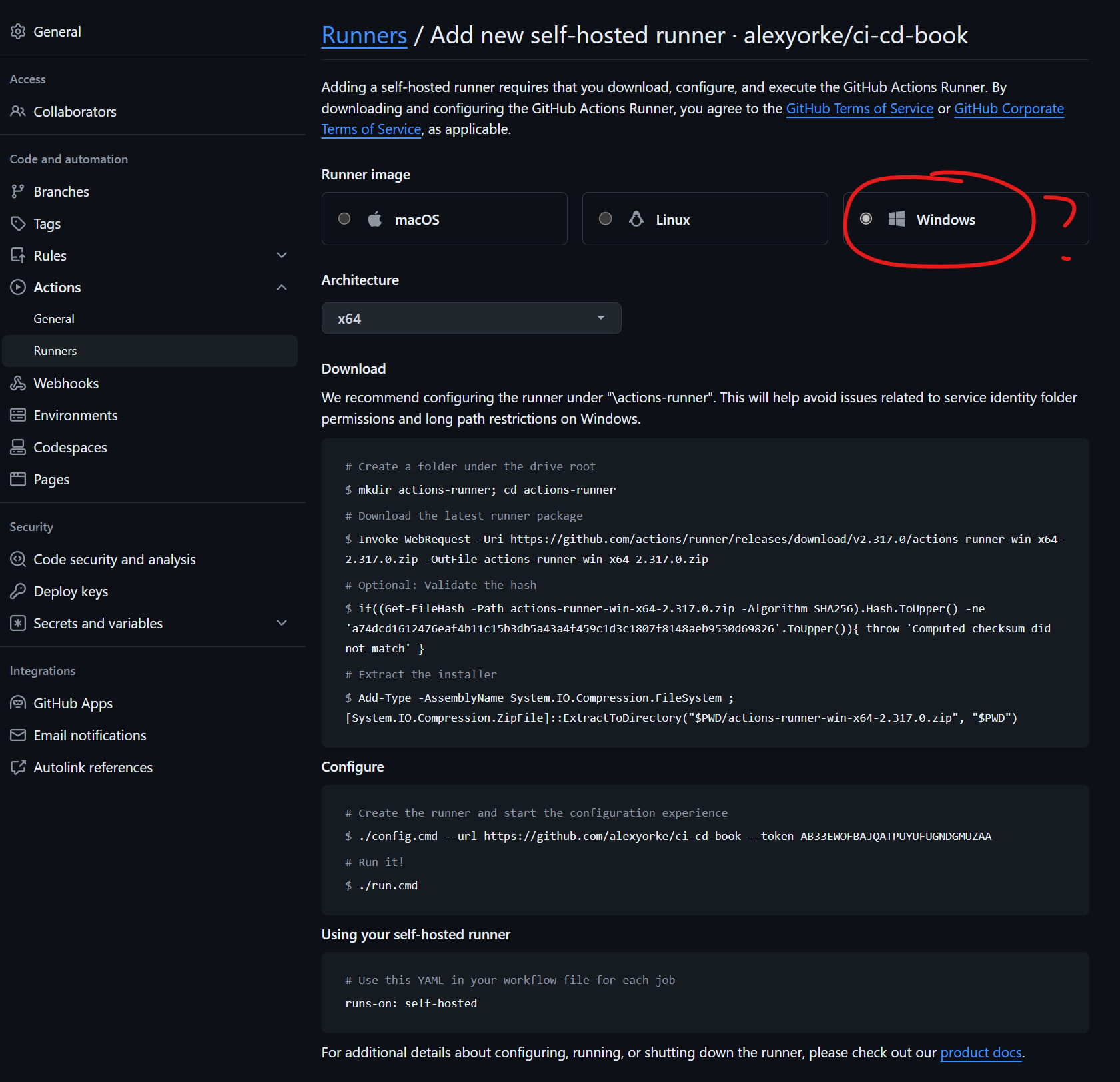

In this example, we demonstrate how you can execute commands on your local computer to simulate what a build server does. You can effectively use your own laptop as a server, albeit with caveats mentioned earlier. As an exercise, consider installing the GitHub Actions agent on your computer. Then, set up a self-hosted runner and execute the build script on it. This process will allow you to recreate or emulate the actions performed by a build server, right from your local environment. See the appendix for more info.

Aside

The script echo hello world is a Bash script. Note that while Bash is commonly used, some scripts might be written for sh, which has slight syntax differences. For Windows runners, remember that these execute PowerShell scripts—not Bash scripts. This guide does not cover PowerShell in detail, but if you are new to Bash, consider reading a beginner’s guide. Given Bash’s long-standing usage, it’s likely to remain relevant for some time.

The feedback loop for workflow changes can be slow—you typically need to edit, commit, and run the workflow on GitHub Actions to see the results. To streamline this process, consider these strategies:

-

Simplify Workflow Steps: Ensure that workflow steps are simple enough to run locally. This improves speed and manageability. Use provider-agnostic scripts (e.g., PowerShell or Bash).

-

Use Docker Containers: Create and use a Docker container that closely mirrors the GitHub Actions environment. This lets you test workflows locally in a similar setting.

-

Utilize the

actLibrary: Theactlibrary lets you run GitHub Actions locally. While it may not perfectly replicate the GitHub Actions environment, it works well for simpler scripts. See the appendix for more details.

Aside end

Workflow files must be stored in the .github/workflows directory of your repository. This YAML file dictates the sequence of operations executed by GitHub Actions during the CI/CD process.

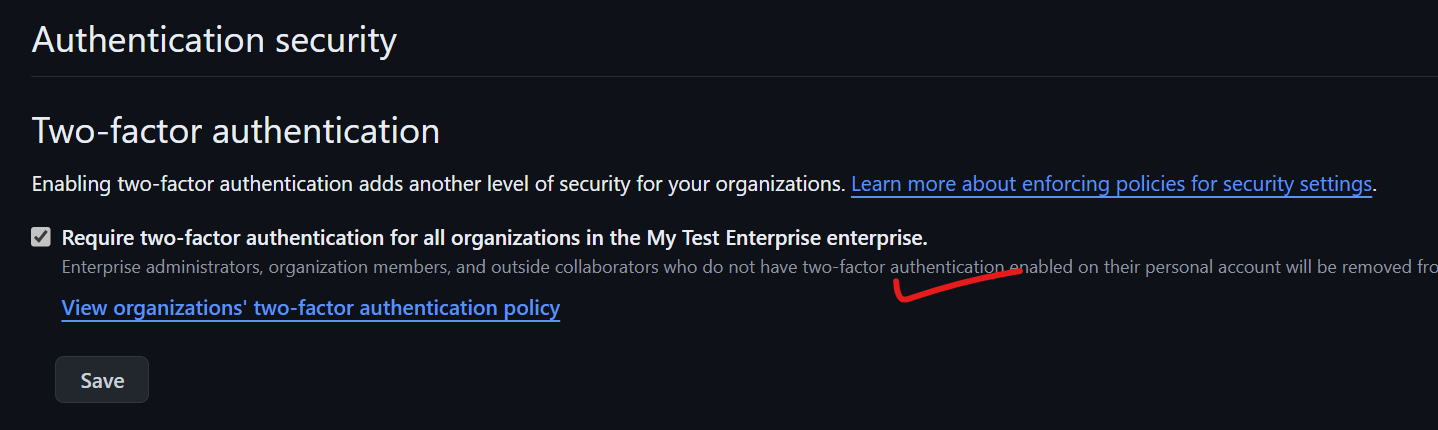

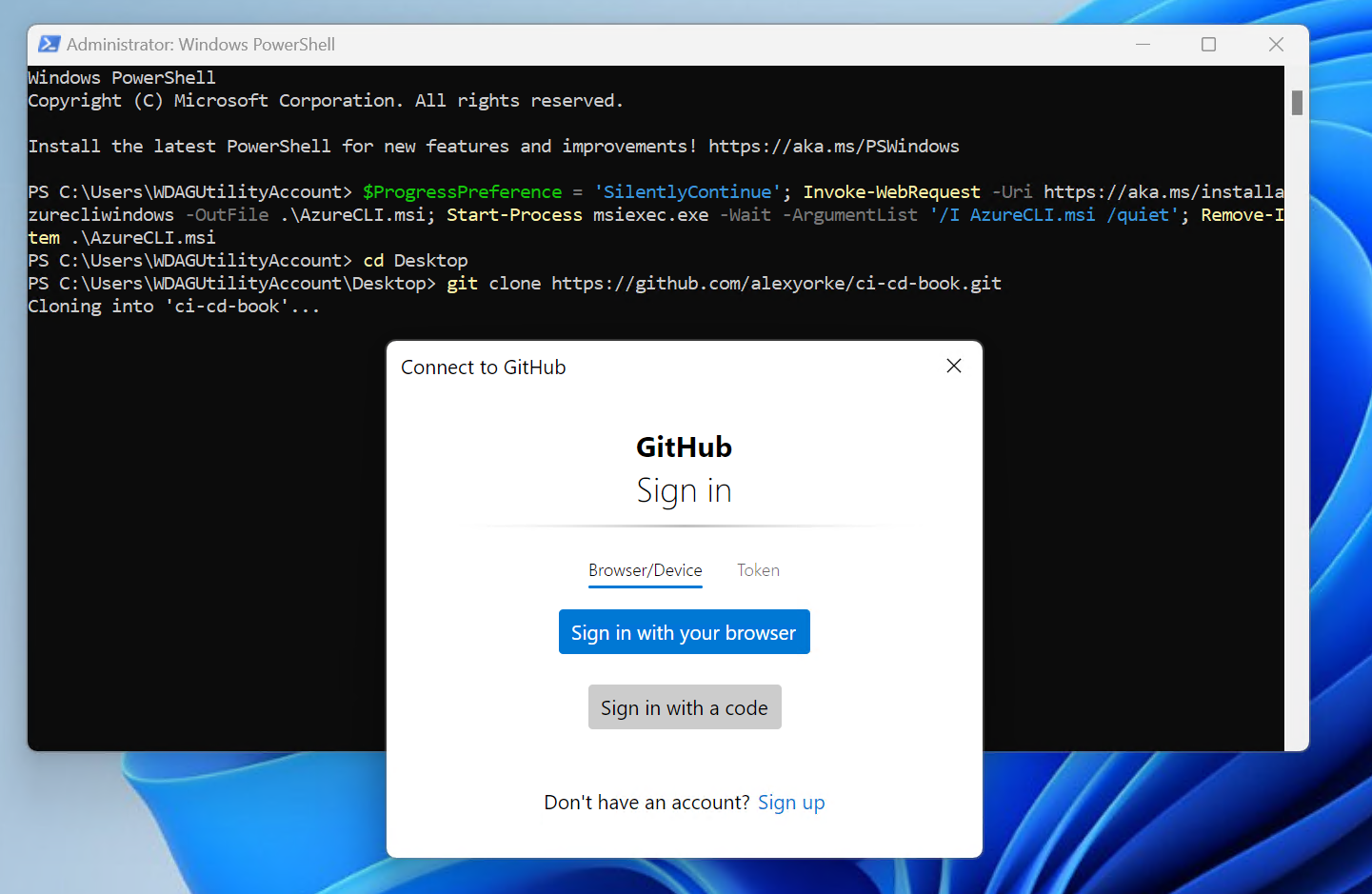

In order to run a workflow, you need a GitHub account and potentially a GitHub Enterprise organization. To create a new GitHub Enterprise repository, you first need to set up an account on GitHub and potentially get access to GitHub Enterprise, depending on your organization's setup. Here's how you can do it step-by-step:

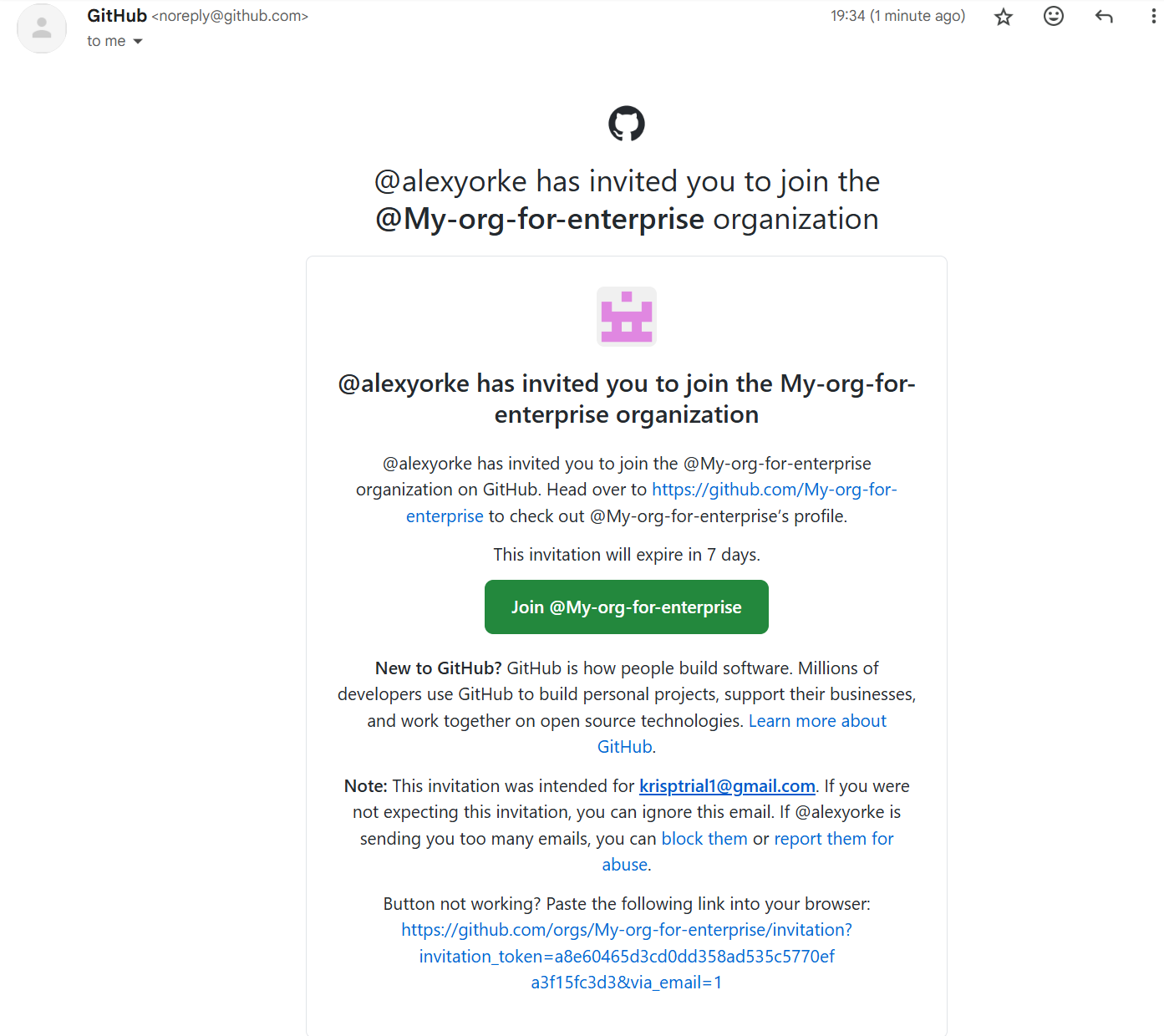

1. Sign Up for GitHub

-

Go to [GitHub](https://github.com/).

-

Click on the "Sign up" button at the top right corner of the page.

-

Fill in your details, including a username, email address, and password.

-

Verify your account by following the email verification link sent to your email.

2. Join or Set Up GitHub Enterprise

-

If your organization already has GitHub Enterprise: You will need an invitation to join from your organization's GitHub Enterprise admin. Once invited, you can log in using the credentials or SSO (Single Sign-On) method prescribed by your organization.

-

If you are setting up a new GitHub Enterprise: You can start a trial or purchase it by visiting the [GitHub Enterprise page](https://github.com/enterprise). Setting up GitHub Enterprise usually requires more extensive IT involvement to handle the installation on cloud or on-premises infrastructure.

3. Create a New Repository

Once you have access to GitHub (and GitHub Enterprise if using):

-

Click on your profile photo in the upper right corner, then click Your repositories.

-

Click the green New button, or if you are on your organization's GitHub Enterprise account, you may need to select the organization context first.

-

Enter a repository name, description (optional), and decide if the repository will be public or private.

-

Configure other settings like adding a README file, .gitignore, or a license according to your project needs.

-

Click Create repository.

4. Clone the Repository

- After creating your repository, clone it to your local machine to start working on the project. You can do this by opening your command line or terminal and running:

git clone https://github.com/username/repository-name.git

Replace username and repository-name with your GitHub username and the new repository's name.

5. Start Pushing Code

- After cloning the repository, you can start pushing your code to the GitHub repository by using:

git add .

git commit -m "Initial commit"

git push -u origin main

An exercise, try putting in the workflow described earlier in the doc GitHub workflow and see if you can figure out how to run it. It should just display Hello World and it doesn't require any code to build.

Workflow Triggers and Patterns

Workflow triggers are ways to automatically trigger your pipeline. When the workflow is triggered, it receives a set of inputs, for example the branch that it was triggered on as well as the date and time.