Extra Content (Draft)

This is a super super early version of some extra content that needs to be reorganized and put into other chapters. Most is likely duplicates from what is already included.

Frequent logging data types in Google GitHub Actions workflows

Okay, let's break down the specific kinds of information frequently logged in the run steps of these workflows, going beyond just the actions performed:

-

Versions:

- Tool Versions: Explicit checks like

cmake --version,bazel version,bazelisk --version,clang --version,go version,magika --version,clang-format --version. Implicitly logged when tools likesetup-java,setup-python,setup-go,rustup,actions/setup-noderun or during installation (apt install,pip install, etc.). - Dependency Versions: Logged during installation steps (

pip install,npm install,apt install,cargo build/update,mvn dependency:go-offline,conan install). Checks likecargo outdatedexplicitly log version differences. Specific versions are often pinned inuses:lines (e.g.,actions/checkout@v4,golangci/golangci-lint-action@v6.5.2). - OS/Platform Versions: Implicit in the

runs-on:directive (e.g.,ubuntu-22.04,macos-14). Android API levels (matrix.api-level) are logged. - Language Standard Versions: Explicitly set C++ standards (

-std=c++17,-std=c++20). - Build/Release Versions: Calculated from Git tags (

${GITHUB_REF#refs/tags/v},${REF:10}) or commit SHAs (${GITHUB_SHA}) and often logged viaechoor used in artifact names/paths. Tools likegoreleaserlog the version being released.

- Tool Versions: Explicit checks like

-

Hashes:

- Commit SHAs: Frequently logged for checkout actions, determining base refs (

${{ github.event.pull_request.base.sha }},git merge-base), identifying the commit being built/tested (${{ github.sha }},${{ github.event.pull_request.head.sha }}), generating build versions, or reporting status (statuses/${{ github.sha }}). Explicitly logged withgit rev-parse HEADorgit describe. - File Hashes: Used in cache keys (

hashFiles(...)). - Checksums: Logged by Gradle Wrapper validation (

wrapper-validation-action). GoReleaser generates checksum files, which are then often logged (e.g., base64 encoded). SLSA verification steps involve checksums. - Container Image Digests: Logged by GoReleaser and used in SLSA provenance generation/verification for images.

- Commit SHAs: Frequently logged for checkout actions, determining base refs (

-

Configuration & Flags:

- Build Types:

Release,Debug,RelWithDebInfo(often viamatrix.build_typeorCMAKE_BUILD_TYPE). - Compiler/Build Flags:

CMAKE_CXX_FLAGS,CXXFLAGS,-march=,-fsanitize=,-DBUILD_SHARED_LIBS=ON/OFF,-DDRACO_TRANSCODER_SUPPORTED=ON/OFF,-DSNAPPY_REQUIRE_AVX=..., CMake presets (--preset). - Tool Arguments: Arguments passed to scripts (

./script.sh arg), linters (golangci-lint-actionargs), tests (pytest -n auto), build tools (bazel build --config=...),osv-scannerargs (scan-args),cibuildwheelenv vars (CIBW_...). - Environment Variables: Explicitly set via

echo "VAR=value" >> $GITHUB_ENVor logged viaenv:blocks in steps. - Targets/Architectures:

TARGET: ${{ matrix.targets[0] }},matrix.arch,--config=android_arm64,--platform=....

- Build Types:

-

File Paths & Names:

- Paths added to

$GITHUB_PATH. - Paths specified in

actions/cacheoractions/upload-artifact. - Output directories (

out/dist,build,wheelhouse). - Specific config files being used (

.github/labeler.yml,debian/control). - Lists of changed files (

git diff --name-only). - Artifact names (often including versions/platforms).

- Source/test directories targeted by commands (

./src,./test,po/*.po).

- Paths added to

-

Test Results & Diagnostics:

- Pass/Fail status of individual tests and suites.

- Verbose test output (e.g.,

test_output=errors,CTEST_OUTPUT_ON_FAILURE=1). - Specific test names being run or filtered (

--gtest_filter=...,-E IntegrationTest). - Code coverage upload status (Codecov, Coveralls).

- JUnit XML report paths/generation (

make junit-regtest). - Flaky test run counts (

--runs_per_test 50). flutter doctor -voutput (detailed environment info).- Emulator configuration (API level, target, arch).

-

Linter/Formatter/Static Analysis Results:

- Specific findings (file:line:message) from tools like

clang-format,clang-tidy,golangci-lint,ruff,black,flake8,isort,mypy,pytype,pylint,gosec. - Diffs generated by formatters (

clang-format.diff,go mod tidy -diff). - SARIF file generation/upload status (CodeQL, OSV Scanner, Gosec).

- License header check results (list of files missing headers).

- API compatibility diffs (

japicmp:cmp). - Security scan results (OSV Scanner, CodeQL, Gosec, Coverity).

- Scorecard results.

- Specific findings (file:line:message) from tools like

-

Dependency Information:

- Packages being installed/updated (

apt install <pkg>,pip install <pkg>). - Cache hit/miss status and keys (

actions/cache). - Outdated dependency lists (

cargo outdated). go mod tidy -diffoutput.

- Packages being installed/updated (

-

Deployment & Release Information:

- Target tags/branches (

${{ github.ref_name }}). - Asset upload status and names (

actions/upload-release-asset, GoReleaser logs). - Publishing status to registries (PyPI, NPM, GHCR, Sonatype, CocoaPods).

- SLSA provenance generation/verification logs.

- Sigstore signing logs.

- Release note paths (

docs/release-notes/...).

- Target tags/branches (

-

System & Environment Information:

- Cache statistics (

ccache --show-stats). - Docker system info (

docker info,docker buildx ls). - Basic system info like processor count (

getconf _NPROCESSORS_CONF).

- Cache statistics (

-

Git Operations Details:

- Changed file lists (

git diff --name-only). - Merge base commit hashes.

- Commit counts (

git rev-list --count). - Cherry-pick status and target commits.

- Changed file lists (

In essence, while high-level actions are performed, the logs are rich with specific details about versions, hashes, configurations, file paths, test outcomes, static analysis findings, and deployment statuses.

Okay, let's start transforming these files into a structured document. I will begin by organizing the content into logical chapters and sections, ensuring the information is retained verbatim as requested.

Chapter 1: Introduction to Continuous Integration and Continuous Deployment (CI/CD)

1.1 What is CI/CD?

Continuous Integration/Continuous Deployment (CI/CD) software represents a suite of tools designed to enhance the software development lifecycle. These platforms provide extensive functionalities that optimize the CI/CD process. Key features include automated builds, continuous testing, artifact management, deployment automation, and environment management. Furthermore, they allow for intricate pipeline orchestration, support a myriad of integrations, offer monitoring metrics, and include security scanning tools.

CI/CD is a software development methodology optimizing for frequent and efficient deployment of small changes. It is a combination of Continuous Integration and either Continuous Deployment or Continuous Delivery, depending on where this term is used.

CI/CD aims to avoid “integration hell” by ensuring continuous integration and either continuous delivery or deployment. Work is constantly merged into the main/master branch after it has been verified via code review and the continuous integration pipeline. This involves practices like trunk-based development, where all developers work on a shared branch, promoting constant integration and minimizing merge conflicts. While CI/CD emphasizes readily deployable code, it allows flexibility in release schedules to align with business needs and customer expectations.

It requires both technical and cultural shifts, including:

- Smaller work units: Breaking down features into independently deployable and testable components.

- Modular codebase: Facilitating localized changes without impacting the entire application.

- Focus on rapid feedback: Prioritizing quick delivery of changes and gathering customer insights.

1.2 History of CI/CD

- Pre-1960's: Early computing was exclusive to entities like governments and large corporations due to high costs and complex maintenance. This led to a risk-averse, bureaucratic software development culture, using the Waterfall methodology. Dr. Winston W. Royce critiqued the Waterfall model in 1970, yet its basic form was widely adopted for its structured approach, fitting the slow, tedious programming challenges of the time.

- 1960-1970s: The era's bureaucratic environment influenced the development of critical practices like Source Code Management (SCMs), vital for managing and auditing code changes. Key developments included the introduction of the Source Code Control System (SCCS) in 1975, as discussed in Marc J. Rochkind's paper, and B. L. Ryle's work on software configuration management. This period also saw increased focus on testing and established repeatable build processes to mitigate risks.

- 1980s: The late 20th century saw advancements with SCM systems like SCCS, RCS, and CVS, and the rise of Integrated Development Environments (IDEs). Notable developments included the GANDALF IDE, which integrated development with RCS, and Watts S. Humphrey's "Managing the Software Process" (1989), focusing on iterative development approaches and process improvement. Challenges included real-time testing for embedded systems, highlighted in Richard N. Taylor's 1984 study.

- 1980s-1990s: Increased computer accessibility led to a boom in the software industry, with startups like Amazon emerging. The period was marked by "Integration Hell," a term possibly first formally introduced in Douglas Hackney's 1997 work. To combat this, the concept of nightly builds became popular. These are builds that are automatically triggered every night from the latest version of the codebase, allowing teams to detect and fix integration issues the next morning. Integration challenges were further analyzed by Nancy Staudenmayer and Michael A. Cusumano (MIT, 1998). Watts S. Humphrey emphasized the need for reproducible builds in "Managing the Software Process." The early 2000s saw the rise of Extreme Programming (XP), addressing integration risks, and the emergence of the Capability Maturity Model (1991). Microsoft's daily builds in 1996, detailed in Steve McConnell's work, marked a significant shift towards more efficient development practices.

- 2000s: Continuous Integration (CI) revolutionized software development, popularized by Martin Fowler in 2000. CI's emphasis on regular integrations, automated builds, and fast feedback loops significantly improved development efficiency. Tools like CruiseControl, Jenkins, TeamCity, Bamboo, and GitLab CI further established CI/CD practices.

- 2010's onwards: The rise of Distributed Version Control systems like Git signaled a shift in software development, emphasizing continuous feedback and iterative processes. Jez Humble and David Farley's "Continuous Delivery" (2010) advocated for automation and ensuring software readiness for release, paving the way for the evolution of DevOps, which emphasized collaboration, automation, measurement, and sharing.

1.3 Key Concepts and Terminology

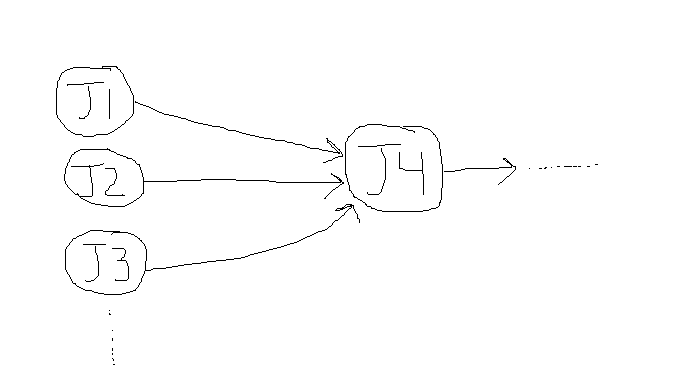

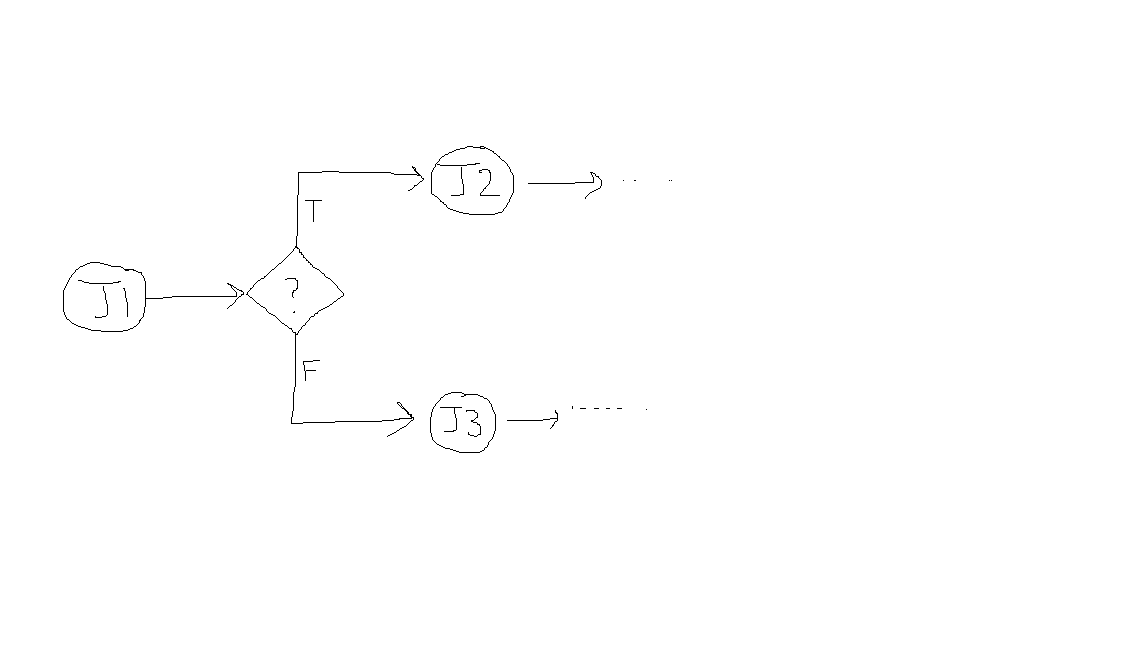

- Build pipelines (or just pipelines) are simply scripts that developers maintain to perform useful work, such as building your application, generating build artifacts, or deploying your application. It is called a pipeline because the way it is structured encourages a one-way dataflow, and a set of goals that are or are not achieved.

- They are central to CI/CD, and developers will be maintaining them constantly, and updating them. Therefore, it's very important that you know the right terminology for them, how they work, and how to create them. If, for example, the deployment pipeline fails, then it must be fixed quickly, because this is usually the only route to production. Therefore, it is important to know what they are, how to create them, and how to debug them quickly and effectively.

Disambiguation of terms between CI providers:

-

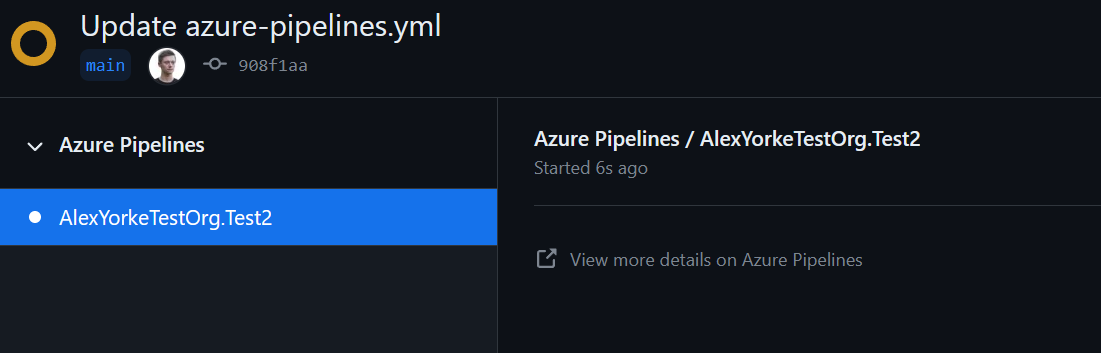

This book is somewhat focused on GitHub Actions, but tries to provide a provider-agnostic view. Some of the terms might be a bit different depending on your CI/CD provider. Here is a table that helps clarify.

Definition Generic Term Jenkins GitHub Actions GitLab CI/CD CircleCI A build step is a single task or command within a CI/CD pipeline. It's a specific action to be executed, such as compiling code, running tests, or deploying software. Build Step Build Step Job Job Job In software development, an environment refers to a setup where software runs. This can include factors like the operating system, available software and tools, system variables, and network access. Different environments (like development, testing, and production) mimic different stages of the software lifecycle. Environment Node Runner Runner Executor A workflow is a sequence of tasks that process a set of data. In CI/CD, a workflow is a set of rules for defining the build process, typically comprising multiple jobs or build steps. Workflow Pipeline Workflow Pipeline Workflow In CI/CD, a trigger is an event that initiates the execution of a workflow or pipeline. Common triggers include code commits, pull requests, scheduled times, or manual intervention. Trigger Build Trigger Event Trigger Trigger Secrets are sensitive data, such as passwords, tokens, or keys, essential for the operation of applications and the security of resources. In CI/CD pipelines, secrets are used to access resources without exposing them in the code or workflow definitions. Secrets Credentials Secrets Variables Environment Variables A container is a lightweight, executable package that includes everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings. Containers are isolated from each other and the host system, ensuring consistency across different environments. Container Agent/Docker Agent Container Docker Executor Docker Configuration in software development refers to the settings and parameters that define how software or hardware operates. In the context of CI/CD, configuration files (like YAML files in GitHub Actions) specify the parameters and settings of the build process. Configuration Jenkinsfile .github/workflows/* .gitlab-ci.yml .circleci/config.yml Artifacts are files or data that are produced as a result of a build step or job in a CI/CD pipeline. These can include compiled code, binaries, libraries, containers, and documentation. Artifacts Build Artifacts Artifacts Artifacts Artifacts In CI/CD, caching refers to the practice of storing a part of the build process, like dependencies or compiled code, so that it can be reused in subsequent runs, improving build speed and efficiency. Cache Workspace Cache Cache Cache Parallelism in CI/CD is the execution of multiple build steps or jobs simultaneously. It is used to speed up the build process by dividing the workload across multiple runners or agents. Parallelism Parallel Builds Matrix Builds Parallel Matrix Parallel Jobs Build status is an indicator of whether a build or integration process in a CI/CD pipeline succeeded or failed. It provides immediate feedback on the health and success of a change or a set of changes made in the repository. Build Status Build Status Check Pipeline Status Build Status -

Some testing environments might have different terms. These might be called "Development Environment", Dev, QA, Staging, UAT, PPE, Testing, Experimental, or Beta. These terms may have different connotations, depending on which environments you are using, and for which purpose.

1.4 Continuous Integration (CI)

The first step is continuous integration. Breaking this down, continuous means often or as frequently as possible in integration means the act of combining multiple disparate pieces into a whole. Integration is the act of constantly merging your changes with other developers', and vice-versa. It's the act of combining multiple changes, from multiple developers, into a single, cohesive whole, regularly. All developers work on a shared codebase. Integration (i.e., snapping the puzzle pieces together) occurs regularly.

Normally in CI/CD, long-lived branches are discouraged because it means that work is not integrated and testable with the rest of the application. Separate branches are normally considered developer workspaces. So, we need a way to make sure that integration work is done, well, continuously. Work is only integrated if it exists as part of the application.

Trunk-based development is a software development strategy that emphasizes frequent merges to a single branch known as the “trunk.” This approach can be likened to the structure of a tree. In this analogy, the trunk represents the main codebase, which is central and robust, continually growing as new changes are integrated. The branches, on the other hand, represent smaller, temporary code changes or features that developers work on. These branches are merged back into the trunk, ensuring that they become part of the main/master branch. This method promotes a continuous integration environment where updates are made regularly and incrementally to the central codebase, minimizing the complexity of merges and reducing the risk of significant conflicts.

To support integration the tasks themselves have to be small and integrable, and must be done in an intelligible order. Simply making the PRs small isn't sufficient. At every point after you finish your task, you should be able to validate whether your work is getting closer to being fully integrated. This is because if the PR's are too small, there might not be any functionality to test, so it might be unclear if the work is actually integrated. An anti pattern in this case would be to commit one line of code that creates A variable but the variable is not used. It's unclear if this code is actually integrated, because simply writing the code assigns it to the variable, but the variable is not used. This means that the code is likely removed at runtime by the compiler. And has no effect on the application.

This means that you should be able to integrate against others' work, that is, you should be able to know if you're making progress towards your goal, and have concrete evidence (i.e., tests pass and these tests are related to what the user can do.) You should be able to know, very early on, if part of your feature is working with the other parts of the application. It doesn't have to be finished super fast, rather, that you are aware that things are fitting together properly, and you're able to test it in production. Say for example, we're working on the weather application. We would like to create a new user interface for this weather application. If we start with removing the existing weather overlay, well, then the customers can't use it in the meantime, so we can't integrate these changes--this is a problem. Instead, we should develop the newer features alongside the current overlay and provide the capability to remove and replace the old weather overlay with the new one. This is normally made much easier through modular application approaches (and good application architecture.) This may require a bit of refactoring initially, as the application may not be built with every possible business requirement in mind.

1.5 Continuous Delivery vs. Continuous Deployment (CD)

Continuous Deployment (often confused with Continuous Delivery) is the practice where every change that passes the automated tests is automatically deployed into the production environment With little to no human intervention. This also encompasses CI and is a superset of CI. The work has to be integrated first with continuous integration before it is deployed to the production environment.

Continuous Delivery, on the other hand, ensures that the code is always in a deployable state, but it may not be deployed to production automatically. Instead, it might require manual approval. It provides the business with the opportunity to deploy at any point. Further tests, often user acceptance testing (UAT) or other types of end-to-end tests, are run in this environment. Therefore, in organizations with low risk tolerance, continuous delivery might be a safer approach. Continuous Deployment is a superset of continuous deployment. It just extends continuous delivery to make sure that the code is always in a deployable state and also deploys it.

This should be the only route to deploy to production because otherwise the point of having the continuous delivery pipeline is compromised because changes external to it can be non-idempotent, thus, making using the pipeline more brittle as manual configuration changes aren't reflected in the automation. The goal is to create artifact(s) that can be deployed as close as possible with minimal modifications. Do use the artifacts created by the continuous integration pipeline as inputs. Failure to do so means there is no trust in the continuous integration pipeline's ability to generate usable artifacts that can be used as-is for continuous deployment.

Note: how do I remember the difference between continuous delivery and continuous deployment? Continuous Deployment means to Deploy (i.e., a ship), and Deploy is getting it out to production.

- [Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation: Humble, Jez, Farley, David: 9780321601919: Books - Amazon.ca]

- [Continuous Integration: Improving Software Quality and Reducing Risk: Duvall, Paul, Matyas, Steve, Glover, Andrew: 9780321336385: Books - Amazon.ca]

1.6 The CI/CD Process Overview

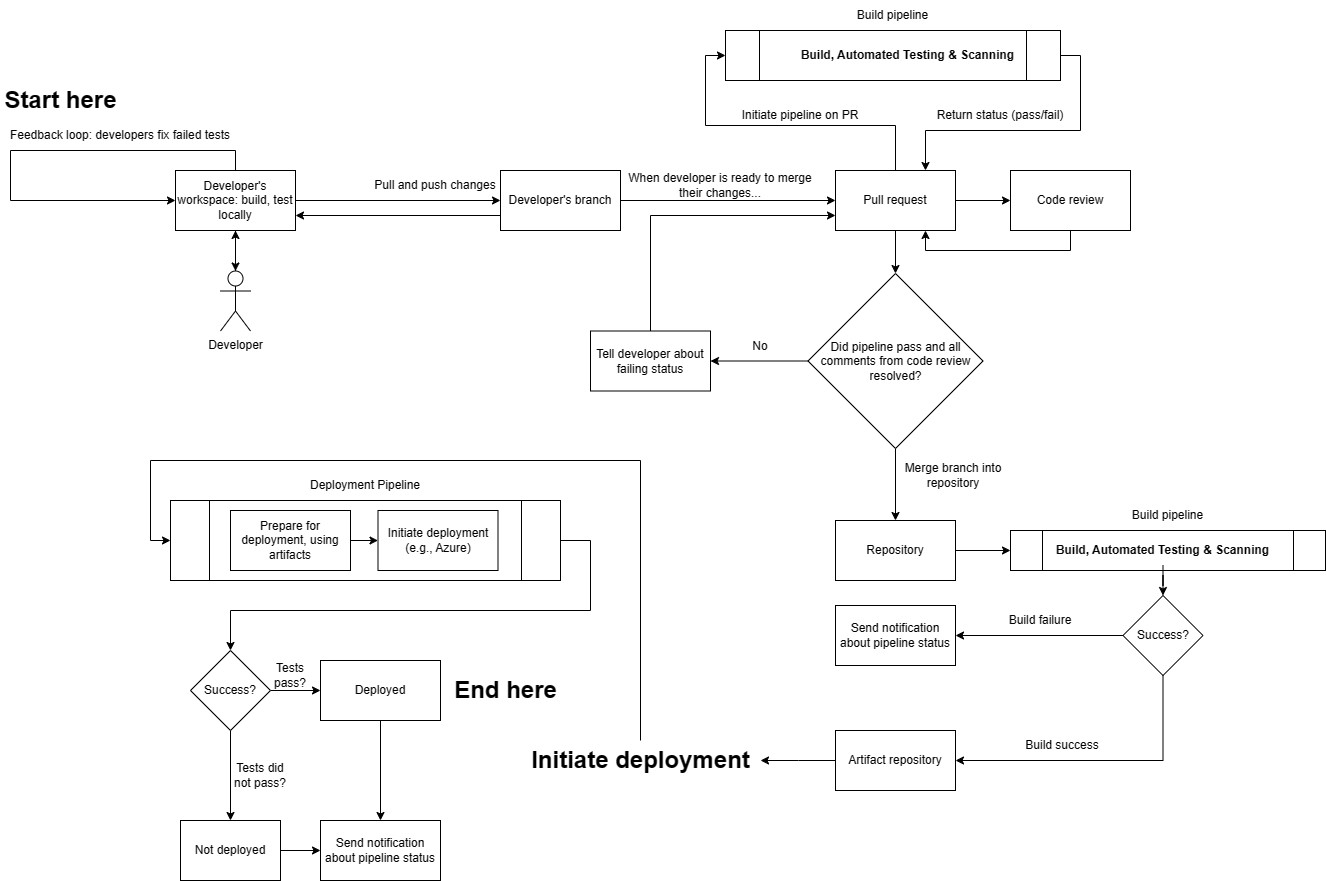

Here is what the software development process looks like when using CI/CD. Note that many of these processes are automated.

Here's a description of what's going on in the diagram.

- After tasks are broken down, then they can be worked on by the developers. Once completed, they create a Pull Request (PR). This automatically triggers a Continuous Integration (CI) pipeline that includes building, testing, and linting the PR. Your CI/CD provider should have detailed instructions on how to get the pipeline set up for the first time, and can automatically run it when developers create PRs. The pipeline, maintained by developers, must succeed before the PR is merged. This is a critical part of CI/CD, because the pipeline builds and runs the automated tests, which allow developers to gain confidence in their changes, and ensures that the work meets a quality threshold prior to merging (and thus becoming available for other developers to work on the changes, and for them to be available to the customers.)

- If it is not successful, then developers have to fix their changes before they are merged--this also includes code review. A successful pipeline run results in build artifacts being published to an artifact repository. However, at this stage, the artifacts remain unused in the repository and are not yet accessible to customers. Therefore, we need to have a way to release these to production.

- In the past, releasing software meant that changes were available to customers immediately, or via a death-march rollout that was difficult to undo. This mimicked the real world closely, which meant that it was easy to understand the process. For example, say I am writing a newspaper. If I hit print, and distribute all of the newspapers to everyone, and there is a typo, then it's going to be costly to fix. I can't easily pull the newspapers back into my office and change them.

- This is a very scary prospect, and so code was withheld until it was thoroughly tested. This makes sense: It was difficult to quickly deploy a new version of your application with the fix and tooling was not as mature as it was today.

- Deployments are managed via a “deployment pipeline” similar to the build pipeline but tailored for deployment. The specific deployment steps and commands vary based on the cloud provider, but all require the build artifacts and relevant credentials to access production environments. Deployments utilize infrastructure as code, using predefined templates to dictate resource provisioning and application setup, ensuring configurations are standardized and rebuilt from scratch to avoid nonstandard “snowflake” machines.

- When we do CD, we can deliver code that sometimes is still a work in progress. Normally, this would be of great concern: the feature isn't done yet, so customers would be negatively impacted because the feature would be buggy or wouldn't work. However, we can safely hide it behind a feature flag. This means that customers are not impacted by the work that is in progress. The application looks the same, and functions the same. Instead of it being integrated on a develop branch internally, it is in production, but just inactive.

- There are many strategies for deploying the new changes. One way is to put the new change behind a feature flag, which means that it is only enabled once the feature flag is turned on. This approach is useful if you're developing a small-to-large sized feature, and need multiple PRs to create it. If it's a very small change, then it's not as useful to put behind a feature flag, as the overhead is likely too high. Additionally, any changes, even if they are behind a feature flag, have a risk to production. For example, upgrading dependencies.

- Therefore, we need a way to make sure that we can gradually incorporate changes to production without interrupting existing users. There are two main strategies we can use to do this. One is called blue-green deployments: Maintain two production environments (blue for the current version and green for the new version) to achieve zero-downtime deployments. Another is called incremental deployments, or canary deployments: Release updates to a subset of users initially, gradually increasing the reach, allowing early feedback and risk mitigation.

- Blue-green deployments are useful when there are major changes, or if customer traffic cannot be served by two different nodes running different versions of the application. Think large infrastructure upgrades, database refactorings, etc. Most changes can be serviced via incremental deployments, where old copies of the application are destroyed, and newer copies are deployed in its place. This allows for a gradual introduction of new changes to production, and traffic is drained from the nodes before they go into service.

- Note that the act of moving changes to production does not necessarily mean that they are "released." Deploying, Delivering, and Releasing take on different definitions. This requires a very different way of thinking about how you approach releasing changes to customers, and is a very large paradigm shift.

- Over time, as we work on the feature, it might become ready for customers. In this case, we can gradually enable the feature flag to make it available to customers.

- To mitigate risk, we adopt a two-pronged approach. First, we slowly enable the feature flag. This means that the feature is not available to all customers yet. Second, we write monitors for our feature (much like the canary in the coal mine.)

- This stage ends once the application is available to the customer. This means that there are some post-deployment checks that occur, however, they are short in nature and only represent a snapshot of time.

- After (and during release), the application is closely monitored using continuous monitoring. This ensures that if the application goes down, that the deployment is quickly rolled back.

1.7 Benefits of CI/CD

(This section could incorporate benefits mentioned implicitly, like faster feedback loops, reduced risk, improved quality, and avoiding "integration hell". The source text doesn't explicitly list benefits in one place, but they are woven throughout the descriptions.)

1.8 Related Concepts

-

DevOps:

- [The Phoenix Project: A Novel about IT, DevOps, and Helping Your Business Win: Kim, Gene, Behr, Kevin, Spafford, George: 9781942788294: Books - Amazon.ca]

- [The DevOps Handbook: How to Create World-Class Agility, Reliability, and Security in Technology Organizations: Kim, Gene, Debois, Patrick, Willis, John, Humble, Jez, Allspaw, John: 9781942788003: Books - Amazon.ca]

-

Complexity in Software Development:

-

Key Points from the Continuous Delivery Podcast: Complexity This episode explores complexity in software development from various angles.

What is complexity?

- Difficulty in completion: Many dependencies, unreachable stakeholders, and external factors contribute to complexity.

- Unpredictability: Inability to foresee how changes will impact the system.

- Effort disproportionate to change: Simple changes requiring extensive coordination and effort.

- Codebase intricacy: Difficulty understanding code structure, duplication, and fear of unintended consequences.

Causes of complexity:

- Technical debt and legacy code: Messy, poorly architected codebases.

- Overly complex frameworks: Using "one size fits all" solutions that introduce unnecessary dependencies.

- Designing for unknown future: Building features for anticipated needs instead of focusing on current requirements.

- Organizational structure: Conway's Law - system complexity mirrors organizational complexity. Poorly architected systems reflecting organizational changes.

Combating complexity:

- Merciless refactoring: Continuously simplify code, keeping methods and classes small.

- True DevOps adoption: Empowering developers to build automation and simplify workflows and environments.

- Tight feedback loops: Short planning cycles with frequent feedback from product and end-users.

Identifying and tracking complexity:

- Cyclomatic complexity, maintainability index, and other static code analysis tools.

- Time to implement changes: Increasing time indicates growing complexity.

- Throughput measurement: Low throughput can be a symptom of a complex system.

- Number of code changes to fix a bug: Multiple changes for a single bug suggest a complex system.

Other important points:

- Cynefin framework: A model for understanding and addressing complexity based on the nature of the problem.

- Stacy complexity matrix: Applying the Cynefin framework to system design.

- Complexity impacts the entire organization: From development teams to organizational structure.

Call to action:

- Join the Continuous Delivery Podcast LinkedIn group.

- Follow the podcast on Twitter: @continuouspod.

- [1w7qpw29ni6lnbex9gksl5y6d961.mp3](#w7qpw29ni6lnbex9gksl5y6d961.mp3)

-

This concludes the first chapter. Please let me know when you're ready for Chapter 2, which will cover preparing the development environment.

Okay, here is the next chapter, focusing on preparing the development environment, version control, and build tools.

Chapter 2: Preparing the Development Environment

2.1 Setting Up Version Control

Effective software development, especially within a CI/CD framework, relies heavily on robust version control practices. Version Control Systems (VCS) like Git track changes to code over time, enable collaboration among developers, and provide a history that is crucial for debugging and understanding the evolution of the software.

2.1.1 Setting Up Developer Identity

- Each developer should have an identity, that is, some sort of way to identify them when contributing to the repository. This normally requires creating an account for each developer, or, depending on your CI software, might be able to be linked to another identity provider. Instructions vary. The developer's identity must be set up prior to cloning the repo, otherwise, developers would not be able to clone it. This normally requires setting up SSH Keys with your CI/CD provider. Your provider will have more instructions on how to set this up.

- It is important that each developer has their own identity, because one has to be able to track their changes. This is useful for example for security purposes, and having the ability to know who to ask if more information is required on a change. It ensures only those authorized and authenticated can contribute.

2.1.2 Get a Repository

- This will eventually contain everything that your application needs to run. All developers must be able to access this repository. This means that the server (in this case the Git server) has to be accessible to all of the developers. If you are already working from a repository, then you can skip this step. Usually, CI/CD software will host it for you automatically, and you don't have to manage or host it, all you have to do is go through a wizard on a webpage to set one up for your project.

- Second, you will need to store all of your application code in the repository. Usually, this includes application-specific dependency specifications but does not usually include the actual dependencies themselves. It also includes application source code, configuration files, database migrations, documentation, tests, resources, localizations, etc. (everything that your application needs to run.) If your code is hosted somewhere else, then it will have to be copied to this repository and then pushed to the main branch.

- If you already have a repository, then you'll have to import it into the provider.

2.1.3 Creating a .gitignore file

A .gitignore file is a special file used by Git, the version control system, to determine which files and directories should be excluded (or ignored) from version control. This means that the specified files and directories will not be tracked by Git, even if they exist in your working directory.

The .gitignore file goes in the root of your repository, and is shared by all developers.

Not all build artifacts are necessary to run the application, and some files are necessary but shouldn't be part of VCS because they should be injected through the environment at runtime (e.g., credentials and sensitive data.) For example, when building an application, it might produce build artifacts such as its executable or final build artifacts. These are necessary to run the application, but shouldn't be committed to version control because they are published as part of the build process. If they were part of version control, then it would be difficult to know how they were generated--were they generated on the build server, or someone's development machine? It would also make working with the repository very, very slow, as Git and other VCSes are not designed to work with large binary files. It would also take up a lot of disk space, too.

| Type of File/Folder | Commonly Stored in VCS | Not Commonly Stored in VCS |

|---|---|---|

| Source Code Files | ✓ | |

| Documentation | ✓ | |

| Configuration Files | ✓ | |

| Build Scripts | ✓ | |

| Unit Test Files | ✓ | |

| Images & Other Media (if essential to the project) | ✓ | |

| Dependency Files (e.g., package.json, requirements.txt) | ✓ | |

| Large Binary Files (e.g., videos, large images) | ✓ | |

| Generated Files (e.g., compiled binaries) | ✓ | |

| Temporary Files | ✓ | |

| User-specific Configuration | ✓ | |

| Credentials & Sensitive Data | ✓ | |

| Log Files | ✓ |

There are some general principles on whether to version control something or not.

- Is it reproducible and what is the effort for reproducing it? If I run the same command(s) on the same set of file(s) later on, will I get the same output/same changed state? How is it generated, is it generated via a script?

- How large are the files? Are they significantly larger than the files in the repo? Does it generate many thousands of files at once? Many tools are not designed to work with thousands or tens of thousands of changed files at once.

- Are these file(s) not meant to be shared among developers? For example, do developers gain a benefit by having these files version controlled? Should they be shared?

- Are these changes not feasible to be reviewed during code review? For example, thousands of changed files, or a binary blob could be anything. Does a criteria exist to review it or are the changes overwhelming where it is not possible to efficiently do so?

- Answering yes to all questions means that it is likely that the file(s) should not be version controlled and vice-versa.

Example: node_modules folder

- Is it reproducible? Yes, through package-lock.json.

- How large are the files? The node_module folder can be very large, up to 600MB. This is usually much larger than the source code in the repo.

- Are the files not meant to be shared among developers? The node_modules folder is specific to an OS and package-lock.json file. Each developer has their own node_modules folder.

- (Conclusion: Should not be version controlled)

Example: source-code

- Is it reproducible? In theory, yes but it is highly unlikely to be 100% reproducible and takes a lot of effort to reproduce. There are many ways to solve a problem, similar to writing a book. Even small variations can change program behavior.

- The files are likely to be of similar size.

- The files are meant to be shared among developers, as other developers will be working on the source code.

- The number of files is likely to be able to be modified by the programmer by working on a smaller feature. Thus, they should be able to be reviewed during code review.

- (Conclusion: Should be version controlled)

Link to gitignore templates: [GitHub - github/gitignore: A collection of useful .gitignore templates]

2.2 Establishing a Consistent Local Development Environment

When you're working on multiple projects (or a single project) it can be useful to containerize your development setup. What this allows you to do is to come back to a consistent development environment, and also make sure that other people on the team have a consistent environment. The advantages here is that it can help minimize debugging time (and toil) because everything is set up exactly the way that you want it to be. Since containers are ephemeral, then any changes that you might accidentally make to the environment are not saved. This allows for faster development time, because you are able to easily start up an environment with a couple, simple commands that might have complicated instructions to set up the environment.

The best part is that it is set up the same time every time, so it is always consistent. Since it is isolated, it doesn't matter what other software is on your computer, it is isolated. This means that if you have other versions of software installed, then they won't conflict. If you know that the software dependencies are the same between your environment and CI, then you have greater confidence that your changes are correct, and match what the customers will see. This is because it is technically possible to run different versions of the software on CI and your local testing environment--it is possible that the application will still build and run fine, however, there might be strange issues that weren't covered by tests, for example.

Automate the developer environment setup with scripts or containers to save time and avoid inconsistencies. Use dev containers as much as possible.

How do you make other people use those settings/containers/dev containers to build your software? Is there a way to enforce that particular IDE extensions are installed on each developer's computer? There doesn't appear to be a way to have the extensions force-installed, but you can add them to devcontainer json and it'll prompt as recommendations.

2.2.1 Tools for Managing Development Environments

Setting up a local development environment can be time-consuming and challenging, especially when working on complex projects or collaborating with multiple teams. Today, there are several tools and platforms designed to streamline this process, ensuring that developers can start coding with minimal setup. Let's delve into some of these solutions.

-

GitHub Codespaces:

- Overview: Codespaces provides a complete, configurable dev environment on top of GitHub. It enables developers to work from anywhere, on any device, without lengthy setup.

- Features:

- Browser-Based IDE: Develop directly within your browser without any setup.

- Visual Studio Code Integration: Offers the same features and extensions as VS Code.

- Customizable: Use a

devcontainer.jsonfile to specify the tools, extensions, and configurations needed.

- Use Cases: Ideal for open-source contributors, remote teams, or any situation where setting up a local environment might be cumbersome.

- Getting Started with GitHub Codespaces:

- Prerequisites:

- Ensure you have a GitHub account. If not, sign up at GitHub.com.

- Currently, GitHub Codespaces is a paid service, so you'll need to have billing set up or be part of a team that has access to Codespaces.

- Access Codespaces:

- Navigate to the GitHub website and sign in.

- Once logged in, click on your profile picture in the top right corner and select "Codespaces" from the dropdown.

- Create a New Codespace:

- Click the "New codespace" button.

- Choose a repository from your existing ones, or use a public repository's URL. This repository will be the base for your Codespace.

- GitHub will prepare the Codespace and start up a virtual machine. This may take a few minutes the first time.

- Setting Up The Environment:

- GitHub Codespaces will attempt to automatically configure the environment based on the repository. If the repository contains a

.devcontainer/devcontainer.jsonfile, it will use it to configure the Codespace environment. Otherwise, it will provide a standard environment. - You can customize the environment by modifying the

devcontainer.jsonfile, allowing you to specify software, extensions, and settings for the Codespace.

- GitHub Codespaces will attempt to automatically configure the environment based on the repository. If the repository contains a

- Using the Codespace:

- Once your Codespace is ready, it will open in the browser using the Visual Studio Code (VS Code) interface.

- Use it just like you would use VS Code locally. You can write code, run commands in the integrated terminal, debug, use Git, and install extensions.

- Committing Changes:

- Make changes to your code and files as you would in a local development environment.

- Commit your changes directly from Codespaces to the GitHub repository.

- Suspending or Deleting a Codespace:

- If you're done with your work session, you can close the Codespace tab. It will automatically be suspended after a period of inactivity, saving your work and state.

- To delete a Codespace, navigate to the Codespaces section on GitHub, hover over the Codespace you want to delete, click on the "..." (more options) button, and select "Delete".

- Accessing Codespace on Different Devices:

- You can access your Codespace from any device with a web browser. Just navigate to GitHub, go to the Codespaces section, and select the one you wish to work on.

- Local Development (Optional):

- If you prefer, you can also connect to your Codespace using the local VS Code application on your machine, ensuring a seamless transition between local and cloud development.

- Stay Updated: * As GitHub continues to refine and expand the Codespaces feature, it's a good idea to check the official documentation and GitHub blog for updates, new features, and best practices. Remember, while Codespaces provides a powerful cloud development environment, always be conscious of the associated costs, especially if you're working with a large team or on multiple projects.

- Prerequisites:

-

Dev Containers:

- Overview: Development containers, or "dev containers", provide a consistent environment for development, which can be shared across a team. They're powered by Docker and can be used with platforms like Visual Studio Code.

- Features:

- Isolated Environment: Ensure that all developers are working within the same setup, reducing the "it works on my machine" syndrome.

- Reproducibility: Easily recreate the environment, making onboarding new team members smoother.

- Integration with IDEs: Visual Studio Code, for example, has a Remote - Containers extension that integrates seamlessly with dev containers.

- Use Cases: Suitable for teams looking for consistency across development environments, or for projects with complex setup requirements.

- [Pros and cons of using devcontainers in pipelines - DEV Community] nuanced perspective, some github actions can't run inside of the container unfortunately. Useful if you don't want to use any actions and just want to do your own thing, completely separate from github.

- [devcontainers/ci: A GitHub Action and Azure DevOps Task designed to simplify using Dev Containers (https://containers.dev) in CI/CD systems.] using them locally (even if they're not on the CI) is still useful, however, as it ensures consistency when developing locally. Just remember to make sure to update the workflow file when the dev container changes, maybe in the pipeline make a script that puts a comment on the PR reminding people to verify if the dev container and workflow are using the same dependencies.

- Getting Started with Dev Containers in VS Code:

Development containers in Visual Studio Code, also known as "Dev Containers," are a part of the Remote Development extension pack. They allow developers to define consistent, reproducible, and isolated development environments encapsulated within Docker containers. This ensures that everyone on the team has the same setup and tools, regardless of their local machine setup.

- Prerequisites:

- Install Visual Studio Code.

- Install Docker Desktop and ensure it's running on your machine.

- Inside VS Code, go to the Extensions view by clicking on the square icon in the sidebar or pressing

Ctrl+Shift+X. - Search for and install the

Remote - Containersextension.

- Open or Create a Project:

- Open an existing project in VS Code or create a new one.

- Add Dev Container Configuration:

- Press

F1to open the command palette. - Type and select

Remote-Containers: Add Development Container Configuration Files.... - A list of predefined container configurations will appear, based on the detected type of your project. Choose a configuration that matches your project or select a base one (like

Node.jsorPython 3). - This action will add a

.devcontainerdirectory to your project with adevcontainer.jsonfile (and possibly aDockerfile).

- Press

- Customize the Dev Container (Optional):

- Edit the

Dockerfileif you want to customize the container's base image, install additional software, or change settings. - Modify the

devcontainer.jsonto adjust various settings like forwarded ports, mount points, extensions to be installed, etc.

- Edit the

- Open Project in Dev Container:

- Press

F1to open the command palette again. - Type and select

Remote-Containers: Reopen in Container. - VS Code will build the Docker image (this might take some time during the first run), start a container, and then reopen your project inside the container.

- Press

- Develop Inside the Container:

- Once inside, you can code, run, debug, and use the terminal just like you would locally. Any tools, SDKs, or configurations you defined for the container are immediately available.

- Extensions defined in

devcontainer.jsonare installed within the container, ensuring everyone on the team has the same development setup.

- Managing the Container:

- To stop or start the Dev Container, use the

Remote-Containers: Stop ContainerandRemote-Containers: Start Containercommands from the command palette. - If you make changes to the

Dockerfileordevcontainer.json, use theRemote-Containers: Rebuild Containercommand to apply them.

- To stop or start the Dev Container, use the

- Returning to Local Development:

- To go back to local development, click on the green remote indicator in the bottom left corner and select

Close Remote Connection.

- To go back to local development, click on the green remote indicator in the bottom left corner and select

- Sharing the Setup:

- Commit the

.devcontainerdirectory to your version control system (e.g., git). This allows other team members to check out the project and immediately get the same development environment by reopening the project in a container.

- Commit the

- Advanced Configurations: * As you become more familiar with Dev Containers, you can take advantage of advanced configurations like using Docker Compose to set up multi-container environments, setting post-create scripts, and more. By following these steps, you'll have a consistent and isolated development environment that can be shared and reproduced across your team, helping eliminate the "it works on my machine" problem.

- Prerequisites:

-

Docker Compose:

- Overview: Docker Compose is a tool for defining and running multi-container Docker applications. Developers can use a

docker-compose.ymlfile to configure application services. - Features:

- Multiple Services: Easily define and run applications comprised of multiple containers.

- Networks and Volumes: Create shared networks, storage volumes, and more.

- Easy Scaling: Scale specific services with a single command.

- Use Cases: Great for local development and testing of microservices architectures or any multi-container app.

- Getting Started with Docker Compose:

Docker Compose is a powerful tool for defining and running multi-container Docker applications. In this guide, we'll use Docker Compose to set up a simple web server as an example.

1. Prerequisites:

- Install Docker Desktop for Windows or Mac. For Linux, install Docker and Docker Compose separately.

- Ensure Docker is running on your machine. 2. Create a new directory:

3. Create a Dockerfile: Inside themkdir my-webserver && cd my-webservermy-webserverdirectory, create a file namedDockerfilewith the following content to set up a basic Nginx web server:

4. Create a directory for your HTML files:FROM nginx:alpine COPY ./html /usr/share/nginx/html

5. Create a sample HTML page: Inside themkdir htmlhtmldirectory, create a file namedindex.htmlwith the following content:

6. Create a docker-compose.yml file: Inside the<!DOCTYPE html> <html> <head> <title>My Test Server</title> </head> <body> <h1>Welcome to the Test Server powered by Docker Compose!</h1> </body> </html>my-webserverdirectory, create a file nameddocker-compose.ymlwith the following content:

This file tells Docker Compose to build the Dockerfile in the current directory and map port 8080 on your host machine to port 80 on the container. 7. Build and start the services using Docker Compose: In the terminal or command prompt, navigate to theversion: "3" services: webserver: build: . ports: - "8080:80"my-webserverdirectory and run:

This command will build the Docker image and start a container with the Nginx web server. 8. Access the test server: Open your web browser and navigate todocker-compose uphttp://localhost:8080. You should see the "Welcome to the Test Server powered by Docker Compose!" message. 9. Stopping the test server: PressCtrl+Cin your terminal where Docker Compose is running to stop the containers. Alternatively, you can rundocker-compose downin another terminal window to stop and remove the containers. 10. Cleanup (Optional): If you want to remove the built Docker images, you can do so using:

That's it! Using Docker Compose, you've set up a local test server with a basic web page. This example can be extended by adding database services, backend APIs, and other components as needed by defining them in thedocker-compose down --rmi alldocker-compose.ymlfile.

- Overview: Docker Compose is a tool for defining and running multi-container Docker applications. Developers can use a

-

Virtual Machines (VMs):

- Overview: VMs allow developers to run another operating system within their primary OS, creating isolated environments for testing or development.

- Features:

- Full OS Isolation: Run multiple OS instances on a single physical machine.

- Snapshotting: Save the current state and roll back to it as needed, which is useful for testing.

- Network Configurations: Create complex network topologies for testing distributed systems.

- Use Cases: Useful for OS-specific development, testing applications on different OS versions, or simulating production environments locally.

Conclusion: The landscape of tools and platforms for setting up local development environments is diverse, catering to various needs and complexities. By choosing the right tools, developers can ensure a smooth, consistent, and efficient workflow, regardless of where they are or what device they're using.

2.3 Choosing and Configuring Build Tools

A fundamental prerequisite for CI/CD is the ability to build your project reliably from the command line without manual IDE interaction. This forms the basis for automated builds.

2.3.1 Tips on Selecting Build Tools

- "Favor specific, portable tools over hacking"

- ChatGPT summary of paper explanation: "A CI bad smell arises from a sub-optimal selection of tools in the CI pipeline, which can lead to delays and force developers to adopt hacking solutions like custom shell scripts. These scripts may initially solve the problem but can later cause maintainability and portability issues. To avoid this, developers should use suitable plugins instead of shell scripts and be cautious of different versions of tools conflicting with each other on the same server."

- "Do not use out-of-the-box tools, nor listen customers only"

- ChatGPT summary of paper explanation: "Proper configuration of tools is essential in software development, and using external tools with default configurations is a bad practice. Involving developers in defining quality gates is crucial, as relying solely on customer requirements may lead to irrelevant warnings and slow down the CI process. Quality gates should be established with input from both developers and customers to ensure an efficient and effective CI process."

- Build scripts are highly dependent upon the IDE (BM2)

- "The two most positively assessed bad smells were related to the usage of absolute paths in the build (BM1), and the coupling between the build and the IDE (BM2). The high perceived relevance of such smells is justified considering that their presence 26 Fiorella Zampetti et al. will unavoidably limit the portability of the build resulting in statements such as "but it works on my machine"."

- Certain IDEs install their build and compilation software in hard-coded locations that may or may not be used by the configuration files in the program. This means that other people who use different IDEs may not have those exact same paths, which makes the application IDE dependent. This can cause issues with portability, as the CI server must also be set up exactly the same, which isn't guaranteed (as the workspace folder is usually dynamic.) This can cause configuration errors.

- Might be hard to collaborate and share configuration with others, as configuration is mixed in with personal preferences and build settings that are required for the application to run. This can also make it difficult for other people to use their IDEs.

- If the builds are too dependent on the IDE, then it might be difficult to run them or reproduce the environment on CI. This is because the IDE may have custom settings, or special versions of software, that are specific to a single developer. Or, they may be using environment variables injected at build time that the CI does not use. This can change application behavior and make it difficult to build.

2.3.2 Understanding IDE-Specific Build Processes

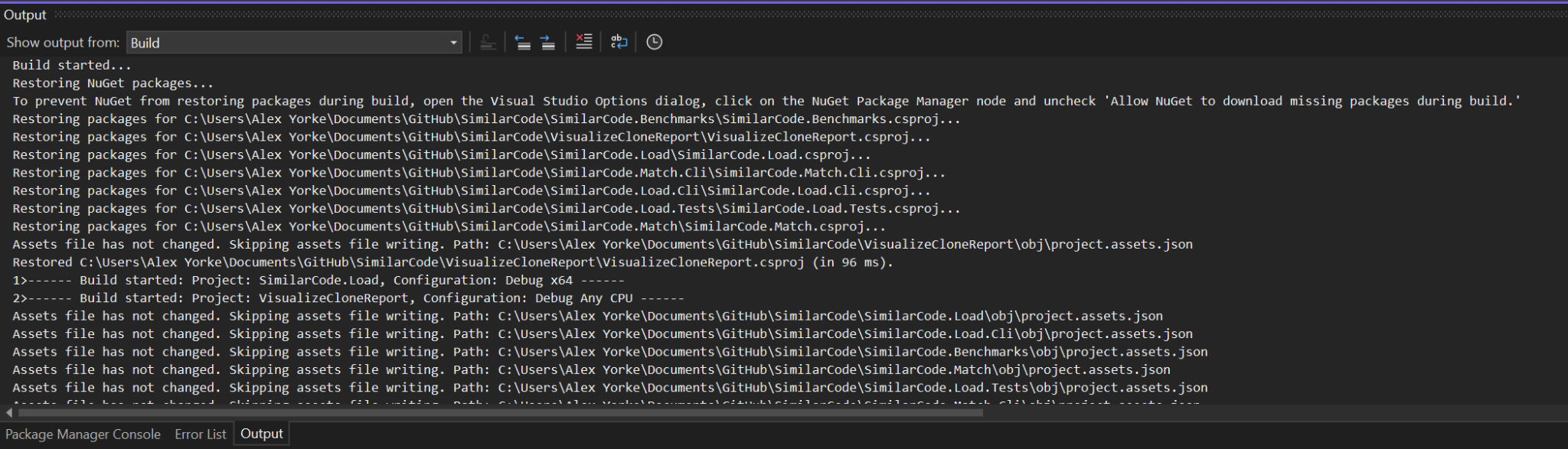

Different IDEs manage build processes uniquely, often abstracting complex commands into user-friendly interfaces with detailed logs available for troubleshooting. For instance, Visual Studio provides build command details through its verbosity settings, while IntelliJ IDEA and Eclipse offer insights via built-in terminals and verbose output settings. Xcode allows developers to track build commands and order through the "Report Navigator."

Different Integrated Development Environments (IDEs) have varied ways of presenting build commands and the order in which they're run. Here are instructions for a couple of popular IDEs:

[Debugging in Visual Studio Code] (i.e., launch.json file)

-

Visual Studio (for C++/C#):

- Build Commands: Visual Studio uses

msbuildfor building its projects. To see the exact build commands:- Go to the "Tools" menu.

- Select "Options."

- Navigate to "Projects and Solutions" -> "Build and Run".

- In the "MSBuild project build output verbosity" dropdown, select "Detailed" or "Diagnostic" to increase the verbosity of the build output.

- Build Order: The build order can also be observed in the output window when you build the solution, especially if you've set the verbosity to "Detailed" or "Normal."

The build log might have many things. This is normally useful for troubleshooting, it's less likely that you'll need to provide manual commands. If you have a legacy project, or it's complex, then you might need to provide custom commands.

The build log might have many things. This is normally useful for troubleshooting, it's less likely that you'll need to provide manual commands. If you have a legacy project, or it's complex, then you might need to provide custom commands.

- Build Commands: Visual Studio uses

-

IntelliJ IDEA (for Java):

- Build Commands: IntelliJ IDEA uses its own builder, but you can see the build commands if you're using Maven or Gradle by looking at the output when you run the respective build lifecycle or task.

- Open the "Terminal" tab (usually at the bottom).

- Run your build tool command, e.g.,

mvn compilefor Maven. - The executed commands will be printed in the terminal.

- Build Order: If you're using a build tool like Maven, the build lifecycle phases determine the order. For a default Java project in IntelliJ, the IDE handles this, and you can infer the order by observing the messages in the "Build" tool window.

- Build Commands: IntelliJ IDEA uses its own builder, but you can see the build commands if you're using Maven or Gradle by looking at the output when you run the respective build lifecycle or task.

-

Eclipse (for Java):

- Build Commands: Eclipse uses its own builder for Java. To see detailed build info:

- Go to "Window" -> "Preferences."

- Navigate to "General" -> "Workspace".

- Check "Enable verbose output for the build."

- Build Order: Eclipse handles the order internally for Java builds. For more detailed projects, you'd likely be using a tool like Maven, in which case the build lifecycle phases determine the order.

- Build Commands: Eclipse uses its own builder for Java. To see detailed build info:

-

Xcode (for C++/Swift/Objective-C):

- Build Commands:

- Go to "Xcode" in the top menu.

- Select "Preferences."

- Navigate to "Locations" tab.

- Set the "Derived Data" location to "Relative".

- After building, in the "Report Navigator" (rightmost tab in the left pane), you can see the build logs. Click on the latest build under the "Build" section.

- Build Order: This is determined by the dependencies set up in your project. You can observe this order in the build logs in the "Report Navigator" after a build.

- Build Commands:

For all these IDEs, reading the output or log pane during a build will give you a good sense of the commands executed and their sequence.

Makefile Example

Here's a simple makefile that demonstrates dependencies and compilation for a C program:

main.o: main.c mathFunctions.h utilFunctions.h

gcc -c main.c

utilFunctions.o: utilFunctions.c utilFunctions.h

gcc -c utilFunctions.c

mathFunctions.o: mathFunctions.c mathFunctions.h

gcc -c mathFunctions.c

This format allows developers to easily manage and scale complex projects with numerous dependencies. Use a makefile when building your software is complex, and you have many dependencies (e.g., C or C++) that can't be easily defined through steps or jobs.

2.3.3 Identifying Project Type and Common Tools

Normally, the type of project you are trying to build can be determined via a few heuristics. First, you can try using github-linguist to determine what programming languages are used in the project the most frequently. Programming languages that are commonly used help indicate which type of project it is.

- Java is code -> byte code -> run it on JVM

- Python is code -> interpreted by interpreter

- C# write code -> compile -> dll (or exe), if dll then it is included (optionally) as part of another program

Use github-linguist to determine what type of project you're running. A project might contain many different languages; this gives you a high level overview of where to start in terms of build script.

In this case, this is clearly a TypeScript project. It also has a packages.json file, indicating that it is an npm project.

alex@DESKTOP-7M8V9ET:/dev/shm$ github-linguist angular-cli/

94.69% 3661931 TypeScript

2.60% 100620 Starlark

1.36% 52459 JavaScript

0.77% 29939 HTML

0.33% 12624 EJS

0.21% 8143 Shell

0.03% 1281 jq

0.00% 160 CSS

0.00% 36 SCSS

There are many ways in which your IDE can be configured. These are the most common build tools for most projects. Consult the documentation for your IDE for more specific instructions, such as if you use special build steps or plugins.

- (Maven: [Maven -- Introduction to the Standard Directory Layout (apache.org)])

- (Gradle: [Organizing Gradle Projects])

- (.NET: [samples/framework/libraries/migrate-library at main · dotnet/samples (github.com)])

2.3.4 Local vs. CI Build Commands

Here is a table to show how you can adapt your commands that you might run in your local environment, to those on a CI.

| Language | Local Development Command | CI Environment Command | Explanation |

|---|---|---|---|

| JavaScript (Node.js) | yarn install or npm install | yarn install --frozen-lockfile or npm ci | In CI, yarn install --frozen-lockfile and npm ci ensure reproducible builds by respecting exact versions in yarn.lock or package-lock.json. |

| Python | pip install -r requirements.txt | pip install --no-deps -r requirements.txt | CI might use --no-deps to avoid installing unnecessary dependencies, relying on an accurately populated requirements.txt. |

| Ruby | bundle install | bundle install --deployment | The --deployment flag in Bundler ensures dependencies are locked to those in Gemfile.lock, similar to yarn install --frozen-lockfile for JavaScript. |

| Java (Maven) | mvn install | mvn -B package --file pom.xml | In CI, Maven might use batch mode (-B) for non-interactive builds and focus on packaging (package) rather than installing (install). |

| Java (Gradle) | gradle build | gradle build -x test | In CI, Gradle might skip certain tasks like testing (-x test) if the tests are run separately in the pipeline. |

| Go | go get ./... | go build or go test | Locally, developers might use go get to fetch dependencies, but in CI, explicit build or test commands are used to ensure compilation and test execution. |

| Rust | cargo build | cargo build --locked | The --locked flag ensures that CI uses the exact versions specified in Cargo.lock. |

| PHP (Composer) | composer install | composer install --no-interaction --prefer-dist | CI environments use flags like --no-interaction and --prefer-dist for non-interactive installs and to prefer distribution packages. |

2.4 Dependency Management

Proper dependency management is crucial for stable and reproducible builds.

-

Inventory Dependencies: Inventory all dependencies.

-

Explicit Versioning: Explicitly show versions in the code to ensure build consistency. Explicit versioning is crucial because relying on the latest version of a package can introduce unforeseen breakages, making debugging and historical comparisons difficult. Always specify the version of your libraries to ensure consistency across development environments. [Best practices for Azure RBAC | Microsoft Learn] (Note: This link seems misplaced, likely intended for the Principle of Least Privilege section elsewhere)

-

Dependency Manifests: Projects may have dependency manifests, which are specific to a certain programming language or project type. Check to see which file(s) exist in the root directory of your project.

-

Reproducible Builds: Automated dependency management is needed (manual dependencies are harder to scan). [GUAC Docs | GUAC] [GitHub - dotnet/reproducible-builds: Contains the DotNet.ReproducibleBuilds package] [bmwiedemann/theunreproduciblepackage: The Unreproducible Package (github.com)] [ftp2.osuosl.org/pub/fosdem/2024/k1105/fosdem-2024-3353-reproducible-builds-the-first-ten-years.mp4]

-

Phantom Dependencies: [ftp2.osuosl.org/pub/fosdem/2024/k4401/fosdem-2024-3146-phantom-dependencies-in-python-and-what-to-do-about-them-.mp4]

-

Reproducible Builds Security: [31c3-6240-en-Reproducible_Builds_mp3.mp3](#c3-6240-en-reproducible_builds_mp3.mp3)

- Reproducible Builds: Closing the Trust Gap in Software Security

This talk, featuring Mike from the Tor Project and Seth from EFF, delves into the crucial security concept of reproducible builds and its increasing relevance in today's software landscape.

Key Points:

- The Trust Gap: Free software promises transparency, but verifying that a binary matches the source code relies on trust in developers and infrastructure. This trust gap exposes users to potential vulnerabilities and malicious code.

- Why Developers Are Targets: Developers' computers and build servers, while often assumed secure, are attractive targets for attackers seeking to compromise widely used software and gain access to millions of machines.

- Reproducible Builds as a Solution: Reproducible builds ensure that anyone can generate an identical binary from the source code, eliminating the single point of failure of the developer's machine and making it significantly harder to inject malicious code undetected.

- Examples & Implementations: The talk highlights successful implementations of reproducible builds, including:

- Tor Browser: Leveraging the Gideon system for reproducible builds across different platforms.

- Debian: Achieving reproducible builds for a significant portion of its package repository.

- F-Droid: Developing a verification server to enhance trust in Android packages.

- Addressing the Trusting Trust Attack: Reproducible builds, combined with techniques like diverse double compilation, offer a way to mitigate the "trusting trust" attack where backdoors can be hidden in compilers and propagate through software generations.

- Challenges & Future Directions: _ Reproducibility efforts require addressing challenges like build environment variations, timestamps, and file system inconsistencies. _ Ensuring software update distribution integrity is crucial and can be enhanced using technologies like blockchain and certificate transparency. * Continuous improvement and adoption of reproducible builds across the software development community are vital for a more secure and trustworthy software ecosystem. This talk effectively emphasizes the importance of reproducible builds for enhancing software security and encourages developers and users to champion this practice for a more trustworthy digital future.

- Reproducible Builds: Closing the Trust Gap in Software Security

This talk, featuring Mike from the Tor Project and Seth from EFF, delves into the crucial security concept of reproducible builds and its increasing relevance in today's software landscape.

Key Points:

-

Private Package Repositories: Some commands may require access to private package repositories, such as a company-specific NPM repository. Setting this up on CI systems typically involves obtaining a Personal Access Token (PAT) specific to the CI. Once obtained, it's crucial to treat the PAT as a secret. Later in this chapter, we'll discuss the preference for using managed identities over PATs wherever possible.

-

Migrating Resources to GitHub: When managing resources on GitHub, you have several strategies depending on your needs. For handling artifacts like packages or executables, using GitHub Packages is advisable for easier access and streamlined authentication, beneficial for both GitHub Actions and remote developers. For resources such as special servers or shared file drives typically hosted on-premises, consider using a self-hosted GitHub runner or deploying a GitHub agent on your cloud. For example, if migrating Docker images or local server packages to GitHub, the process typically involves re-tagging and pushing them to GitHub. Post-migration, setting up access for your team involves configuring authentication methods, possibly using OAuth, and managing permissions for public and private packages, which may require GitHub Enterprise for enhanced control.

-

Proxying Public Registries: Additionally, proxying public registries like npmjs.org with your own server can provide control over package updates and enhance security by allowing you to monitor package usage and identify potentially malicious downloads, ensuring a secure development environment.

2.4.1 Example: Creating and Publishing NPM Packages to GitHub Packages

To create and publish a new NPM package to GitHub Artifacts (assuming you want to use GitHub Packages as your artifact repository), follow these detailed instructions. This guide will also show you how to create three versions of your package.

Step 1: Set Up Your Project

-

Create a New Directory for Your Project:

mkdir my-npm-package cd my-npm-package -

Initialize a New NPM Package: Initialize your project with

npm init. This command will prompt you to enter several pieces of information (like the package name, version, description, etc.), or you can usenpm init -yto accept default values.npm init -y -

Create Your Package: Write the code for your package. Create a new file (e.g.,

index.js) and add your code logic:// Example function in index.js function greet(name) { return `Hello, ${name}!`; } module.exports = greet;

Step 2: Configure GitHub Packages

- Authenticate to GitHub Packages:

You need to authenticate with GitHub Packages to publish your package. Create a

.npmrcfile in your project root:

Replace//npm.pkg.github.com/:_authToken=TOKEN @YOUR-USERNAME:registry=https://npm.pkg.github.comTOKENwith your personal access token (PAT) from GitHub (make sure it has the appropriate scopes for package publication), andYOUR-USERNAMEwith your GitHub username. - Update

package.json: Add apublishConfigsection to yourpackage.jsonto specify the GitHub Packages registry:

Replace"publishConfig": { "registry": "https://npm.pkg.github.com/@YOUR-USERNAME" }, "name": "@YOUR-USERNAME/my-npm-package", "version": "1.0.0"YOUR-USERNAMEwith your GitHub username.

Step 3: Publish Your Package

- Publish the Package:

Ensure you are logged into NPM configured to use your GitHub token, then publish your package:

npm publish - Verify Publication: Check your GitHub repository under the 'Packages' section to see your newly published npm package.

Step 4: Update and Publish New Versions

To publish new versions of your package, you will make changes, update the version in your package.json, and then run npm publish again. Here's how to create three versions:

- Version 1.1.0 (Minor Update):

Make some changes to your code. Then update the version in

package.json:

Publish the updated version:"version": "1.1.0"npm publish - Version 1.1.1 (Patch Update):

Make minor changes or fixes. Update the version:

Publish the patch:"version": "1.1.1"npm publish - Version 2.0.0 (Major Update):

Make significant changes that might break backward compatibility. Update the version:

Publish the new major version:"version": "2.0.0"npm publish

How do I consume this package on GitHub on my developer's machines? They would also need to create their .npmrc file (not committed to Git) with the aforementioned content. You may not want to give all developers package publish permissions.

2.5 Installing Software on CI Runners

The reason why commands like npm ci work is because there is some software pre-installed on the runner (in this case, ubuntu-latest). You can find out more about which software is pre-installed here: [runner-images/images/ubuntu/Ubuntu2204-Readme.md at main · actions/runner-images (github.com)]

When examining dependencies in our script, we need to consider npm, which is pre-installed in the "ubuntu-latest" GitHub Actions environment. However, since the ubuntu-latest image is updated periodically, the npm version may change, Addition to other pre installed packages on the runner, impacting the reproducibility of builds. If reproducibility is compromised extensively, what this means is sometimes there might be errors on the CI CD runner that may suddenly be unexpected given that there is no code changes or the results do not match what is on the developers machines, compromising its integrity and usefulness. However, on the other side, not upgrading software at all means that there is a risk that there could be security vulnerabilities. Therefore, it is important that the software is stable and consistent, but upgraded regularly. [Updating dependencies sucks, so let's do more of that - Depfu Blog]

In scenarios where additional software or specific versions are required, installation can be managed through several methods:

- Official Repositories: Use commands like

sudo apt-get install <package>to install software directly from Ubuntu's repositories. For specific versions, specify the version, e.g.,sudo apt-get install nodejs=16. The advantage of this approach is that it allows for more people to review it and allows packages to remove quickly if there's malicious code, because it's part of a repository that's controlled by a very large community. Disadvantage however is if you need a more recent version then you may have to get it through alternative manners. This is because the Ubuntu repositories usually keep packages for a long time to maintain stability.aptpackages are apparently pretty stable per release so unsure if sticking to a specific version is ok (given that it will be subsequently removed.) Perhaps [snapshot.debian.org] could be useful if you were to go back in time and had to reproduce an older build for example. [https://unix.stackexchange.com/a/544434/6737] - Bash|Curl Scripts: Software can be installed via bash scripts executed with curl. However, this method poses risks such as unverified script integrity and potential partial execution, leading to unpredictable system states. This also can potentially compromise the reproducibility of your build, because it is unclear if the package maintainer will keep the scripts the same or may make modifications to it, or the script may become unavailable. In contrast with using the official repositories, the official repositories are dependent upon by many people and so the packages usually remain in the old versions for quite some time. Even back to the Ubuntu versions from 5 or 10 years ago. Unfortunately, some of the scripts may not be written with integrity in mind. It is possible for a script to be partially downloaded or potentially redirect to a malicious website and the script might or the server might time out halfway through the requests. This means that the script will be sent or potentially successful status code, but the script will not be executed in its entirety because it will be cut off. This is frequently remedied using legitimate software repositories that normally back the script in a function to make sure that partial execution is not possible. Don't use

curl | bashbecause there is no trusted maintainer, the URL could redirect (or someone else can take over the domain), network connection is closed, or, it might hide its content via the user-agent check.Invoke-WebRequestfor PS not good because it can execute javascript. [ftp2.osuosl.org/pub/fosdem/2024/ub5230/fosdem-2024-1909-broom-not-included-curling-the-modern-way.mp4] - Private Package Repositories: These are useful when needing trust and security in your software supply chain, though they lack the broad security scanning and community oversight found in public repositories. Advantage is it's very easy to get the latest version of the software or another version by simply changing the version inside of the package string. The disadvantage is again, this is controlled by a third party, and this may not necessarily have all of the auditing requirements commonly found in large package or public package repositories. However, if you can use GPG keys, then you could have at least some semblance of security to make sure that it is published by the intended publisher. Mr. only requires trusting the GPG keys beforehand.